CNN vs. SIFT for Image Retrieval: Alternative or Complementary?

Ke Yan¹,², Yaowei Wang∗,³, Dawei Liang¹,², Tiejun Huang¹,², Yonghong Tian∗∗,¹,²

¹ National Engineering Laboratory for Video Technology, School of EE&CS, Peking University, Beijing, China

² Cooperative Medianet Innovation Center, China

³ Department of Electronic Engineering, Beijing Institute of Technology, China

{keyan, dwliang, tjhuang, yhtian}@pku.edu.cn; yaoweiwang@bit.edu.cn

ABSTRACT

Over the past decade, SIFT has been widely used in vision tasks such as image retrieval. In recent years, deep convolutional neural network (CNN) features have achieved state-of-the-art performance in several tasks such as image classification and object detection. A natural question thus arises: for the image retrieval task, can CNN features substitute for SIFT? In this paper, we experimentally demonstrate that the two kinds of features are highly complementary. Based on this observation, we propose an image representation model, complementary CNN and SIFT (CCS), to fuse CNN and SIFT in a multi-level and complementary way. In particular, it can simultaneously describe scene-level, object-level and point-level content in images. Extensive experiments on four image retrieval benchmarks show that CCS achieves state-of-the-art retrieval results.

Keywords

Multi-level image representation; CNN; SIFT; Complementary CNN and SIFT (CCS)

1. INTRODUCTION

Scale-invariant feature transform (SIFT) [1] has been the

most widely-used hand-crafted feature for content-based im-

age retrieval (CBIR) in the past decade. Technologically,

SIFT is intrinsically robust to geometric transformations

and shows good performance for near-duplicate image re-

trieval [2] [3]. Meanwhile, there are also many works (e.g.,

Fisher vector [4], VLAD [5] and their variants [6] [7] [8]),

that attempt to construct semantically-richer mid-level im-

age representations so as to improve the retrieval perfor-

mance. However, in spite of significant efforts, it is still

difficult to fully bridge the semantic gap between such fea-

∗

Corresponding author: Yaowei Wang and Yonghong Tian.

Permission to make digital or hard copies of all or part of this work for personal or

classroom use is granted without fee provided that copies are not made or distributed

for profit or commercial advantage and that copies bear this notice and the full cita-

tion on the first page. Copyrights for components of this work owned by others than

ACM must be honored. Abstracting with credit is permitted. To copy otherwise, or re-

publish, to post on servers or to redistribute to lists, requires prior specific permission

and/or a fee. Request permissions from permissions@acm.org.

MM ’16, October 15-19, 2016, Amsterdam, Netherlands

c

2016 ACM. ISBN 978-1-4503-3603-1/16/10. . . $15.00

DOI: http://dx.doi.org/10.1145/2964284.2967252

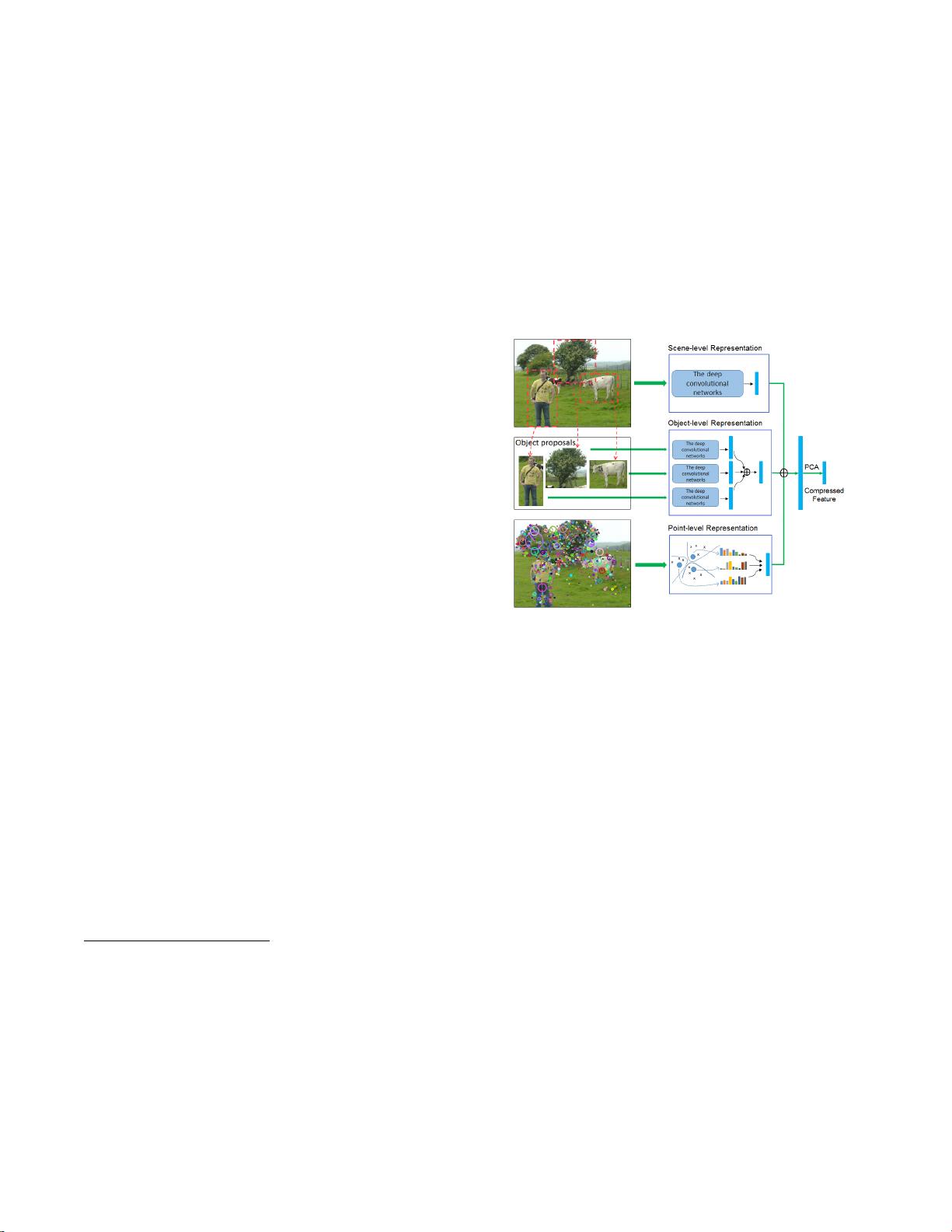

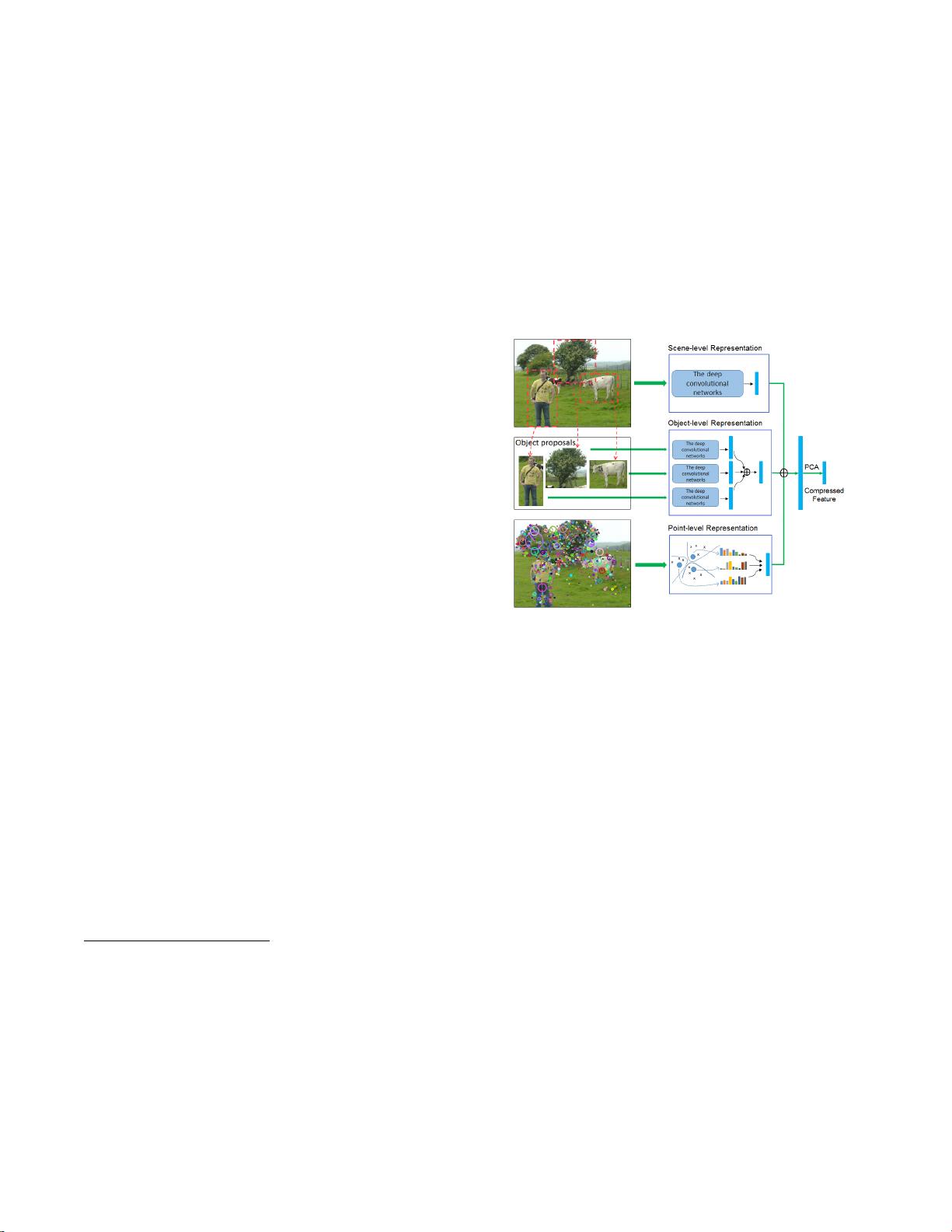

Figure 1: The demonstration of our proposed com-

plementary CNN and SIFT (CCS). The CCS aggre-

gates three level contents, i.e., scene-level, object-

level and point-level representations.

ture representations and human’s understanding of an image

only with SIFT-based features.

Recently, deep convolutional neural networks (CNN) have achieved state-of-the-art performance in several tasks, such as image classification [9] [10], object detection [11] [12] and saliency detection [13]. Compared with hand-crafted features, CNN features, which are learned from large amounts of annotated data (e.g., ImageNet [14]) in a deep learning architecture, carry richer high-level semantic information. Several attempts in CBIR [15] [16] [17] showed that CNN features work well for image retrieval as a scene-level representation. Gong et al. [18] proposed Multi-scale Orderless Pooling (MOP), which represents local information by aggregating CNN features separately at three scales. Konda et al. [19] and Xie et al. [20] detected object proposals and extracted CNN features for each region at the object level. In addition, some researchers have derived image retrieval representations from deep convolutional layers [21] [22] [23] [24]. Although CNN features achieve good performance, we cannot yet claim that CNN always outperforms SIFT. Vijay et al. [25] showed that neither feature was consistently better than the other and that retrieval gains can be obtained by combining the two features.
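As a rough illustration of what combining the two kinds of features can look like, the sketch below performs a simple late fusion: each global descriptor is L2-normalized, the two are concatenated with a weight, and database images are ranked by inner product with the query. This is neither the CCS model proposed in this paper nor the method of [25]; the function names, the weight `w_cnn` and the toy descriptors are assumptions made purely for the example.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    """Scale vectors along the last axis to unit Euclidean length."""
    x = np.asarray(x, dtype=np.float64)
    norms = np.linalg.norm(x, axis=-1, keepdims=True)
    return x / np.maximum(norms, eps)

def fuse(cnn_feats, sift_feats, w_cnn=0.5):
    """Weighted concatenation of L2-normalized descriptors.

    w_cnn is a hypothetical mixing weight in [0, 1]. With square-root
    weights, the inner product of two fused vectors equals
    w_cnn * cos_cnn + (1 - w_cnn) * cos_sift.
    """
    a = np.sqrt(w_cnn) * l2_normalize(cnn_feats)
    b = np.sqrt(1.0 - w_cnn) * l2_normalize(sift_feats)
    return np.concatenate([a, b], axis=-1)

def rank(query, database):
    """Return database indices sorted by decreasing similarity."""
    scores = database @ query
    return np.argsort(-scores)

# Toy descriptors (made up): 3 database images, 2-D "CNN" and 3-D "SIFT".
db_cnn = np.array([[1.0, 0.0], [1.0, 1.0], [0.0, 1.0]])
db_sift = np.array([[0.0, 1.0, 0.0], [1.0, 0.0, 0.0], [1.0, 1.0, 0.0]])
q_cnn = np.array([1.0, 0.0])
q_sift = np.array([1.0, 0.0, 0.0])

db = fuse(db_cnn, db_sift)
q = fuse(q_cnn, q_sift)
order = rank(q, db)
print(order)
```

In this toy setting the "CNN" descriptor alone would rank image 0 first, whereas the fused score favors image 1, which is reasonably similar under both descriptors. In practice the weight would need to be tuned on held-out data.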