LI et al.: WORKLOAD-AWARE ELASTIC STRIPING WITH HOT DATA IDENTIFICATION FOR SSD RAID ARRAYS 817

Fig. 2. Elastic striping: P

2

= D

∗

1

⊕ D

∗

2

⊕ D

∗

4

.

However, parity update in an RAID will introduce extra

I/Os and degrade both the performance and endurance of SSD

RAID. To address this issue, three types of RAID schemes

are developed to reduce the number of I/Os caused by parity

update, e.g., parity logging, parity caching, and elastic strip-

ing. Specifically, parity logging [29] usually uses a dedicated

device to log writes so as to delay parity update and reduce

the amount of I/Os, and it has also been used for deploying

RAID for SSDs. For example, Mao et al. [20] proposed a

design for RAID-4, which uses one HDD as the parity device

to absorb parity writes and uses another HDD as a mirror to

absorb small write requests, and similar idea was also designed

for RAID-6 in [36]. Li et al. [17] proposed EPLog which

extends parity logging with an elastic feature and uses HDDs

as log devices to absorb writes so as to reduce the writes to

SSDs. Differently, parity caching [4], [10], [14], [16]usesa

buffer, e.g., nonvolatile memory, to delay parity updates so

as to reduce the writes to SSDs. At last, elastic striping [12]

chooses to construct new stripes with updated new data chunks

instead of immediately updating the parity chunks in the old

stripe. Considering the good feature of requiring no addi-

tional devices, we focus on the scheme of elastic striping in

this paper. In the following of this section, we first review

how elastic striping works, and then discuss its problem and

motivate our design.

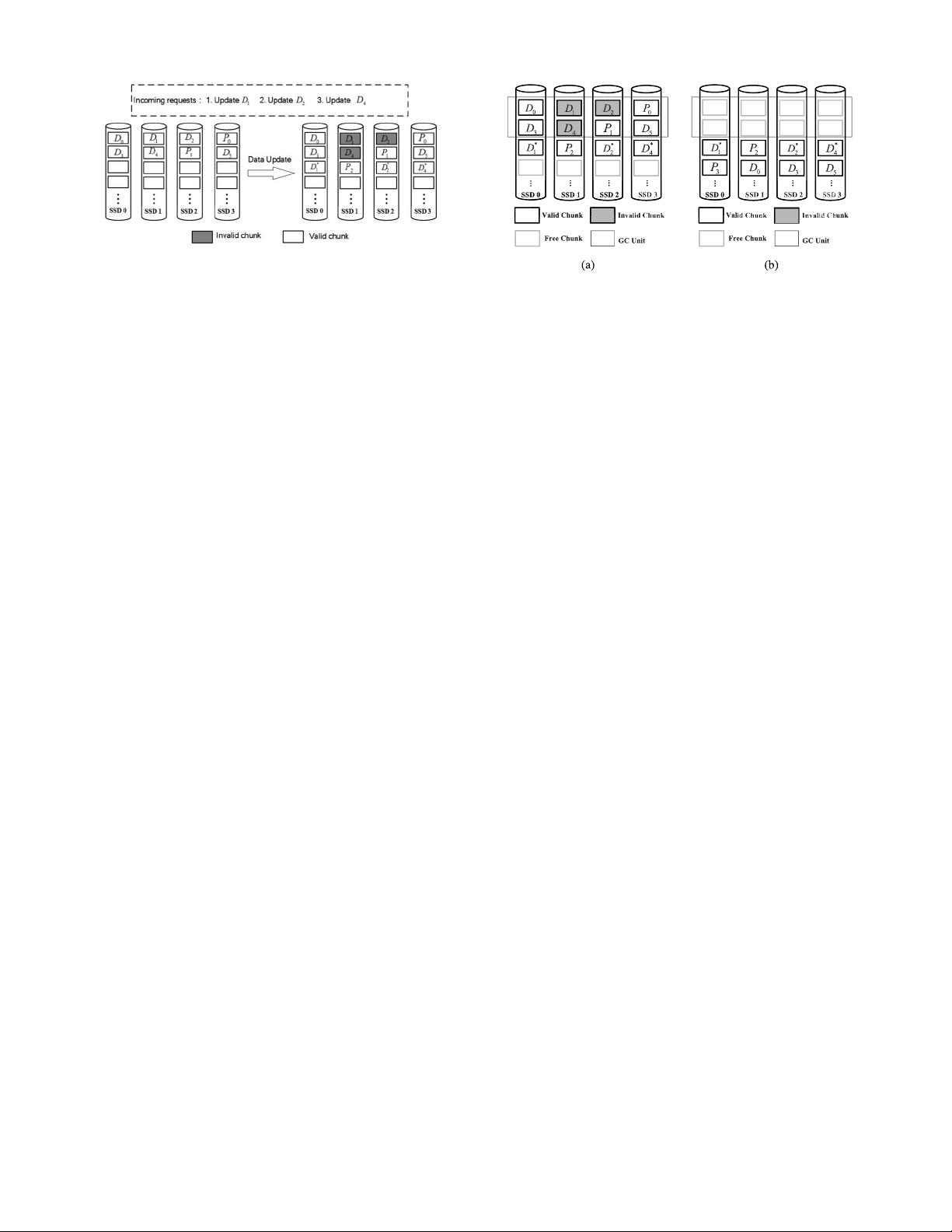

B. Elastic Striping

Elastic striping was first proposed for chip-level RAID in

single SSDs. Its main idea is to reconstruct new stripes with the

newly updated data instead of immediately updating the parity

chunks in the old stripe, so it requires no additional devices

such like nonvolatile memories. Fig. 2 shows an example

which illustrates the scheme.

Suppose that there are six data chunks D

0

–D

5

and two parity

chunks P

0

and P

1

in an SSD RAID at the beginning, and the

incoming requests are: 1) updating D

1

to D

∗

1

; 2) updating D

2

to D

∗

2

; and 3) updating D

4

to D

∗

4

. We assume that the three

update requests arrive sequentially.

Instead of immediately updating D

1

, D

2

, and D

4

and their

corresponding parity chunks, elastic striping manages write

requests in a log-structured manner. Precisely, it appends the

updated data D

∗

1

, D

∗

2

, and D

∗

4

into the RAID array by construct-

ing a new stripe without performing update to the old stripes.

Note that D

1

, D

2

, and D

4

are out-of-date, but still need to

be kept in SSDs for data protection. For space consideration,

elastic striping marks these chunks as invalid at RAID level

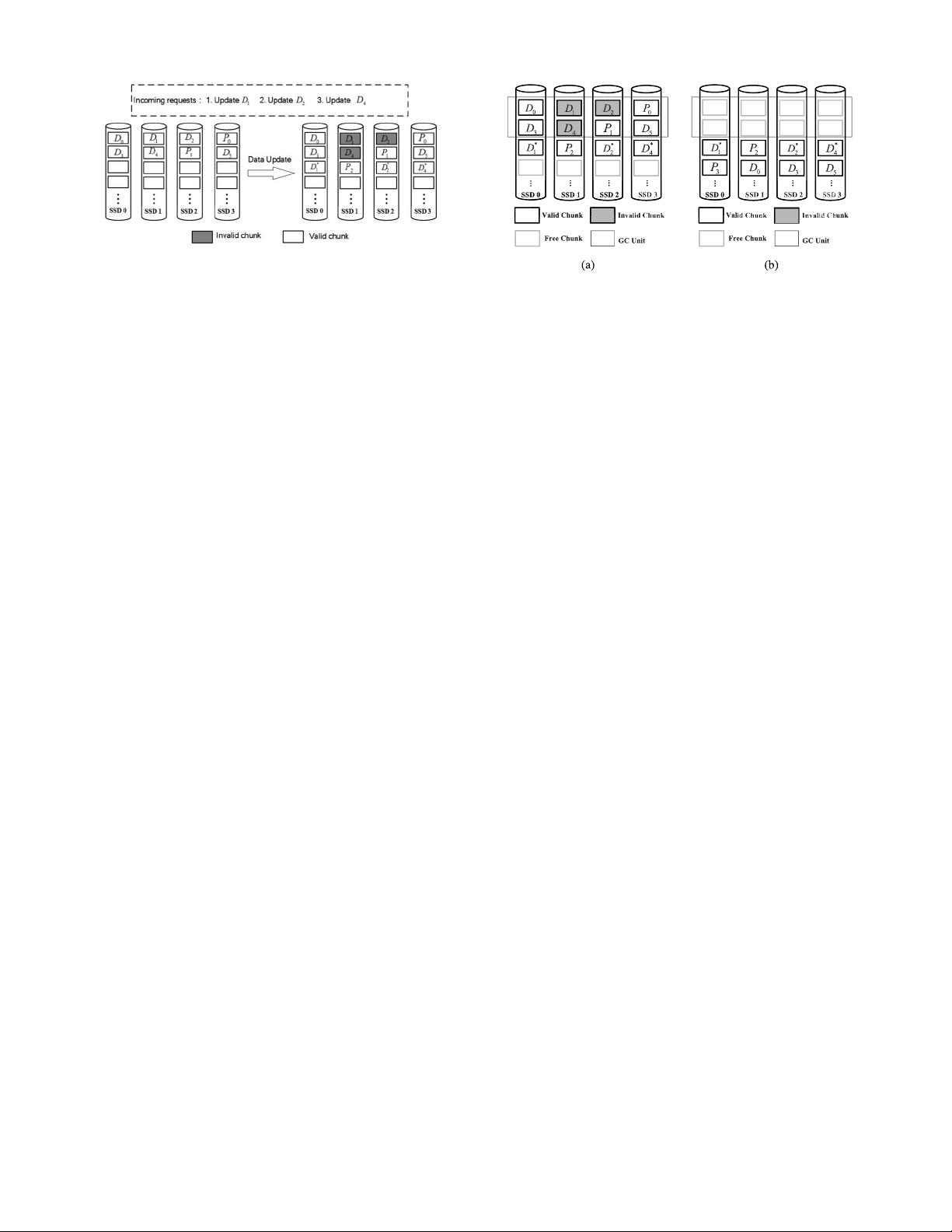

Fig. 3. RAID-level GC. (a) Before GC operation. (b) After GC operation.

and calls GC to reclaim the space occupied by invalid chunks

in future.

The GC works as follows. When it is triggered, it selects a

GC unit, which represents the smallest unit for GC and can

be a multiple of stripes, according to a GC algorithm, then

writes back all the valid data chunks in the selected GC unit

by reconstructing new stripes, and finally releases the space

of the GC unit for future allocation. We call this GC process

RAID-level GC so as to differentiate the GC process inside

single flash chips. We further define the average number of

valid data chunks that need to be written back during each

GC operation as RAID-level GC cost.

To further illustrate the RAID-level GC process, we consider

an example shown in Fig. 3, where a GC unit consists of two

stripes. As shown in the example, there are three valid data

chunks, D

0

, D

3

, and D

5

, in the selected GC unit in Fig. 3(a).

An RAID-level GC operation first reads D

0

, D

3

, and D

5

, then

writes them into free places, and reclaims those invalid chunks

for future allocation as shown in Fig. 3(b).

C. Motivation

We note that skewness and temporal locality exist in real-

world I/O workloads [6], [15], [28]. Skewness indicates that

some data are accessed and updated frequently while others

are updated rarely. Temporal locality means if a data chunk is

accessed at present, it will be accessed with a high probability

in the near future. In particular, many workloads of real-world

applications exhibit the characteristic that 80% of accesses are

directed to only 20% of data, which is the so called “80/20

Rule” [6], [23]. Our analysis of real-world workloads also

validates this property (see Table I). As a result, the mixture

of hot and cold data in SSD RAID will potentially increase

the number of chunk rewrites per RAID-level GC operation,

and finally aggravates the RAID-level GC cost. In order to

explain this, we present a simple numerical analysis by using

the example in Fig. 4.

Now we compare the RAID-level GC cost in two hypothetic

cases so as to study the impact of workload-awareness on elas-

tic striping: 1) hot and cold data are evenly mixed together [see

Fig. 4(a)] and 2) hot and cold data are perfectly separated [see

Fig. 4(b)]. In this example, we consider an 80/20 Rule sce-

nario, in which 20% of data in an SSD RAID is hot and

receives 80% of write requests, and the remaining 80% of

data is cold and receives 20% of writes, and use the num-

ber of write accesses to measure hotness so as to ease the