Lane disputed those estimates

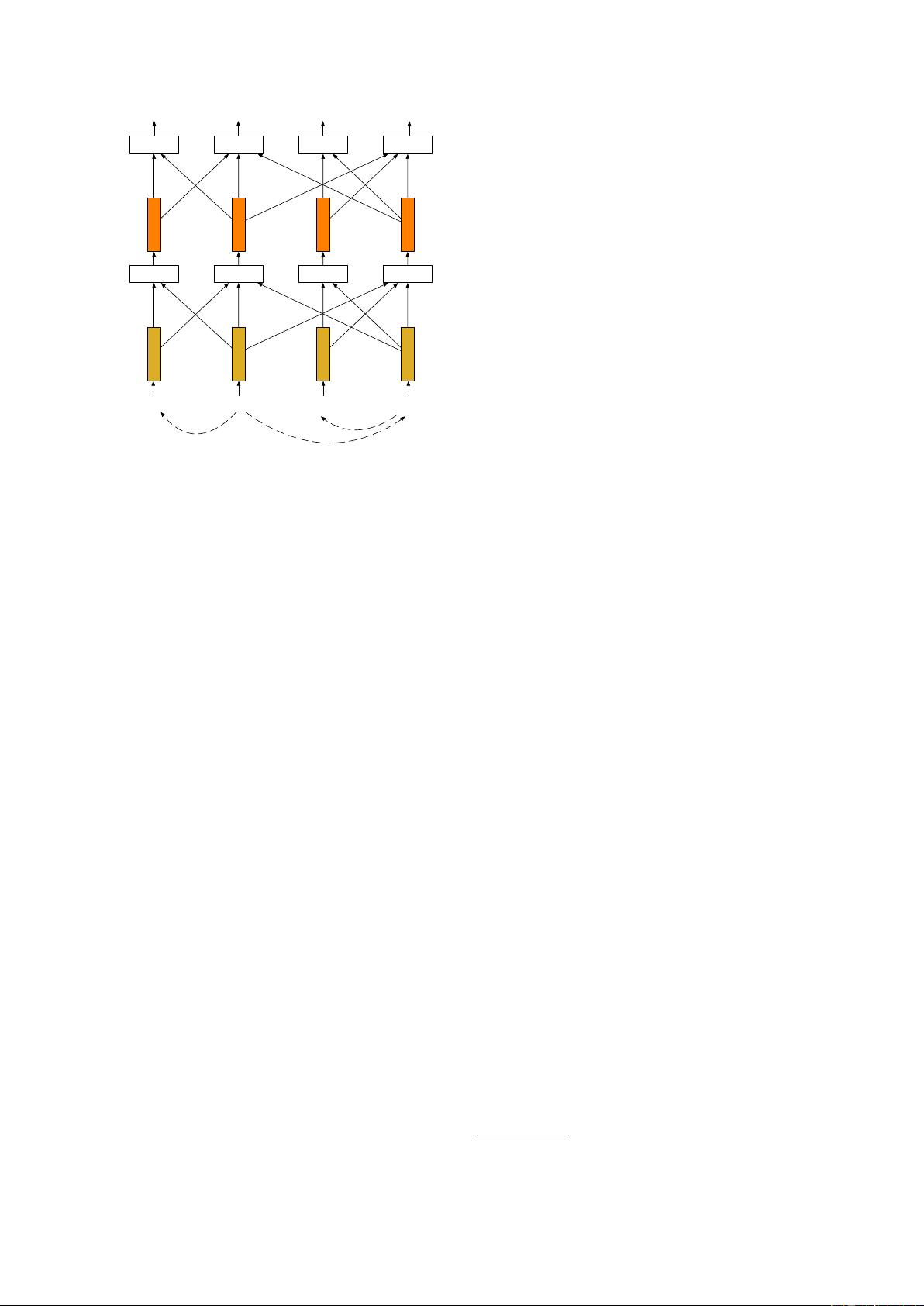

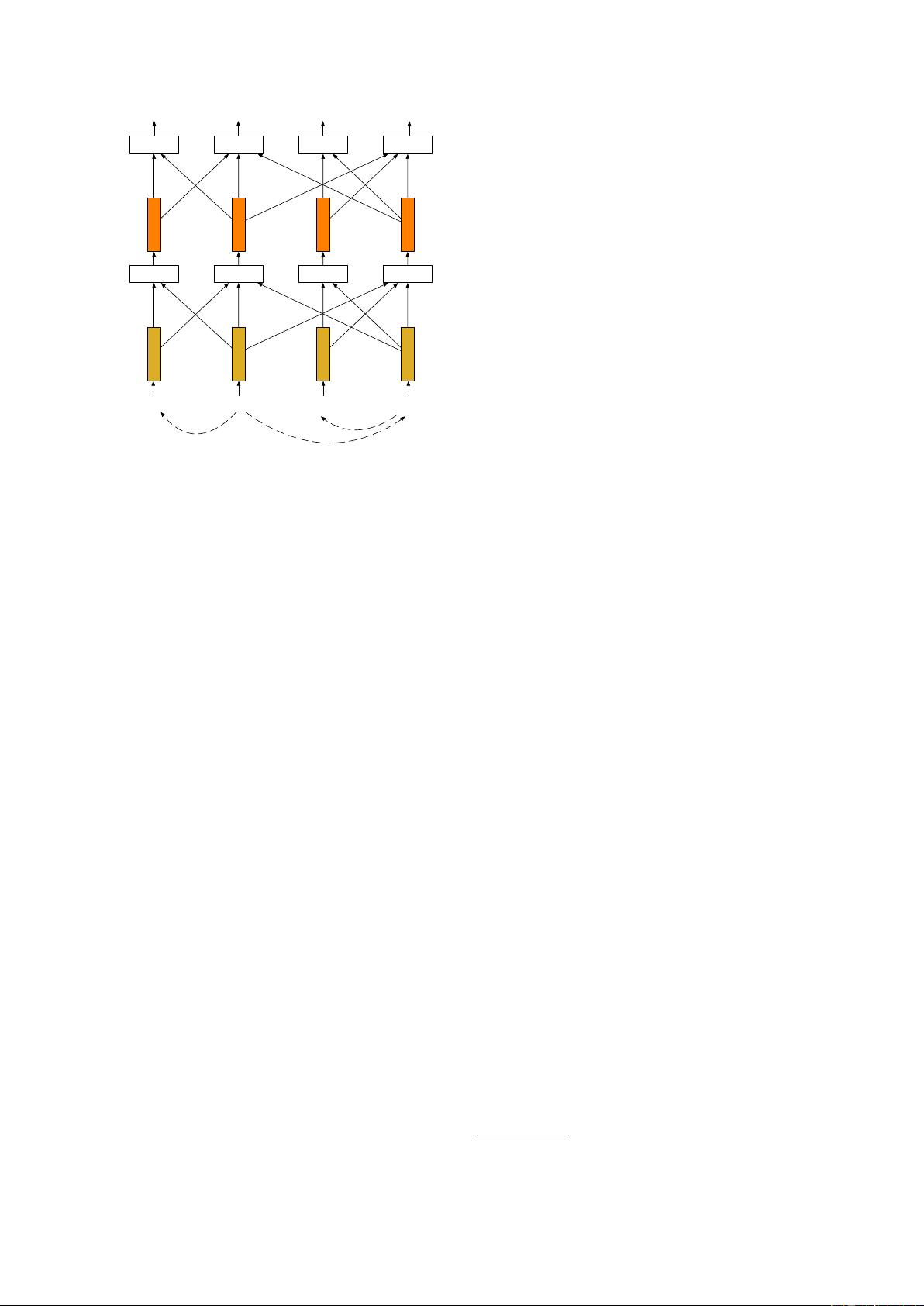

Figure 2: A simplified syntactic GCN (bias terms and gates are omitted); the syntactic graph of the sentence is shown with dashed lines at the bottom. Parameter matrices are sub-indexed with syntactic functions, and apostrophes (e.g., subj') signify that information flows in the direction opposite of the dependency arcs (i.e., from dependents to heads).

As in standard convolutional networks (LeCun et al., 2001), by stacking GCN layers one can incorporate higher degree neighborhoods:

$$h_v^{(k+1)} = \mathrm{ReLU}\left( \sum_{u \in \mathcal{N}(v)} \left( W^{(k)} h_u^{(k)} + b^{(k)} \right) \right)$$

where $k$ denotes the layer number and $h_v^{(1)} = x_v$.
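The effect of stacking can be illustrated with a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the four-node chain graph, the toy 1×1 parameters, the helper names (`relu`, `matvec`, `gcn_layer`, `run_gcn`), and the convention that each neighborhood $\mathcal{N}(v)$ contains $v$ itself. The same parameters are reused in every layer only to keep the arithmetic easy to follow.

```python
def relu(vec):
    """Element-wise ReLU on a plain list of floats."""
    return [max(0.0, x) for x in vec]


def matvec(W, h):
    """Multiply matrix W (a list of rows) by vector h."""
    return [sum(w * x for w, x in zip(row, h)) for row in W]


def gcn_layer(h, neighbors, W, b):
    """One layer: h_v <- ReLU(sum over u in N(v) of (W h_u + b))."""
    out = {}
    for v, nbrs in neighbors.items():
        total = [0.0] * len(b)
        for u in nbrs:
            msg = matvec(W, h[u])
            total = [t + m + bias for t, m, bias in zip(total, msg, b)]
        out[v] = relu(total)
    return out


def run_gcn(x, neighbors, layers):
    """Apply the layers in order, starting from h^(1) = x."""
    h = x
    for W, b in layers:
        h = gcn_layer(h, neighbors, W, b)
    return h


# Hypothetical chain graph 0 - 1 - 2 - 3; each N(v) includes v itself.
neighbors = {0: [0, 1], 1: [0, 1, 2], 2: [1, 2, 3], 3: [2, 3]}
x = {0: [1.0], 1: [0.0], 2: [0.0], 3: [0.0]}  # only node 0 carries signal
W, b = [[1.0]], [0.0]                          # toy 1x1 parameters

two = run_gcn(x, neighbors, [(W, b)] * 2)
three = run_gcn(x, neighbors, [(W, b)] * 3)
print(two[3], three[3])
```

In this toy setting node 3 is three hops away from node 0, so its representation is still zero after two layers and becomes nonzero only after the third.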

3 Syntactic GCNs

As syntactic dependency trees are directed and labeled (we refer to the dependency labels as syntactic functions), we first need to modify the computation in order to incorporate label information (Section 3.1). In the subsequent section, we incorporate gates in GCNs, so that the model can decide which edges are more relevant to the task in question. Having gates is also important as we rely on automatically predicted syntactic representations, and the gates can detect and downweight potentially erroneous edges.

3.1 Incorporating directions and labels

Now, we introduce a generalization of GCNs appropriate for syntactic dependency trees, and in general, for directed labeled graphs. First note that there is no reason to assume that information flows only along the syntactic dependency arcs (e.g., from makes to Sequa), so we allow it to flow in the opposite direction as well (i.e., from dependents to heads). We use a graph $G = (V, E)$, where the edge set contains all pairs of nodes (i.e., words) adjacent in the dependency tree. In our example, both (Sequa, makes) and (makes, Sequa) belong to the edge set. The graph is labeled, and the label $L(u, v)$ for $(u, v) \in E$ both encodes the syntactic function and indicates whether the edge is in the same or opposite direction as the syntactic dependency arc. For example, the label for (makes, Sequa) is subj, whereas the label for (Sequa, makes) is subj', with the apostrophe indicating that the edge is in the direction opposite to the corresponding syntactic arc. Similarly, self-loops will have label self. Consequently, we can simply assume that the GCN parameters are label-specific, resulting in the following computation, also illustrated in Figure 2:

$$h_v^{(k+1)} = \mathrm{ReLU}\left( \sum_{u \in \mathcal{N}(v)} \left( W^{(k)}_{L(u,v)} h_u^{(k)} + b^{(k)}_{L(u,v)} \right) \right).$$
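The labeled edge set described above can be sketched as follows. This is only an illustration: the function name `build_labeled_edges` and the `(head, dependent, function)` triple format are assumptions, and the sketch uses only the single subj arc between makes and Sequa that the running example mentions.

```python
def build_labeled_edges(words, arcs):
    """Return {(u, v): label} for a dependency tree.

    arcs is a list of (head, dependent, function) triples (assumed format).
    Every arc contributes two edges: one along the arc, labeled with the
    syntactic function, and one in the opposite direction, labeled with
    the function plus an apostrophe. Every word also gets a self-loop.
    """
    labels = {}
    for head, dep, func in arcs:
        labels[(head, dep)] = func        # head -> dependent, along the arc
        labels[(dep, head)] = func + "'"  # dependent -> head, opposite
    for w in words:
        labels[(w, w)] = "self"           # self-loop
    return labels


words = ["Sequa", "makes"]
arcs = [("makes", "Sequa", "subj")]
labels = build_labeled_edges(words, arcs)

for edge, label in labels.items():
    print(edge, label)
```

With label-specific parameters, each distinct value in `labels` would index its own weight matrix and bias vector, which is where the parameter count grows quickly.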

This model is over-parameterized,³ especially given that SRL datasets are moderately sized by deep learning standards. So instead of learning the GCN parameters directly, we define them as

$$W^{(k)}_{L(u,v)} = V^{(k)}_{dir(u,v)}, \tag{2}$$

where $dir(u, v)$ indicates whether the edge $(u, v)$ is directed (1) along the syntactic dependency arc, (2) in the opposite direction to it, or (3) is a self-loop; $V^{(k)}_{dir(u,v)} \in \mathbb{R}^{m \times m}$. Our simplification captures the intuition that information should be propagated differently along edges depending on whether this is a head-to-dependent or dependent-to-head edge (i.e., along or opposite the corresponding syntactic arc) and whether it is a self-loop. So we do not share any parameters between these three very different edge types. Syntactic functions are important, but perhaps less crucial, so they are encoded only in the feature vectors $b_{L(u,v)}$.
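A minimal sketch of one layer under this parameter sharing is given below, with gates left out. The helper names (`direction`, `syntactic_gcn_layer`, `matrices_per_layer`), the string keys for the three edge types, and all numeric values are assumptions chosen for illustration; the edge labels follow the subj / subj' / self convention from Section 3.1.

```python
def relu(vec):
    """Element-wise ReLU on a plain list of floats."""
    return [max(0.0, x) for x in vec]


def matvec(W, h):
    """Multiply matrix W (a list of rows) by vector h."""
    return [sum(w * x for w, x in zip(row, h)) for row in W]


def direction(label):
    """Map an edge label to one of the three edge types of dir(u, v)."""
    if label == "self":
        return "self"
    return "opposite" if label.endswith("'") else "along"


def syntactic_gcn_layer(h, labels, V, b):
    """h_v <- ReLU(sum over edges (u, v) of (V[dir(u,v)] h_u + b[L(u,v)]))."""
    dim = len(next(iter(h.values())))
    out = {v: [0.0] * dim for v in h}
    for (u, v), label in labels.items():
        msg = matvec(V[direction(label)], h[u])  # matrix shared per direction
        out[v] = [o + m + bias for o, m, bias in zip(out[v], msg, b[label])]
    return {v: relu(vec) for v, vec in out.items()}


def matrices_per_layer(num_functions, shared):
    """Weight matrices per layer: two directions per function plus self."""
    return 3 if shared else 2 * num_functions + 1


# Toy values (hypothetical), with m = 2.
labels = {("makes", "Sequa"): "subj", ("Sequa", "makes"): "subj'",
          ("makes", "makes"): "self", ("Sequa", "Sequa"): "self"}
h = {"makes": [1.0, 0.0], "Sequa": [0.0, 1.0]}
V = {"along": [[1.0, 0.0], [0.0, 1.0]],
     "opposite": [[2.0, 0.0], [0.0, 2.0]],
     "self": [[0.0, 0.0], [0.0, 0.0]]}
b = {"subj": [0.5, 0.0], "subj'": [0.0, 0.0], "self": [0.0, 0.0]}

h_next = syntactic_gcn_layer(h, labels, V, b)
print(h_next)
print(matrices_per_layer(41, shared=False), matrices_per_layer(48, shared=False))
```

The last line reproduces the counts of 83 and 97 label-specific matrices for 41 and 48 syntactic functions, against 3 matrices per layer once they are shared by direction.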

3.2 Edge-wise gating

Uniformly accepting information from all neighboring nodes may not be appropriate for the SRL

³ Chinese and English CoNLL-2009 datasets used 41 and 48 different syntactic functions, which would result in having 83 and 97 different matrices in every layer, respectively.