FlowNet3D: Learning Scene Flow in 3D Point Clouds

Xingyu Liu*¹, Charles R. Qi*², Leonidas J. Guibas¹,²

¹ Stanford University, ² Facebook AI Research

Abstract

Many applications in robotics and human-computer interaction can benefit from understanding the 3D motion of points in a dynamic environment, widely known as scene flow. While most previous methods focus on stereo and RGB-D images as input, few try to estimate scene flow directly from point clouds. In this work, we propose a novel deep neural network named FlowNet3D that learns scene flow from point clouds in an end-to-end fashion. Our network simultaneously learns deep hierarchical features of point clouds and flow embeddings that represent point motions, supported by two newly proposed learning layers for point sets. We evaluate the network on both challenging synthetic data from FlyingThings3D and real Lidar scans from KITTI. Trained on synthetic data only, our network successfully generalizes to real scans, outperforming various baselines and showing results competitive with the prior art. We also demonstrate two applications of our scene flow output (scan registration and motion segmentation) to show its wide range of potential use cases.

1. Introduction

Scene flow is the 3D motion field of points in the scene [27]. Its projection to an image plane becomes 2D optical flow. It is a low-level understanding of a dynamic environment, without any assumed knowledge of structure or motion of the scene. With this flexibility, scene flow can serve many higher-level applications. For example, it provides motion cues for object segmentation, action recognition, and camera pose estimation, and can even serve as a regularization for other 3D vision problems.
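To make the relationship between scene flow and optical flow concrete, here is a minimal sketch of projecting a 3D displacement onto the image plane. It assumes an ideal pinhole camera; the focal length `f`, the principal point `(cx, cy)`, and the function names are illustrative assumptions, not values or code from the paper.

```python
from typing import Tuple

Point3 = Tuple[float, float, float]
Point2 = Tuple[float, float]


def project(p: Point3, f: float = 1000.0, cx: float = 640.0, cy: float = 360.0) -> Point2:
    """Pinhole projection of a camera-frame 3D point to pixel coordinates.

    The intrinsics (f, cx, cy) are hypothetical defaults chosen for illustration.
    """
    x, y, z = p
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    return (f * x / z + cx, f * y / z + cy)


def optical_flow_from_scene_flow(p: Point3, d: Point3) -> Point2:
    """2D image displacement induced by moving point p by the 3D flow vector d."""
    u0, v0 = project(p)
    moved = (p[0] + d[0], p[1] + d[1], p[2] + d[2])
    u1, v1 = project(moved)
    return (u1 - u0, v1 - v0)


# A point 10 m ahead moving 0.5 m sideways: 1000 * 0.5 / 10 = 50 pixels.
sideways = optical_flow_from_scene_flow((1.0, 0.0, 10.0), (0.5, 0.0, 0.0))

# The same point moving 10 m away from the camera also shifts in the image,
# because its projection moves toward the principal point.
receding = optical_flow_from_scene_flow((1.0, 0.0, 10.0), (0.0, 0.0, 10.0))

print(sideways, receding)
```

The second case shows why optical flow alone loses information: a purely depth-wise 3D motion still appears as a 2D shift, and the 3D motion cannot be recovered from the 2D shift without depth.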

However, for this 3D flow estimation problem, most previous works rely on 2D representations. They extend methods for optical flow estimation to stereo or RGB-D images, and usually estimate optical flow and disparity map separately [33, 28, 16], not directly optimizing for 3D scene flow. These methods cannot be applied to cases where point clouds are the only input.

Very recently, researchers in the robotics community

started to study scene flow estimation directly in 3D point

* indicates equal contributions.

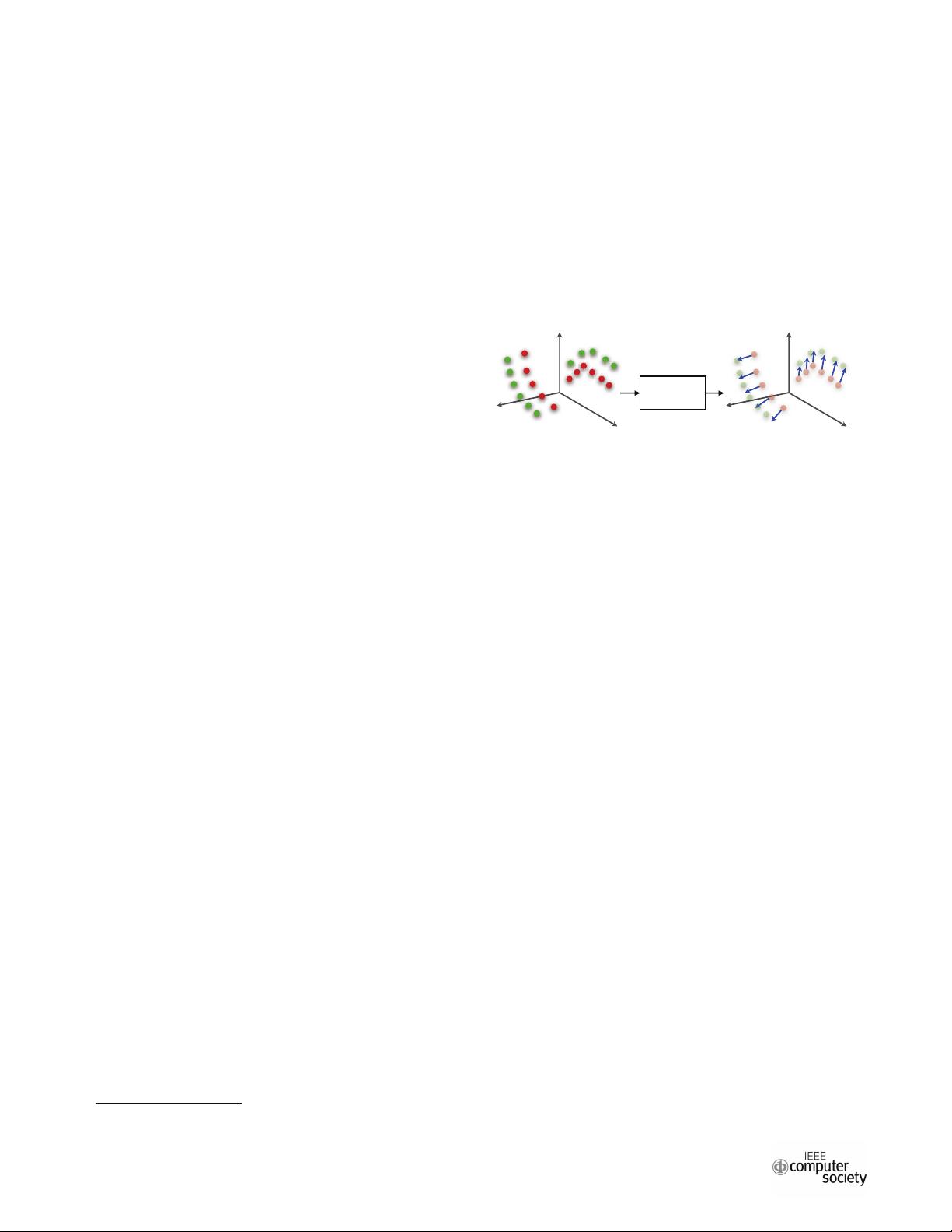

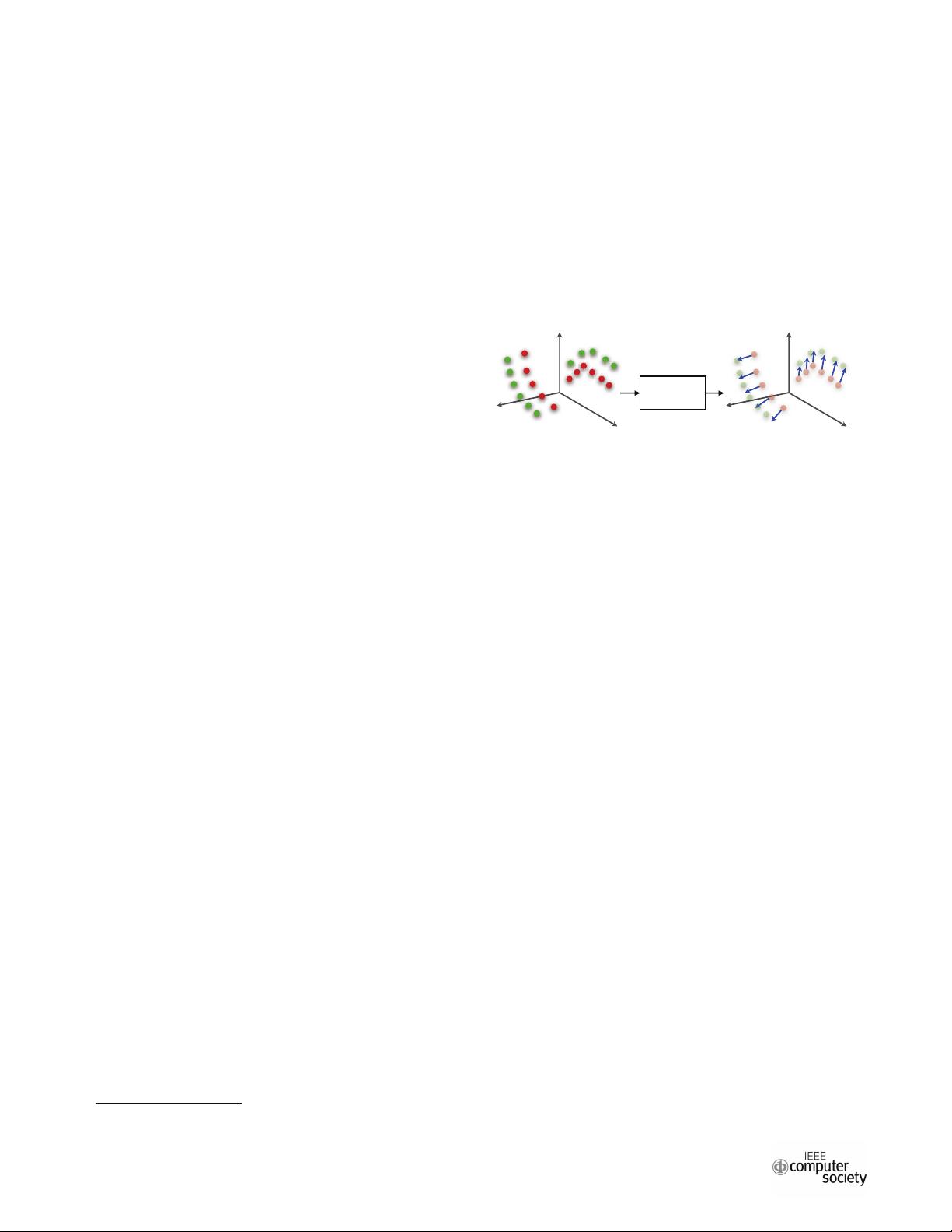

point cloud 1:

point cloud 2:

scene flow:

FlowNet3D

Figure 1: End-to-end scene flow estimation from point

clouds. Our model directly consumes raw point clouds

from two consecutive frames, and outputs dense scene flow

(as translation vectors) for all points in the 1st frame.

clouds (e.g. from Lidar) [7, 25]. But those works did not

benefit from deep learning as they built multi-stage systems

based on hand-crafted features, with simple models such as

logistic regression. There are often many assumptions in-

volved such as assumed scene rigidity or existence of point

correspondences, which make it hard to adapt those systems

to benefit from deep networks. On the other hand, in the

learning domain, Qi et al. [19, 20] recently proposed novel

deep architectures that directly consume point clouds for

3D classification and segmentation. However, their work

focused on processing static point clouds.
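The input/output contract described in the Figure 1 caption can be sketched as follows: two point clouds of possibly different sizes go in, and one translation vector per point of the first cloud comes out. The function `nearest_neighbor_flow` below is a hypothetical stand-in used only to produce an output of the right shape; it is a naive nearest-neighbor baseline and is not FlowNet3D or any method from the paper.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def nearest_neighbor_flow(cloud1: List[Point3], cloud2: List[Point3]) -> List[Point3]:
    """Hypothetical stand-in for a flow estimator (NOT FlowNet3D).

    For each point in cloud1, returns the offset to its closest point in cloud2.
    """
    flows = []
    for p in cloud1:
        q = min(cloud2, key=lambda c: sum((ci - pi) ** 2 for ci, pi in zip(c, p)))
        flows.append(tuple(qi - pi for qi, pi in zip(q, p)))
    return flows


def warp(cloud: List[Point3], flow: List[Point3]) -> List[Point3]:
    """Apply one translation vector per point: p + flow(p)."""
    return [tuple(pi + fi for pi, fi in zip(p, f)) for p, f in zip(cloud, flow)]


# Frame 1 has n1 = 2 points; frame 2 has n2 = 3 points. The two clouds are
# sampled independently, so they need not have the same size or ordering.
cloud1 = [(0.0, 0.0, 5.0), (1.0, 0.0, 5.0)]
cloud2 = [(0.25, 0.0, 5.0), (1.25, 0.0, 5.0), (4.0, 4.0, 9.0)]

flow = nearest_neighbor_flow(cloud1, cloud2)  # one 3D vector per point of frame 1
warped = warp(cloud1, flow)                   # frame-1 points moved by their flow

print(len(flow), flow, warped)
```

Note that the output has as many vectors as the first cloud has points, regardless of the size of the second cloud. A nearest-neighbor rule like this one assumes that a corresponding point exists in the second frame, which is one of the assumptions the text above identifies as limiting.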

In this work, we connect the above two research frontiers by proposing a deep neural network called FlowNet3D that learns scene flow in 3D point clouds end-to-end. As illustrated in Fig. 1, given input point clouds from two consecutive frames (point cloud 1 and point cloud 2), our network estimates a translational flow vector for every point in the first frame to indicate its motion between the two frames. The network, based on the building blocks from [19], is able to simultaneously learn deep hierarchical features of point clouds and flow embeddings that represent their motions. While there are no correspondences between the two sampled point clouds, our network learns to associate points from their spatial localities and geometric similarities, through our newly proposed flow embedding layer. Each output embedding implicitly represents the 3D motion of a point. From the embeddings, the network further up-samples and refines them in an informed way through another novel set upconv layer. Compared to direct feature


2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

978-1-7281-3293-8/19/$31.00 ©2019 IEEE

DOI 10.1109/CVPR.2019.00062