We illustrate the three steps of deriving the RC based on a

polyhedral uncertainty set:

Z ¼f

ζ : Dζ þq Z 0g;

where DA R

mL

; ζ A R

L

, and qA R

m

.

Step 1(worst case reformulation): Notice that (3) is equivalent to

the following worst case reformulation:

a

>

x þ max

ζ

:D

ζ

þq Z 0

ðP

>

xÞ

>

ζ r d: ð4Þ

Step 2(duality): We take the dual of the inner maximization

problem in (4). The inner maximization problem and its dual yield

the same optimal objective value by strong duality. Therefore, (4)

is equivalent to

a

>

x þmin

w

fq

>

w : D

>

w ¼P

>

x; w Z 0gr d: ð5Þ

Step 3(RC): It is important to point out that we can omit the

minimization term in (5), since it is sufficient that the constraint

holds for at least one w. Hence, the final formulation of the RC

becomes

( w : a

>

x þq

>

wr d; D

>

w ¼P

>

x; w Z 0: ð6Þ

Note that the constraints in (6) are linear in xA R

n

and wA R

m

.

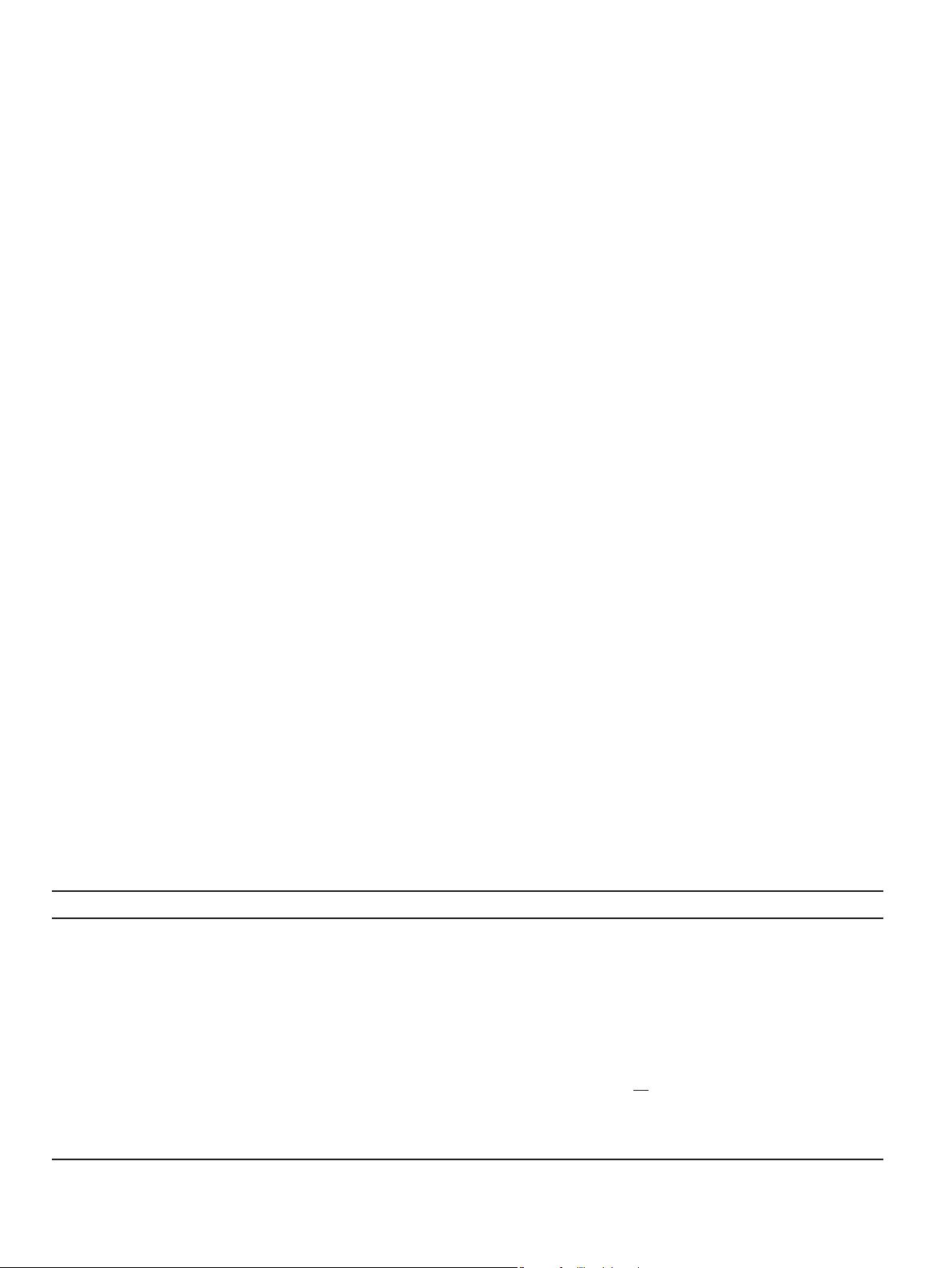

Table 1 presents the tractable robust counterparts of an

uncertain linear optimization problem for different classes of

uncertainty sets. These robust counterparts are derived using the

three steps that are described above. However, we need conic

duality instead of LP duality in Step 2 to derive the tractable robust

counterparts for the conic uncertainty set; see the fourth row of

Table 1. Similarly, to derive the tractable RC for an uncertainty

region specified by general convex constraints, i.e., in the fifth row

of Table 1, we need Fenchel duality in Step 2; see Rockafellar [45]

for details on Fenchel duality, and Ben-Tal et al. [11] for the formal

proof of the associated RC reformulation. Notice that each RC

constraint has a positive safeguard in the constraint left-hand side,

e.g., J P

>

xJ

1

, J P

>

xJ

2

, and q

>

w; see the tractable RCs in the third

column of Table 1. These safeguards represent the level of

robustness that we introduce to the constraints. Note the equiva-

lence between the robust counterparts of the polyhedral and the

conic uncertainty set when K is the nonnegative orthant.

Nonlinear problems: Table 1 focuses on uncertain linear opti-

mization problems, i.e., both linear in the decision variables and

the uncertain parameters. Notice that, different than the presen-

ted results, the original uncertain optimization problem can be

nonlinear in the optimization variables and/or the uncertain

parameters; for more detailed treatment of such problems that

are nonlinear in the uncertain parameters, we refer to Ben-Tal

et al. [9, pp. 383–388] and Ben-Tal et al. [11].

Adversarial approach: If the robust counterpart cannot be

written as or approximated by a tractable reformulation, we

advocate to perform the so-called adversarial approach. The

adversarial approach starts with a finite set of scenarios S

i

Z

i

for the uncertain parameter in constraint i. For example, at the

start, S

i

only contains the nominal scenario. Then, the robust

optimization problem, which has a finite number of constraints

since Z

i

has been replaced by S

i

, is solved. If the resulting solution

is robust feasible, we have found the robust optimal solution. If

that is not the case, we can find a scenario for the uncertain

parameter that makes the last found solution infeasible, e.g., we

can search for the scenario that maximizes the infeasibility. We

add this scenario to S

i

, and solve the resulting robust optimization

problem, and so on. For a more detailed description, we refer to

Bienstock and Özbay [21]. It appeared that this simple approach

often converges to optimality in a few number of iterations. The

advantage of this approach is that solving the robust optimization

problem with S

i

instead of Z

i

in each iteration, preserves the

structure of the original optimization problem. Only constraints of

the same type are added, since constraint i should hold for all

scenarios in S

i

. This approach could be faster than reformulating,

even for polyhedral uncertainty sets. See Bertsimas et al. [20] for a

comparison. Alternatively, if the probability distribution of the

uncertain parameter is known, one may also use the randomized

sampling of the uncertainty set proposed by Calafiore and Campi

[23]. The randomized approach substitutes the infinitely many

robust constraints with a finite set of constraints that are

randomly sampled. It is shown that such a randomized approach

is an accurate approximation of the original uncertain problem

provided that a sufficient number of samples is drawn; see Campi

and Garatti [24, Theorem 1].

Pareto efficiency: Iancu and Trichakis [38] discovered that “the

inherent focus of RO on optimizing performance only under worst

case outcomes might leave decisions un-optimized in case a non-

worst case scenario materialized”. Therefore, the “classical” RO

framework might lead to a Pareto inefficient solution; i.e., an

alternative robust optimal solution may guarantee an improve-

ment in the objective or slack size for (at least) one scenario

without deteriorating it in other scenarios. Given a robust optimal

solution, Iancu and Trichakis propose optimizing a new problem to

Table 1

Tractable reformulations for the uncertain constraint [ðaþP

ζ

Þ

>

xr d 8

ζ

A Z] for different types of uncertainty sets.

Uncertainty Z Robust counterpart Tractability

Box

J

ζ

J

1

r 1

a

>

xþ J P

>

xJ

1

r d

LP

Ellipsoidal

J

ζ

J

2

r 1

a

>

xþ J P

>

xJ

2

r d

CQP

Polyhedral

D

ζ

þqZ 0

a

>

xþq

>

wr d

D

>

w ¼P

>

x

wZ 0

8

>

<

>

:

LP

Cone (closed, convex, pointed)

D

ζ

þqA K

a

>

xþq

>

wr d

D

>

w ¼P

>

x

wA K

n

8

>

<

>

:

Conic opt.

Convex cons.

h

k

ð

ζ

Þr 0 8k

a

>

xþ

P

k

u

k

h

n

k

w

k

u

k

r d

P

k

w

k

¼P

>

x

uZ 0

8

>

>

>

<

>

>

>

:

Convex opt.

n

h

n

is the convex conjugate function, i.e, h

n

ðxÞ¼sup

y

fx

>

yhðyÞg; and K

n

is the dual cone of K. Source: Ben-Tal et al. [11].

B.L. Gorissen et al. / Omega 53 (2015) 124–137126