gorithms are better expressed using a bulk-synchronous

parallel model (BSP) using message passing to com-

municate between vertices, rather than the heavy, all-

to-all communication barrier in a fault-tolerant, large-

scale MapReduce job [22]. This mismatch became an

impediment to users’ productivity, but the MapReduce-

centric resource model in Hadoop admitted no compet-

ing application model. Hadoop’s wide deployment in-

side Yahoo! and the gravity of its data pipelines made

these tensions irreconcilable. Undeterred, users would

write “MapReduce” programs that would spawn alter-

native frameworks. To the scheduler they appeared as

map-only jobs with radically different resource curves,

thwarting the assumptions built into to the platform and

causing poor utilization, potential deadlocks, and insta-

bility. YARN must declare a truce with its users, and pro-

vide explicit [R8:] Support for Programming Model

Diversity.

Beyond their mismatch with emerging framework re-

quirements, typed slots also harm utilization. While the

separation between map and reduce capacity prevents

deadlocks, it can also bottleneck resources. In Hadoop,

the overlap between the two stages is configured by the

user for each submitted job; starting reduce tasks later

increases cluster throughput, while starting them early

in a job’s execution reduces its latency.

3

The number of

map and reduce slots are fixed by the cluster operator,

so fallow map capacity can’t be used to spawn reduce

tasks and vice versa.

4

Because the two task types com-

plete at different rates, no configuration will be perfectly

balanced; when either slot type becomes saturated, the

JobTracker may be required to apply backpressure to job

initialization, creating a classic pipeline bubble. Fungi-

ble resources complicate scheduling, but they also em-

power the allocator to pack the cluster more tightly.

This highlights the need for a [R9:] Flexible Resource

Model.

While the move to shared clusters improved utiliza-

tion and locality compared to HoD, it also brought con-

cerns for serviceability and availability into sharp re-

lief. Deploying a new version of Apache Hadoop in a

shared cluster was a carefully choreographed, and a re-

grettably common event. To fix a bug in the MapReduce

implementation, operators would necessarily schedule

downtime, shut down the cluster, deploy the new bits,

validate the upgrade, then admit new jobs. By conflat-

ing the platform responsible for arbitrating resource us-

age with the framework expressing that program, one

is forced to evolve them simultaneously; when opera-

tors improve the allocation efficiency of the platform,

3

This oversimplifies significantly, particularly in clusters of unreli-

able nodes, but it is generally true.

4

Some users even optimized their jobs to favor either map or reduce

tasks based on shifting demand in the cluster [28].

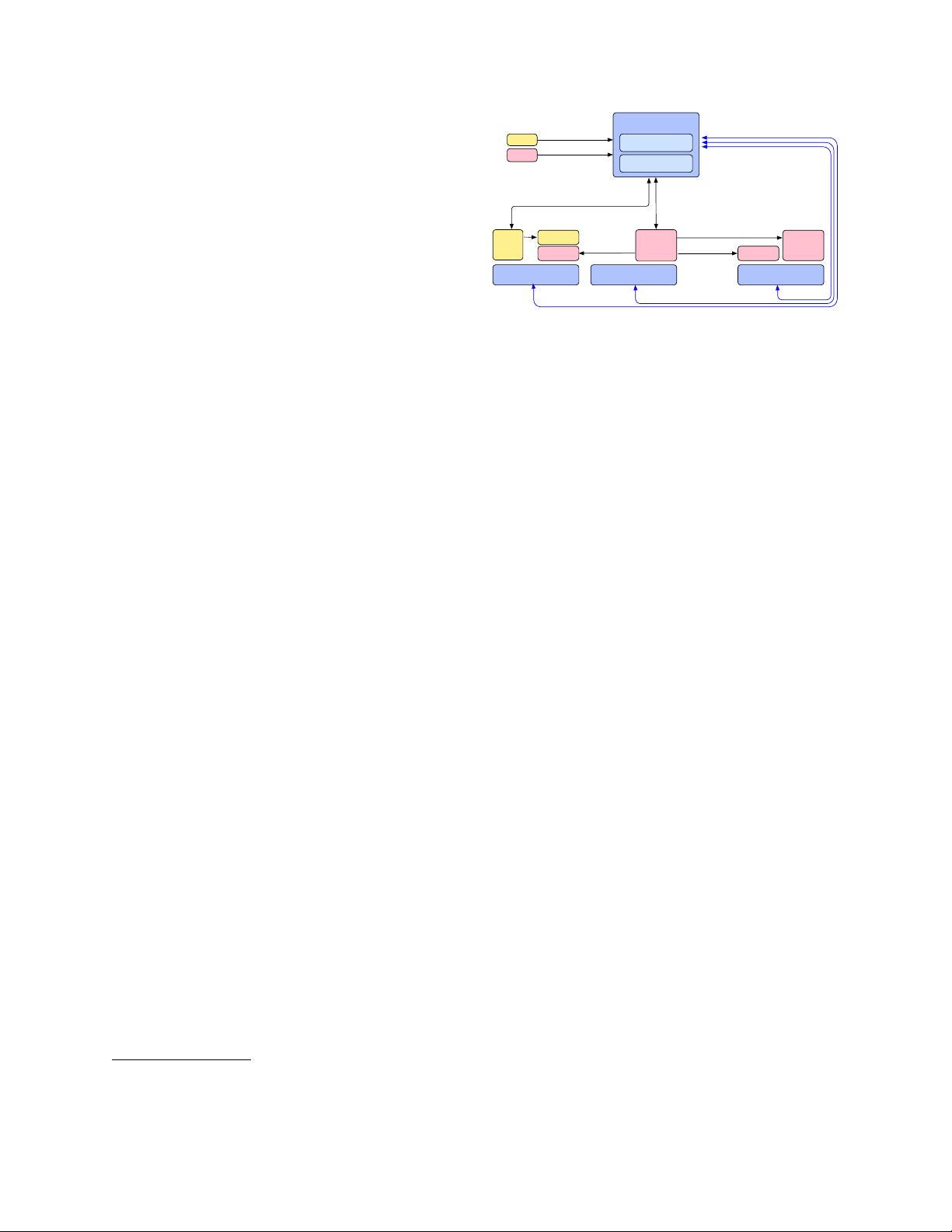

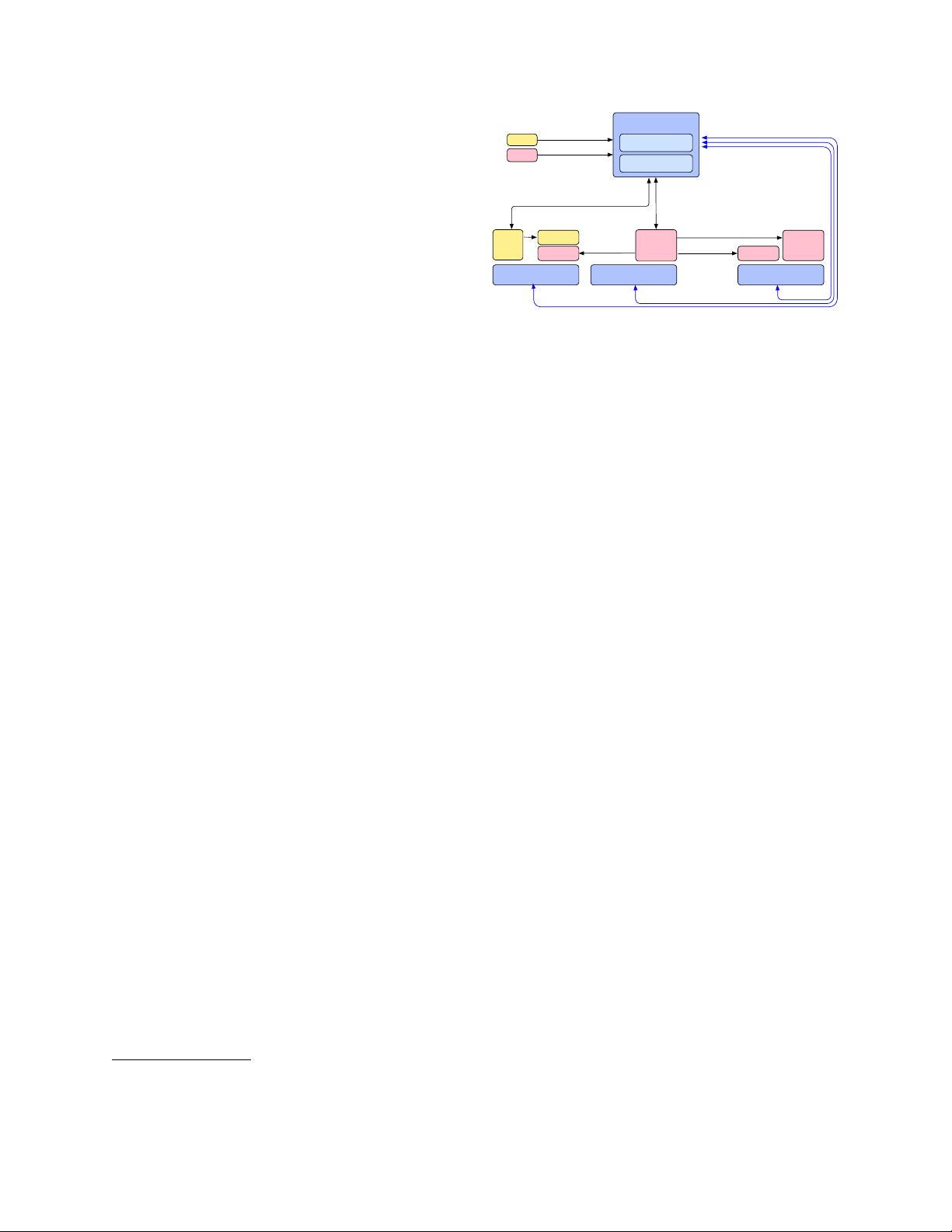

Node ManagerNode Manager Node Manager

ResourceManager

Scheduler

AMService

MR

AM

Container

Container

Container

MPI

AM

..

.

Container

RM -- NodeManager

RM -- AM

Umbilical

client

client

Client -- RM

Figure 1: YARN Architecture (in blue the system components,

and in yellow and pink two applications running.)

users must necessarily incorporate framework changes.

Thus, upgrading a cluster requires users to halt, vali-

date, and restore their pipelines for orthogonal changes.

While updates typically required no more than re-

compilation, users’ assumptions about internal frame-

work details—or developers’ assumptions about users’

programs—occasionally created blocking incompatibil-

ities on pipelines running on the grid.

Building on lessons learned by evolving Apache Ha-

doop MapReduce, YARN was designed to address re-

quirements (R1-R9). However, the massive install base

of MapReduce applications, the ecosystem of related

projects, well-worn deployment practice, and a tight

schedule would not tolerate a radical redesign. To avoid

the trap of a “second system syndrome” [6], the new ar-

chitecture reused as much code from the existing frame-

work as possible, behaved in familiar patterns, and left

many speculative features on the drawing board. This

lead to the final requirement for the YARN redesign:

[R10:] Backward compatibility.

In the remainder of this paper, we provide a descrip-

tion of YARN’s architecture (Sec. 3), we report about

real-world adoption of YARN (Sec. 4), provide experi-

mental evidence validating some of the key architectural

choices (Sec. 5) and conclude by comparing YARN with

some related work (Sec. 6).

3 Architecture

To address the requirements we discussed in Section 2,

YARN lifts some functions into a platform layer respon-

sible for resource management, leaving coordination of

logical execution plans to a host of framework imple-

mentations. Specifically, a per-cluster ResourceManager

(RM) tracks resource usage and node liveness, enforces

allocation invariants, and arbitrates contention among

tenants. By separating these duties in the JobTracker’s

charter, the central allocator can use an abstract descrip-

tion of tenants’ requirements, but remain ignorant of the