KinectFusion: Real-Time Dense Surface Mapping and Tracking∗

Richard A. Newcombe

Imperial College London

Shahram Izadi

Microsoft Research

Otmar Hilliges

Microsoft Research

David Molyneaux

Microsoft Research

Lancaster University

David Kim

Microsoft Research

Newcastle University

Andrew J. Davison

Imperial College London

Pushmeet Kohli

Microsoft Research

Jamie Shotton

Microsoft Research

Steve Hodges

Microsoft Research

Andrew Fitzgibbon

Microsoft Research

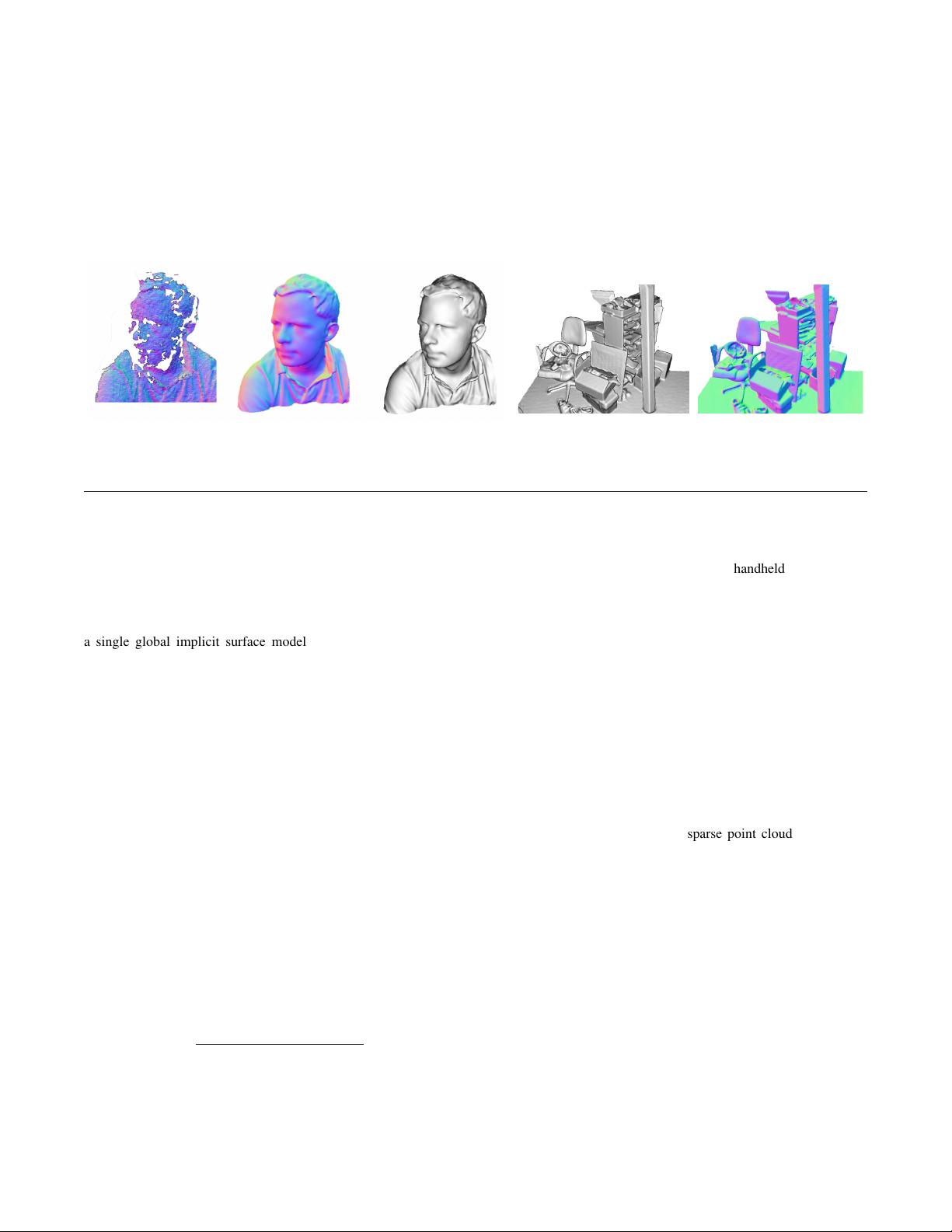

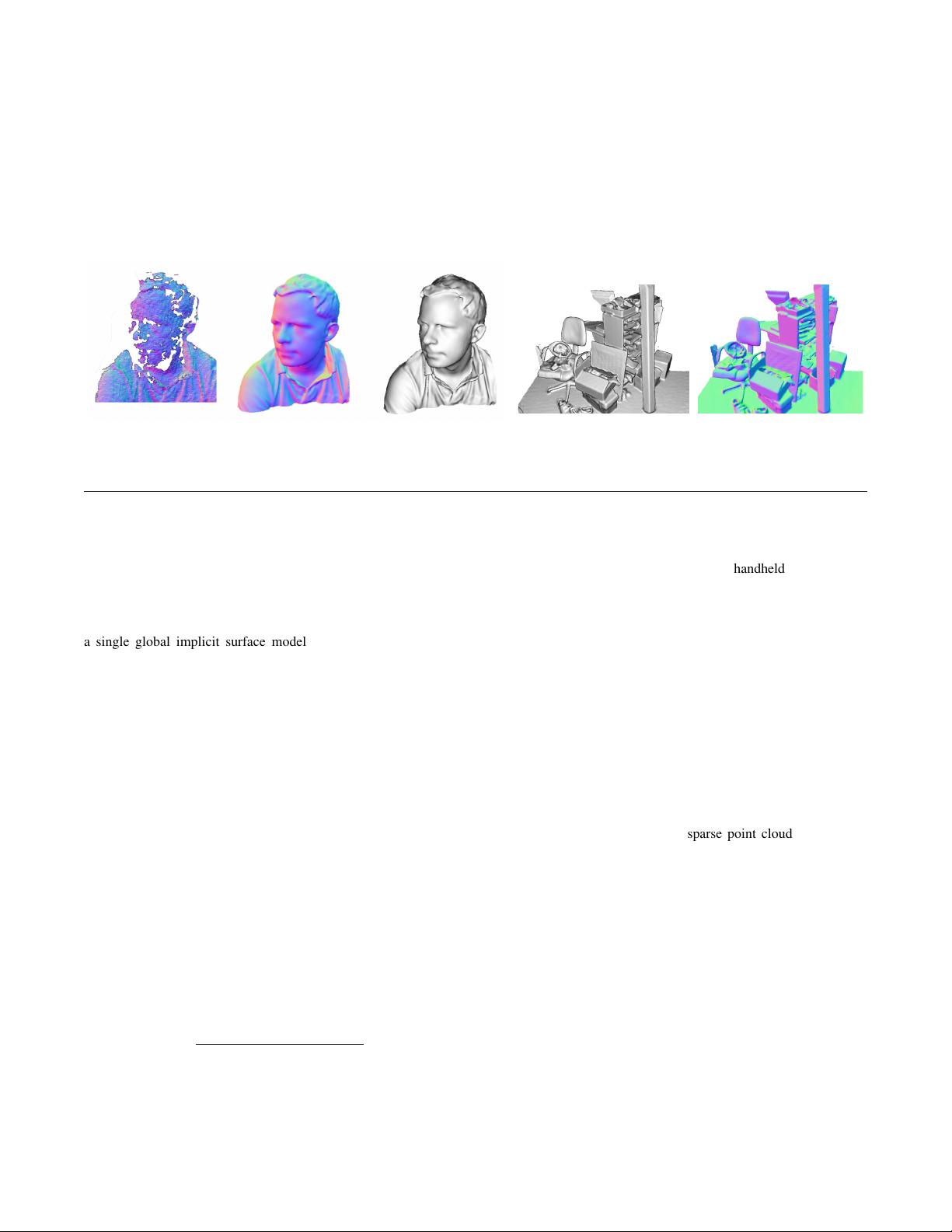

Figure 1: Example output from our system, generated in real-time with a handheld Kinect depth camera and no other sensing infrastructure. Normal maps (colour) and Phong-shaded renderings (greyscale) from our dense reconstruction system are shown. On the left for comparison is an example of the live, incomplete, and noisy data from the Kinect sensor (used as input to our system).

ABSTRACT

We present a system for accurate real-time mapping of complex and arbitrary indoor scenes in variable lighting conditions, using only a moving low-cost depth camera and commodity graphics hardware. We fuse all of the depth data streamed from a Kinect sensor into a single global implicit surface model of the observed scene in real-time. The current sensor pose is simultaneously obtained by tracking the live depth frame relative to the global model using a coarse-to-fine iterative closest point (ICP) algorithm, which uses all of the observed depth data available. We demonstrate the advantages of tracking against the growing full surface model compared with frame-to-frame tracking, obtaining tracking and mapping results in constant time within room-sized scenes with limited drift and high accuracy. We also show both qualitative and quantitative results relating to various aspects of our tracking and mapping system. Modelling of natural scenes, in real-time with only commodity sensor and GPU hardware, promises an exciting step forward in augmented reality (AR). In particular, it allows dense surfaces to be reconstructed in real-time, with a level of detail and robustness beyond any solution yet presented using passive computer vision.

Keywords: Real-Time, Dense Reconstruction, Tracking, GPU, SLAM, Depth Cameras, Volumetric Representation, AR

Index Terms: I.3.3 [Computer Graphics]: Picture/Image Generation - Digitizing and Scanning; I.4.8 [Image Processing and Computer Vision]: Scene Analysis - Tracking, Surface Fitting; H.5.1 [Information Interfaces and Presentation]: Multimedia Information Systems - Artificial, augmented, and virtual realities

∗ This work was performed at Microsoft Research.

1 INTRODUCTION

Real-time infrastructure-free tracking of a handheld camera whilst simultaneously mapping the physical scene in high detail promises new possibilities for augmented and mixed reality applications.

In computer vision, research on structure from motion (SFM) and multi-view stereo (MVS) has produced many compelling results, in particular accurate camera tracking and sparse reconstructions (e.g. [10]), and increasingly reconstruction of dense surfaces (e.g. [24]). However, much of this work was not motivated by real-time applications.

Research on simultaneous localisation and mapping (SLAM) has focused more on real-time markerless tracking and live scene reconstruction based on the input of a single commodity sensor: a monocular RGB camera. ‘Monocular SLAM’ systems such as MonoSLAM [8] and the more accurate Parallel Tracking and Mapping (PTAM) system [17] allow researchers to investigate flexible infrastructure- and marker-free AR applications. But while these systems perform real-time mapping, they were optimised for efficient camera tracking, with the sparse point cloud models they produce enabling only rudimentary scene reconstruction.

In the past year, systems have begun to emerge that combine PTAM’s handheld camera tracking capability with dense surface MVS-style reconstruction modules, enabling more sophisticated occlusion prediction and surface interaction [19, 26]. Most recently in this line of research, iterative image alignment against dense reconstructions has also been used to replace point features for camera tracking [20]. While this work is very promising for AR, dense scene reconstruction in real-time remains a challenge for passive monocular systems which assume the availability of the right type of camera motion and suitable scene illumination.

But while algorithms for estimating camera pose and extracting geometry from images have been evolving at pace, so have the camera technologies themselves. New depth cameras based either on time-of-flight (ToF) or structured light sensing offer dense measurements of depth in an integrated device. With the arrival of Microsoft’s Kinect, such sensing has suddenly reached wide consumer-level accessibility. The opportunities for SLAM and AR