2350 IEEE TRANSACTIONS ON COMPUTER-AIDED DESIGN OF INTEGRATED CIRCUITS AND SYSTEMS, VOL. 37, NO. 11, NOVEMBER 2018

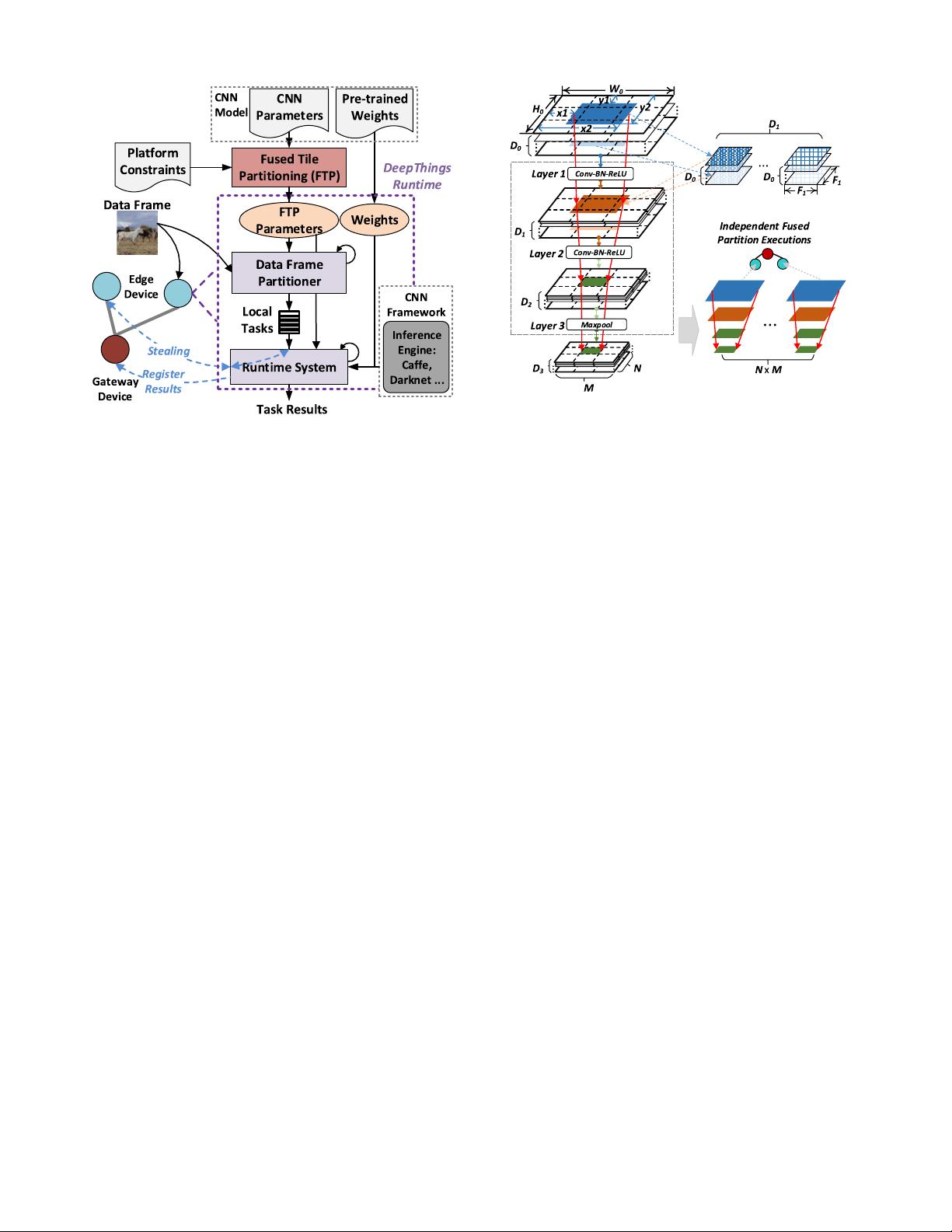

Fig. 3. Overview of the DeepThings framework.

between edge and gateway devices. However, this straightfor-

ward approach cannot explore the inference parallelism within

one data frame, and significant computation is still happening

in each layer. Apart from the long computation time, early

convolutional layers also leave a large memory footprint, as

shown by memory profiling results in Fig. 2. Taking the first

16 layers in You Only Look Once, version 2 (YOLOv2) as an

example, the maximum memory footprint for layer execution

can be as large as 70 MB, where the input and output data

contribute more than 90% of the total memory consumption.

Such a large memory footprint prohibits the deployment of CNN

inference layers directly on resource-constrained IoT edge

devices.
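
To make the input/output dominance concrete, the following is a minimal sketch of a per-layer footprint estimate. It is not the profiling method behind Fig. 2: the function name, the 32-bit value size, the "same" padding, and the 608x608x3 input with 32 filters of size 3x3 are all assumptions chosen for illustration.

```python
def conv_layer_footprint_bytes(w_in, h_in, d_in, f, d_out, stride=1, bytes_per_value=4):
    """Estimate input, output, and weight memory for one convolutional layer.

    Assumes 'same' padding, so the output is ceil(w_in/stride) x ceil(h_in/stride),
    and assumes every value is stored in bytes_per_value bytes.
    """
    w_out = -(-w_in // stride)  # ceiling division
    h_out = -(-h_in // stride)
    input_bytes = w_in * h_in * d_in * bytes_per_value
    output_bytes = w_out * h_out * d_out * bytes_per_value
    weight_bytes = f * f * d_in * d_out * bytes_per_value
    return input_bytes, output_bytes, weight_bytes


# Hypothetical first layer: 608x608 RGB input, 32 filters of size 3x3, stride 1.
inp, out, wgt = conv_layer_footprint_bytes(608, 608, 3, 3, 32)
total = inp + out + wgt
data_share = (inp + out) / total  # fraction of memory used by feature maps
print(f"input+output: {(inp + out) / 2**20:.1f} MiB, share of total: {data_share:.4f}")
```

Under these assumptions the filter weights are negligible next to the feature maps, which is the qualitative pattern the text describes for early layers.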

In this paper, we focus on partition and distribution meth-

ods that enable execution of early stage convolutional layers

on IoT edge clusters with a lightweight memory footprint. The

key idea is to slice original CNN layer stacks into independently

distributable execution units, each with a smaller memory

footprint and maximal memory reuse within each IoT device.

With these small partitions, DeepThings will then dynami-

cally balance the workload among edge clusters to enable

efficient locally distributed CNN inference under time-varying

processing needs.
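
As a rough illustration of why slicing shrinks the per-unit footprint, the sketch below splits a feature map into a rectangular grid of tiles. The grid scheme, the function name `grid_tiles`, and the 608x608 map with a 3x3 grid are assumptions for illustration only; the overlap that convolution needs at tile borders is deliberately ignored.

```python
def grid_tiles(width, height, n_cols, m_rows):
    """Split a width x height feature map into n_cols x m_rows rectangular tiles.

    Returns (x0, y0, x1, y1) bounds with exclusive upper edges.
    Border overlap between neighboring tiles is not modeled here.
    """
    tiles = []
    for j in range(m_rows):
        y0 = j * height // m_rows
        y1 = (j + 1) * height // m_rows
        for i in range(n_cols):
            x0 = i * width // n_cols
            x1 = (i + 1) * width // n_cols
            tiles.append((x0, y0, x1, y1))
    return tiles


# Hypothetical 608x608 feature map cut into a 3x3 grid.
tiles = grid_tiles(608, 608, 3, 3)
areas = [(x1 - x0) * (y1 - y0) for x0, y0, x1, y1 in tiles]
print(f"{len(tiles)} tiles, largest covers {max(areas) / (608 * 608):.1%} of the map")
```

Each tile covers only a fraction of the map, so a device that processes one tile at a time needs to hold correspondingly less feature-map data.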

IV. DEEPTHINGS FRAMEWORK

An overview of the DeepThings framework is shown in

Fig. 3. In general, DeepThings includes an offline CNN

partitioning step and an online executable to enable dis-

tributed adaptive CNN inference under dynamic IoT appli-

cation environments. Before execution, DeepThings takes

structural parameters of the original CNN model as input

and feeds them into a Fused Tile Partitioning (FTP) step. Based

on resource constraints of edge devices, a proper offloading

point between gateway/edge nodes and partitioning parameters

are generated in a one-time offline process. FTP parame-

ters together with model weights are then downloaded into

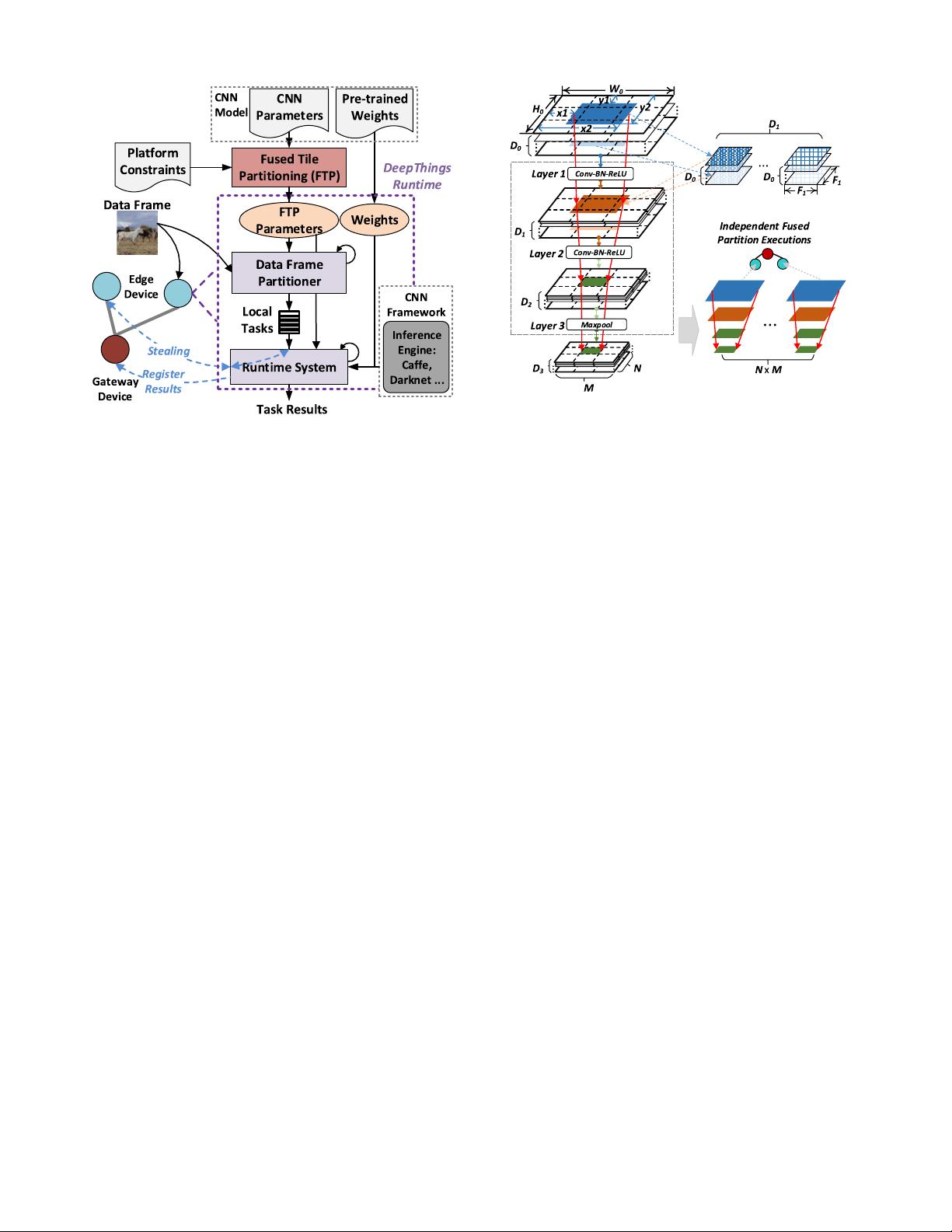

Fig. 4. Fused Tile Partitioning for CNN.

each edge device. For inference, a DeepThings runtime is

instantiated in each IoT device to manage task computa-

tion, distribution, and data communication. Its Data Frame

Partitioner will partition any incoming data frames from local

data sources into distributable and lightweight inference tasks

according to the precomputed FTP parameters. The Runtime

System in turn loads the pretrained weights and invokes an

externally linked CNN inference engine to process the parti-

tioned inference tasks. In the process, the Runtime System will

register itself with the gateway device, which centrally moni-

tors and coordinates work distribution and stealing. If its task

queue runs empty, an IoT edge node will poll the gateway

for devices with active work items and start stealing tasks

by directly communicating with other DeepThings runtimes

in a peer-to-peer fashion. Finally, after edge devices have fin-

ished processing all tasks for a given data source associated

with one of the devices, the gateway will collect and merge

partition results from different devices and finish the remain-

ing offloaded inference layers. A key aspect of DeepThings

is that it is designed to be independent of and general in

supporting arbitrary pretrained models and external inference

engines.
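
The registration, polling, and stealing flow described above can be sketched as a small in-process simulation. This is not the DeepThings runtime: the class names `Gateway` and `EdgeNode`, the method `find_victim`, and the string task labels are hypothetical, and real nodes would communicate over a network rather than share memory.

```python
from collections import deque


class Gateway:
    """Hypothetical coordinator: knows which registered nodes have pending tasks."""

    def __init__(self):
        self.nodes = {}

    def register(self, node):
        self.nodes[node.name] = node

    def find_victim(self, thief):
        # Return any other registered node that still has queued tasks.
        for node in self.nodes.values():
            if node is not thief and node.queue:
                return node
        return None


class EdgeNode:
    """Hypothetical edge runtime with a local task queue."""

    def __init__(self, name, gateway):
        self.name = name
        self.queue = deque()
        self.done = []
        self.gateway = gateway
        gateway.register(self)  # the runtime registers itself with the gateway

    def step(self):
        """Process one task, stealing from a peer if the local queue is empty."""
        if not self.queue:
            victim = self.gateway.find_victim(self)  # poll the gateway
            if victim is None:
                return False
            # Peer-to-peer transfer: take a task directly from the victim's queue.
            self.queue.append(victim.queue.pop())
        self.done.append(self.queue.popleft())
        return True


gateway = Gateway()
a = EdgeNode("edge-a", gateway)
b = EdgeNode("edge-b", gateway)
a.queue.extend(f"tile-{i}" for i in range(6))  # only edge-a has a data source

# Round-robin until no node can make progress.
while True:
    progressed = [node.step() for node in (a, b)]
    if not any(progressed):
        break

results = sorted(a.done + b.done)  # stand-in for the gateway merging partition results
print(len(a.done), len(b.done), results)
```

In this sketch the idle node steals from the tail of the victim's queue while the victim consumes from the head, a common choice to reduce contention; the source does not state which end DeepThings uses.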

A. Fused Tile Partitioning

Fig. 4 illustrates our FTP partitioning on a typical exam-

ple of a CNN inference flow. In DNN architectures, multiple

convolutional and pooling layers are usually stacked together

to gradually extract hidden features from input data. In a

CNN with L layers, for each convolutional operation in layer

$l = 1, \ldots, L$ with input dimensions $W_{l-1} \times H_{l-1}$, a set of $D_l$

learnable filters with dimensions $F_l \times F_l \times D_{l-1}$ are used to

slide across $D_{l-1}$ input feature maps with a stride of $S_l$. In the

process, dot products of the filter sets and corresponding input

region are computed to generate $D_l$ output feature maps with

dimensions $W_l \times H_l$, which in turn form the input maps for

layer $l + 1$. Note that in such convolutional chains of DNNs,

the depth of intermediate feature maps ($D_l$) is usually very