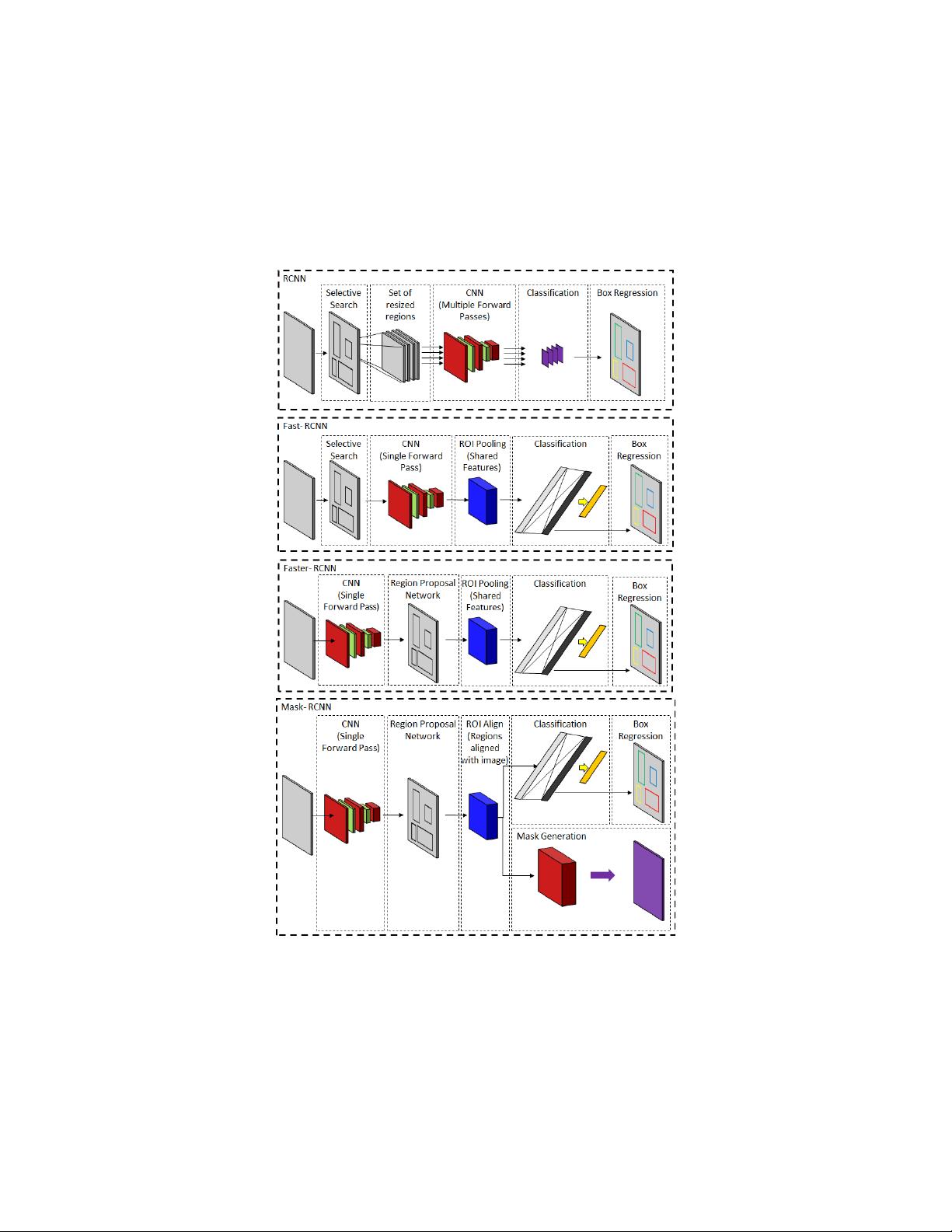

intensities to cluster the pixels into objects. The bounding boxes corresponding

to these segments are passed through classifying networks to short-list some of

the most sensible boxes. Finally, with a simple linear regression network, tighter

coordinates can be obtained. The main downside of the technique is its computational

cost. The network needs to compute a forward pass for every bounding

box proposal. The problem with sharing computation across all boxes was

that the boxes were of different sizes, and hence uniformly sized features were not

achievable. In the upgraded Fast R-CNN [69], ROI (Region of Interest) Pooling

was proposed, in which regions of interest were dynamically pooled to obtain a

fixed-size feature output. Thereafter, the network was mainly bottlenecked by

the selective search technique for candidate region proposal. In Faster R-CNN

[175], instead of depending on external features, the intermediate activation

maps were used to propose bounding boxes, thus speeding up the feature extraction

process. Bounding boxes are representative of the location of the object;

however, they do not provide pixel-level segments. The Faster R-CNN network

was extended as Mask R-CNN [76] with a parallel branch that performed pixel-level,

object-specific binary classification to provide accurate segments. With

Mask R-CNN, an average precision of 35.7 was attained on the COCO [122] test

images. The family of R-CNN algorithms is depicted in Fig. 7. Region

proposal networks have often been combined with other networks [118, 44] to

give instance-level segmentations. R-CNN was further improved under the name

of HyperNet [99] by using features from multiple layers of the feature extractor.

Region proposal networks have also been implemented for instance-specific

segmentation. As mentioned before, the object detection capabilities of approaches

like R-CNN are often coupled with segmentation models to generate

different masks for different instances of the same object [43].
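
The ROI pooling idea described above for Fast R-CNN (regions of different sizes reduced to a fixed-size output) can be illustrated with a minimal sketch. It assumes a single-channel feature map stored as a list of lists and integer, end-exclusive region coordinates; the function name `roi_max_pool` and its parameters are hypothetical and are not taken from [69], which operates on multi-channel feature maps inside a network.

```python
from typing import List, Tuple

FeatureMap = List[List[float]]


def roi_max_pool(feature_map: FeatureMap,
                 roi: Tuple[int, int, int, int],
                 out_h: int = 2,
                 out_w: int = 2) -> FeatureMap:
    """Max-pool one region of a 2-D feature map into an out_h x out_w grid.

    roi = (top, left, bottom, right), end-exclusive, in feature-map cells.
    Illustrative assumption: the region is at least out_h x out_w cells.
    """
    top, left, bottom, right = roi
    roi_h, roi_w = bottom - top, right - left
    if roi_h < out_h or roi_w < out_w:
        raise ValueError("region is smaller than the requested output grid")

    pooled = []
    for i in range(out_h):
        # Integer bin edges so that the bins tile the region exactly.
        r0 = top + (i * roi_h) // out_h
        r1 = top + ((i + 1) * roi_h) // out_h
        row = []
        for j in range(out_w):
            c0 = left + (j * roi_w) // out_w
            c1 = left + ((j + 1) * roi_w) // out_w
            # Each bin contributes its maximum activation.
            row.append(max(feature_map[r][c]
                           for r in range(r0, r1)
                           for c in range(c0, c1)))
        pooled.append(row)
    return pooled


# A 6x6 toy feature map whose value at (r, c) is r * 6 + c.
fmap = [[float(r * 6 + c) for c in range(6)] for r in range(6)]

# Two regions of different sizes both yield a 2x2 output.
small = roi_max_pool(fmap, (0, 0, 4, 6))
large = roi_max_pool(fmap, (1, 2, 6, 5))
```

Because every region is mapped to the same output shape, the layers that follow can be shared across all proposals, which is the property the text attributes to ROI pooling.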

4.1.3 DeepLab

While pixel-level segmentation was effective, two complementary issues were

still affecting the performance. Firstly, smaller kernel sizes failed to capture

contextual information. In classification problems, this is handled using pooling

layers that increase the sensory area of the kernels with respect to the original

image. But in segmentation, pooling reduces the sharpness of the segmented output.

Alternatively, using larger kernels tends to be slower due to the significantly larger

number of trainable parameters. To handle this issue, the DeepLab [30, 32]

family of algorithms demonstrated the usage of various methodologies like atrous

convolutions [211], spatial pyramid pooling [77] and fully connected conditional

random fields [100] to perform image segmentation with great efficiency. The

DeepLab algorithm was able to attain a mean IoU of 79.7 on the PASCAL VOC

2012 dataset [54].
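
For readers unfamiliar with the metric quoted above, the following is a minimal sketch of mean intersection-over-union for a single pair of label maps. It is an illustration under stated assumptions, not the benchmark's evaluation code: the function name `mean_iou` is hypothetical, label maps are plain lists of integer class ids, and classes absent from both maps are skipped. Benchmark scores are typically accumulated over the whole dataset and reported as a percentage.

```python
from typing import List

LabelMap = List[List[int]]


def mean_iou(pred: LabelMap, target: LabelMap, num_classes: int) -> float:
    """Average the per-class intersection-over-union of two label maps."""
    ious = []
    for cls in range(num_classes):
        intersection = 0
        union = 0
        for pred_row, target_row in zip(pred, target):
            for p, t in zip(pred_row, target_row):
                in_pred, in_target = p == cls, t == cls
                intersection += int(in_pred and in_target)
                union += int(in_pred or in_target)
        # Assumption: a class missing from both maps does not count.
        if union > 0:
            ious.append(intersection / union)
    if not ious:
        raise ValueError("no class is present in either label map")
    return sum(ious) / len(ious)


pred = [[0, 0],
        [1, 1]]
target = [[0, 1],
          [1, 1]]

# Class 0: intersection 1, union 2. Class 1: intersection 2, union 3.
score = mean_iou(pred, target, num_classes=2)
```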

Atrous/Dilated Convolution The size of the convolution kernels in any

layer determines the sensory response area of the network. While smaller kernels

extract local information, larger kernels try to focus on more contextual information.

However, larger kernels normally come with a larger number of parameters.
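
The trade-off between kernel size and parameter count can be made concrete with a small one-dimensional sketch. It assumes the standard definition of dilation, in which a kernel with k weights and dilation rate d samples inputs d positions apart and therefore spans k + (k - 1)(d - 1) input positions while keeping k parameters. The function names are hypothetical, and the operation is written as cross-correlation without padding, as is common in deep learning frameworks.

```python
from typing import List


def effective_kernel_size(k: int, dilation: int) -> int:
    """Input positions spanned by a k-tap kernel at the given dilation."""
    return k + (k - 1) * (dilation - 1)


def dilated_conv1d(signal: List[float],
                   kernel: List[float],
                   dilation: int = 1) -> List[float]:
    """1-D dilated cross-correlation with no padding and stride 1."""
    span = effective_kernel_size(len(kernel), dilation)
    output = []
    for start in range(len(signal) - span + 1):
        # Kernel taps are applied to inputs spaced `dilation` apart.
        output.append(sum(w * signal[start + i * dilation]
                          for i, w in enumerate(kernel)))
    return output


signal = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
kernel = [1.0, 1.0, 1.0]  # three parameters in both cases below

dense = dilated_conv1d(signal, kernel, dilation=1)   # spans 3 inputs
atrous = dilated_conv1d(signal, kernel, dilation=2)  # spans 5 inputs
```

In this sketch the dilated kernel covers the same span as a dense five-tap kernel while using three weights, which is the motivation for atrous convolution given in the text.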
