
Image Based Rendering -- Part I

Image-Based Rendering. Shum, Heung-Yeung; Chan, Shing-Chow; Kang, Sing Bing. 2007, XX, 408 p. 95 illus., Hardcover. From http://www.springer.com/computer/computer+imaging/book/978-0-387-21113-8 (if this infringes on your interests, please notify me and I will delete it immediately). The download at this link is split by chapter and has no bookmarks, so I merged the chapters and added fairly complete bookmarks. The full book consists of the following 6 files: front-matter.pdf, PartI Representations and Rendering Techniques.pdf (this file), Part II Sampling.pdf, Part III Compression.pdf, Part IV Systems and Applications.pdf, back-matter.pdf.


Part I

Representations and Rendering Techniques

The first part of the book is a broad survey of IBR representations and rendering techniques. While there is significant overlap between the type of representation and the rendering mechanism, we chose to highlight representational and rendering issues in separate chapters. We devote two chapters to representations: one for (mostly) static scenes, and another for dynamic scenes. (Other relevant surveys on IBR can be found in [155, 339, 345].)

Unsurprisingly, the earliest work on IBR focused on static scenes, mostly due to hardware limitations in image capture and storage. Chapter 2 describes IBR representations for static scenes. More importantly, it sets the stage for other chapters by describing fundamental issues such as the plenoptic function and how the representations are related to it, classifications of representations (no geometry, implicit geometry, explicit geometry), and the importance of view-dependency.

Chapter 3 follows up with descriptions of systems for rendering dynamic scenes. Such systems have become possible with recent advances in image acquisition hardware, higher-capacity drives, and faster PCs. Because the number of cameras is limited, virtually all of these systems rely on extracted geometry for rendering. It is interesting to note their different design decisions, such as generating global 3D models on a per-timeframe basis versus view-dependent layered geometries, and freeform shapes versus model-based ones. These design decisions result in varying rendering complexity and quality.

The type of rendering depends on the type of representation. In Chapter 4, we partition the type of rendering into point-based, layer-based, and monolithic-based rendering. (By monolithic, we mean single geometries such as 3D meshes.) We describe well-known concepts such as forward and backward mapping and ray-selection strategies. We also discuss hardware rendering issues in this chapter.


Additional Notes on Chapters

A significant part of Chapter 2 is based on the journal article "Survey of image-based representations and compression techniques," by H.-Y. Shum, S.B. Kang, and S.-C. Chan, which appeared in IEEE Trans. on Circuits and Systems for Video Technology, vol. 13, no. 11, Nov. 2003, pp. 1020-1037. ©2003 IEEE.

Parts of Chapter 3 were adapted from "High-quality video view interpolation using a layered representation," by C.L. Zitnick, S.B. Kang, M. Uyttendaele, S. Winder, and R. Szeliski, ACM SIGGRAPH and ACM Transactions on Graphics, Aug. 2004, pp. 600-608.

Xin Tong implemented the "locally reparameterized Lumigraph" (LRL) described in Section 2.4. Yin Li and Xin Tong contributed significantly to Chapter 4.

2 Static Scene Representations

In Chapter 1, we introduced the IBR continuum that spans a variety of representations (Figure 1.1). The continuum is ordered by how geometry-centric each representation is. We structure this chapter along this continuum: representations that rely on no geometry are described first, followed by those using implicit geometry (i.e., relationships expressed through image correspondences), and finally those with explicit 3D geometry.

2.1 Rendering with no geometry

We start with representative techniques for rendering with unknown scene geometry. These techniques typically rely on many input images; they also rely on the characterization of the plenoptic function.

2.1.1 Plenoptic modeling

Fig. 2.1. Plenoptic functions: (a) full 7-parameter $(V_x, V_y, V_z, \theta, \phi, \lambda, t)$, (b) 5-parameter $(V_x, V_y, V_z, \theta, \phi)$, and (c) 2-parameter $(\theta, \phi)$.

The original 7D plenoptic function [2] is defined as the intensity of light rays passing through the camera center at every 3D location $(V_x, V_y, V_z)$ at every possible angle $(\theta, \phi)$, for every wavelength $\lambda$, at every time $t$, i.e., $P_7(V_x, V_y, V_z, \theta, \phi, \lambda, t)$.


Adelson and Bergen [2] considered one of the tasks of early vision to be extracting a compact and useful description of the plenoptic function's local properties (e.g., low-order derivatives). Wong et al. [322] have also shown that light source directions can be incorporated into the plenoptic function for illumination control. By removing two variables, time $t$ (hence a static environment) and light wavelength $\lambda$, McMillan and Bishop [194] introduced the notion of plenoptic modeling with the 5D complete plenoptic function of the form $P_5(V_x, V_y, V_z, \theta, \phi)$.

The simplest plenoptic function is a 2D panorama (cylindrical [41] or spherical [291]) when the viewpoint is fixed, namely $P_2(\theta, \phi)$. A regular rectilinear image with a limited field of view can be regarded as an incomplete plenoptic sample at a fixed viewpoint.
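To make $P_2(\theta, \phi)$ concrete, the sketch below looks up a viewing direction in a cylindrical panorama stored as a row-major image. It is a minimal illustration under assumptions not stated in the text: the panorama covers a full 360 degrees, pixels are square, the horizon lies on the middle row, and lookup is nearest-neighbour. The function names `cylindrical_pixel` and `sample_panorama` are hypothetical.

```python
import math

def cylindrical_pixel(theta, phi, width, height):
    """Map a viewing direction (theta, phi) to pixel coordinates (x, y).

    theta: pan angle in radians, measured around the vertical axis.
    phi:   tilt (elevation) angle in radians, with |phi| < pi/2.
    Assumes a full 360-degree panorama with square pixels and the
    horizon on the middle row.
    """
    f = width / (2.0 * math.pi)               # cylinder radius in pixels
    x = (theta % (2.0 * math.pi)) * f         # arc length around the cylinder
    y = height / 2.0 - f * math.tan(phi)      # rows grow downward
    return x, y

def sample_panorama(image, theta, phi):
    """Nearest-neighbour lookup of P2(theta, phi) in a row-major image."""
    height, width = len(image), len(image[0])
    x, y = cylindrical_pixel(theta, phi, width, height)
    col = int(x) % width                      # wrap around the seam
    row = int(math.floor(y))
    if not 0 <= row < height:
        return None                           # outside the vertical field of view
    return image[row][col]
```

A cylinder cannot represent directions close to straight up or down, which is why `sample_panorama` returns `None` there; a spherical panorama would instead make the row linear in $\phi$ and cover the full sphere.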

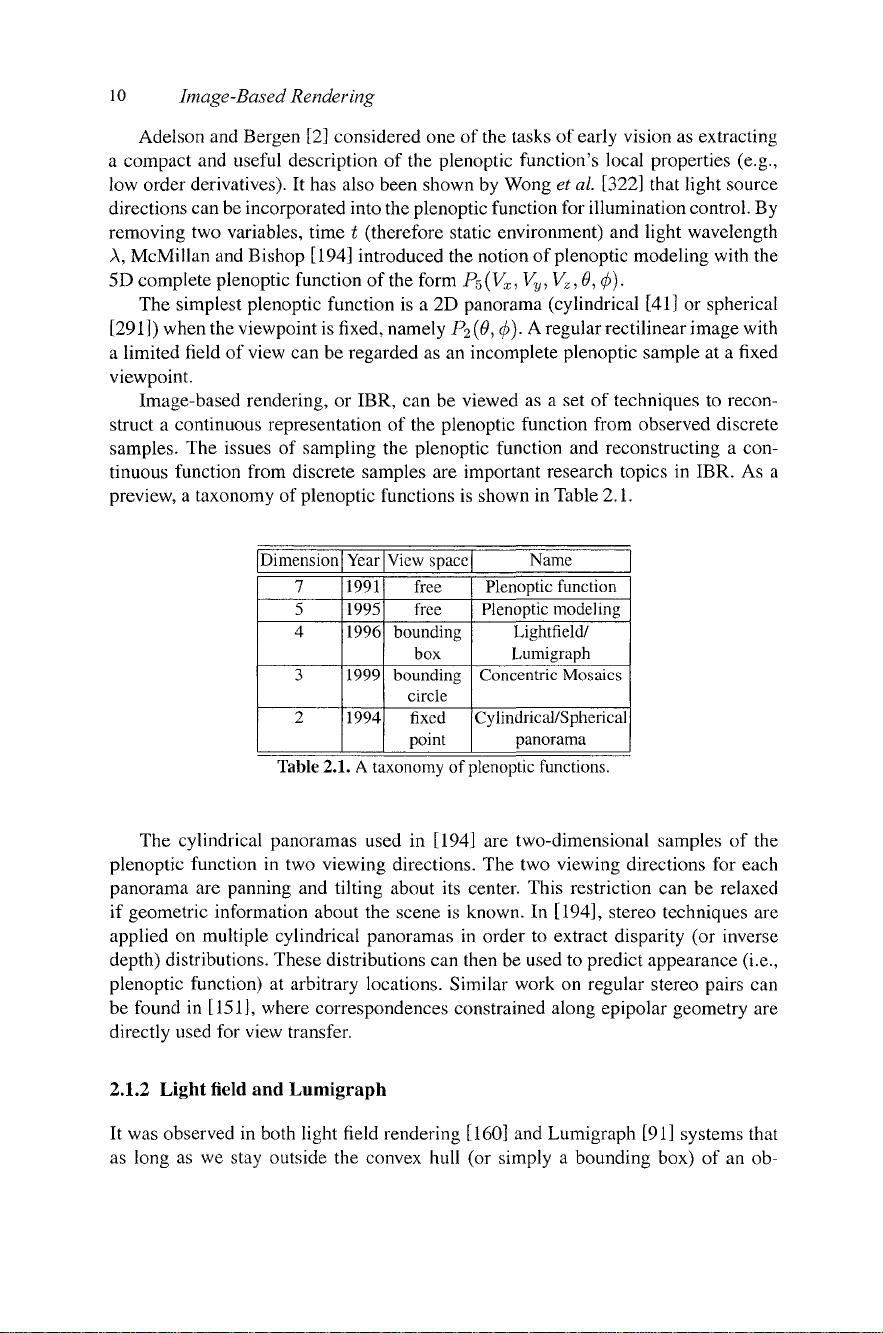

Image-based rendering, or IBR, can be viewed as a set of techniques to reconstruct a continuous representation of the plenoptic function from observed discrete samples. The issues of sampling the plenoptic function and reconstructing a continuous function from discrete samples are important research topics in IBR. As a preview, a taxonomy of plenoptic functions is shown in Table 2.1.

| Dimension | Year | View space | Name |
|---|---|---|---|
| 7 | 1991 | free | Plenoptic function |
| 5 | 1995 | free | Plenoptic modeling |
| 4 | 1996 | bounding box | Lightfield/Lumigraph |
| 3 | 1999 | bounding circle | Concentric Mosaics |
| 2 | 1994 | fixed point | Cylindrical/Spherical panorama |

Table 2.1. A taxonomy of plenoptic functions.

The cylindrical panoramas used in [194] are 2D samples of the plenoptic function parameterized by two viewing directions: panning and tilting about the panorama's center. This fixed-viewpoint restriction can be relaxed if geometric information about the scene is known. In [194], stereo techniques are applied to multiple cylindrical panoramas in order to extract disparity (or inverse depth) distributions. These distributions can then be used to predict appearance (i.e., the plenoptic function) at arbitrary locations. Similar work on regular stereo pairs can be found in [151], where correspondences constrained by the epipolar geometry are directly used for view transfer.
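The step "use inverse depth to predict appearance elsewhere" can be illustrated with a simple forward mapping. The sketch below is not McMillan and Bishop's cylindrical epipolar formulation; it assumes the inverse depth of a sample is already known (measured as distance along the viewing ray), uses a z-up world frame, and computes the direction under which a new viewpoint sees the same scene point. `direction` and `reproject` are hypothetical names.

```python
import math

def direction(theta, phi):
    """Unit viewing direction for pan theta and tilt phi (z axis points up)."""
    return (math.cos(phi) * math.cos(theta),
            math.cos(phi) * math.sin(theta),
            math.sin(phi))

def reproject(center_a, theta, phi, inv_depth, center_b):
    """Transfer one panorama sample from viewpoint center_a to center_b.

    inv_depth is 1 / (distance from center_a along the viewing ray), the
    disparity-like quantity a stereo step would estimate. Returns the
    (theta, phi) under which center_b sees the same scene point, plus the
    new distance (useful for resolving occlusions).
    """
    if inv_depth <= 0.0:
        raise ValueError("inverse depth must be positive")
    r = 1.0 / inv_depth
    point = [c + r * d for c, d in zip(center_a, direction(theta, phi))]
    dx, dy, dz = (p - c for p, c in zip(point, center_b))
    theta_b = math.atan2(dy, dx)
    phi_b = math.atan2(dz, math.hypot(dx, dy))
    return theta_b, phi_b, math.sqrt(dx * dx + dy * dy + dz * dz)
```

A complete view predictor would apply this to every sample, keep the nearest point per target pixel (using the returned distance), and fill the holes left by disocclusions.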

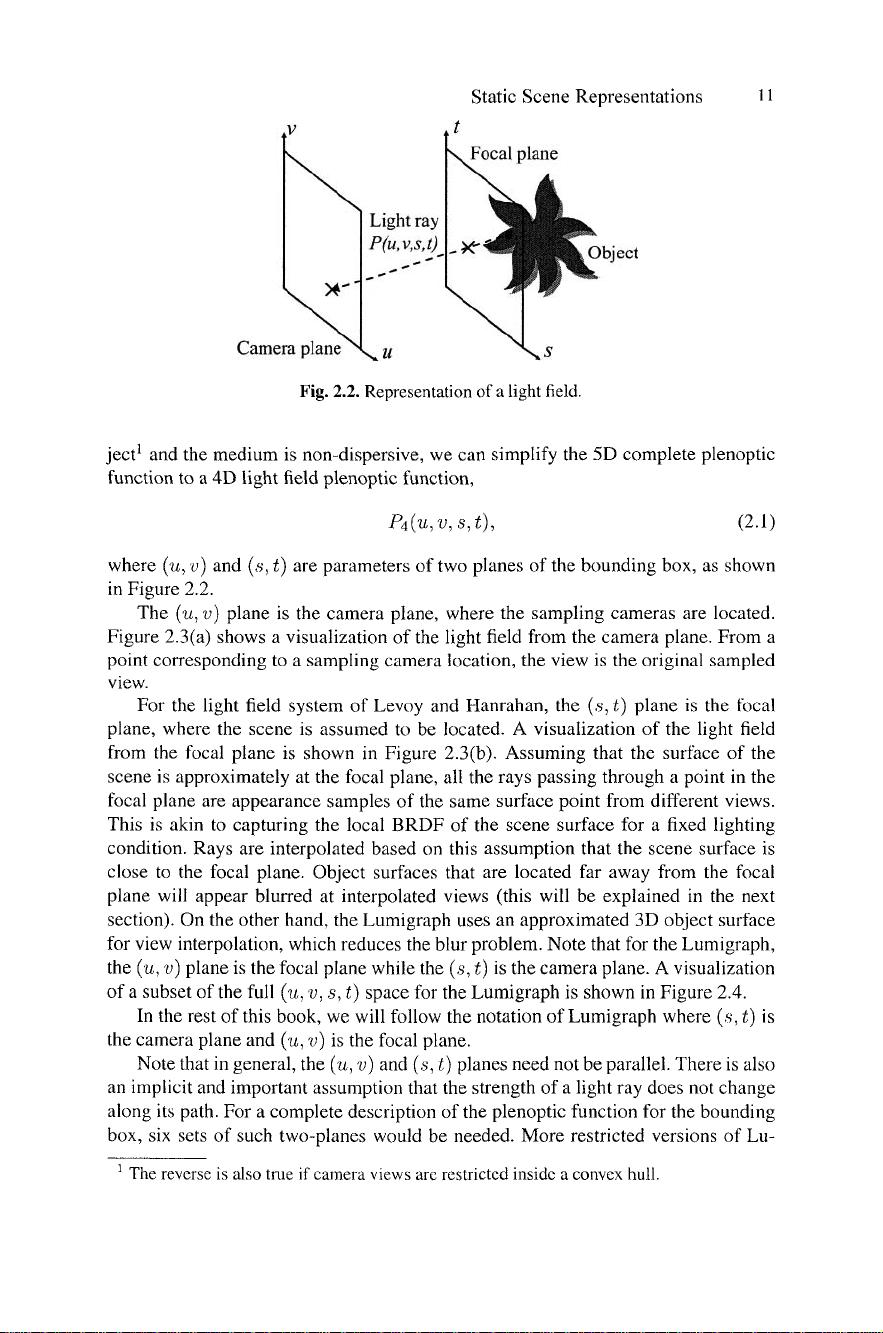

2.1.2 Light field and Lumigraph

It was observed in both light field rendering [160] and Lumigraph [91] systems that as long as we stay outside the convex hull (or simply a bounding box) of an object and the medium is non-dispersive, we can simplify the 5D complete plenoptic function to a 4D light field plenoptic function,

$$P_4(u, v, s, t), \tag{2.1}$$

where (u, v) and (s, t) are parameters of two planes of the bounding box, as shown in Figure 2.2.

[Figure 2.2: an object between the camera plane and the focal plane; a light ray crossing both planes is labeled P(u, v, s, t).]

Fig. 2.2. Representation of a light field.
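The two-plane parameterization of Eq. (2.1) can be sketched as a pair of conversions between an ordinary ray (origin plus direction) and its four plane coordinates. The sketch assumes two parallel, axis-aligned planes at z = 0 and z = 1, and labels them with the Lumigraph convention this book adopts later in the section ((s, t) on the camera plane, (u, v) on the focal plane). The function names are hypothetical.

```python
def ray_to_two_plane(origin, direction, st_plane_z=0.0, uv_plane_z=1.0):
    """Parameterize a ray by its intersections with two parallel planes.

    (s, t) is where the ray crosses z = st_plane_z (camera plane) and
    (u, v) where it crosses z = uv_plane_z (focal plane). Returns (u, v, s, t).
    """
    ox, oy, oz = origin
    dx, dy, dz = direction
    if abs(dz) < 1e-12:
        raise ValueError("ray is parallel to the planes; not representable")
    a = (st_plane_z - oz) / dz        # ray parameter at the camera plane
    b = (uv_plane_z - oz) / dz        # ray parameter at the focal plane
    return ox + b * dx, oy + b * dy, ox + a * dx, oy + a * dy

def two_plane_to_ray(u, v, s, t, st_plane_z=0.0, uv_plane_z=1.0):
    """Inverse mapping: origin on the camera plane, direction toward (u, v)."""
    origin = (s, t, st_plane_z)
    direction = (u - s, v - t, uv_plane_z - st_plane_z)
    return origin, direction
```

Rays parallel to the planes have no (u, v, s, t) coordinates at all, which illustrates why a single plane pair cannot cover every ray leaving a bounding box.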

The (u, v) plane is the camera plane, where the sampling cameras are located. Figure 2.3(a) shows a visualization of the light field from the camera plane. Viewed from a point that coincides with a sampling camera location, the light field simply reproduces the original sampled view.

For the light field system of Levoy and Hanrahan, the (s, t) plane is the focal plane, where the scene is assumed to be located. A visualization of the light field from the focal plane is shown in Figure 2.3(b). Assuming that the scene surface lies approximately on the focal plane, all the rays passing through a point in the focal plane are appearance samples of the same surface point from different views. This is akin to capturing the local BRDF of the scene surface under a fixed lighting condition. Rays are interpolated under this assumption that the scene surface is close to the focal plane, so object surfaces located far from the focal plane appear blurred in interpolated views (this is explained in the next section). The Lumigraph, on the other hand, uses an approximate 3D object surface for view interpolation, which reduces the blur. Note that for the Lumigraph, the (u, v) plane is the focal plane while the (s, t) plane is the camera plane. A visualization of a subset of the full (u, v, s, t) space for the Lumigraph is shown in Figure 2.4.
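The geometric idea behind using an approximate surface can be shown for a single ray. This is a minimal sketch, not the Lumigraph system's implementation: it assumes the camera plane is z = 0, the focal plane is z = 1, and the surface hit by the desired ray lies at a known depth `z_surf`. It returns the focal-plane coordinates a neighbouring camera must use so that it looks at the same surface point. `depth_corrected_uv` is a hypothetical name.

```python
def depth_corrected_uv(s, t, u, v, s_cam, t_cam, z_surf):
    """Focal-plane coordinates to look up in a neighbouring camera.

    The desired ray passes through (s, t) on the camera plane (z = 0) and
    (u, v) on the focal plane (z = 1). If the scene surface actually lies
    at depth z_surf, the neighbouring camera at (s_cam, t_cam) sees the
    same surface point through the returned (u', v') instead of (u, v).
    """
    if z_surf <= 0.0:
        raise ValueError("surface must lie in front of the camera plane")
    # Point where the desired ray meets the (approximate) surface.
    x = s + (u - s) * z_surf
    y = t + (v - t) * z_surf
    # Ray from the neighbouring camera to that point, intersected with z = 1.
    u_c = s_cam + (x - s_cam) / z_surf
    v_c = t_cam + (y - t_cam) / z_surf
    return u_c, v_c
```

When `z_surf` equals 1 (the surface lies on the focal plane) the correction vanishes and the lookup falls back to the plain light field assumption; the farther the surface is from that plane, the larger the shift, which is exactly the case where uncorrected interpolation blurs.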

In the rest of this book, we will follow the notation of the Lumigraph, where (s, t) is the camera plane and (u, v) is the focal plane.
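With this notation fixed, rendering a new ray from a discretely sampled light field amounts to interpolating in all four coordinates. The sketch below uses quadrilinear interpolation, a common reconstruction filter for two-plane light fields; it assumes samples on an integer grid stored as nested lists `lf[s][t][u][v]` holding scalar radiance, and it implicitly makes the focal-plane assumption described above (no depth correction). `quadrilinear` is a hypothetical name.

```python
import itertools
import math

def quadrilinear(lf, s, t, u, v):
    """Quadrilinear interpolation of a two-plane light field.

    lf[i][j][k][l] is the radiance sample for camera grid point (s, t) = (i, j)
    and focal-plane grid point (u, v) = (k, l); grid spacing is assumed to be 1.
    Query coordinates outside the sampled range are clamped to its border.
    """
    dims = (len(lf), len(lf[0]), len(lf[0][0]), len(lf[0][0][0]))
    base, frac = [], []
    for x, n in zip((s, t, u, v), dims):
        x = min(max(x, 0.0), n - 1.0)               # clamp to the sampled range
        i = min(int(math.floor(x)), max(n - 2, 0))  # lower grid index
        base.append(i)
        frac.append(x - i)                          # fractional offset in [0, 1]
    total = 0.0
    # Blend the 16 surrounding samples: 4 neighbouring cameras in (s, t),
    # each contributing a bilinear lookup in (u, v).
    for corner in itertools.product((0, 1), repeat=4):
        w = 1.0
        idx = []
        for c, i, f, n in zip(corner, base, frac, dims):
            w *= f if c else 1.0 - f
            idx.append(min(i + c, n - 1))
        if w > 0.0:
            total += w * lf[idx[0]][idx[1]][idx[2]][idx[3]]
    return total
```

Because neighbouring cameras are blended at the same (u, v), a surface far from the focal plane contributes different surface points from each camera, which produces the blur discussed above.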

Note that, in general, the (u, v) and (s, t) planes need not be parallel. There is also an implicit and important assumption that the strength (radiance) of a light ray does not change along its path. For a complete description of the plenoptic function for the bounding box, six such plane pairs would be needed. More restricted versions of Lu-

¹ The reverse is also true if camera views are restricted inside a convex hull.
