Transfer Learning with Convolutional Neural Networks in PyTorch

How to use a pre-trained convolutional neural network for object recognition with PyTorch

Although Keras is a great library with a simple API for building neural networks, the recent excitement about PyTorch finally got me interested in exploring this library. While I’m not one to blindly follow the hype, the adoption by researchers and inclusion in the fast.ai library convinced me there must be something behind this new entry in deep learning.

Will Koehrsen

Nov 27, 2018 · 15 min read

Since the best way to learn a new technology is by using it to solve a problem, my efforts to learn PyTorch started out with a simple project: use a pre-trained convolutional neural network for an object recognition task. In this article, we’ll see how to use PyTorch to accomplish this goal, learning a little along the way about the library and about the important concept of transfer learning.

While PyTorch might not be for everyone, at this point it’s impossible to say which deep learning library will come out on top, and being able to quickly learn and use different tools is crucial to succeed as a data scientist.

The complete code for this project is available as a Jupyter Notebook on GitHub. This project was born out of my participation in the Udacity PyTorch scholarship challenge.

. . .

Approach to Transfer Learning

Our task will be to train a convolutional neural network (CNN) that can identify objects in images. We’ll be using the Caltech 101 dataset, which has images in 101 categories. Most categories only have about 50 images, which typically isn’t enough for a neural network to learn to high accuracy. Therefore, instead of building and training a CNN from scratch, we’ll use a pre-built and pre-trained model and apply transfer learning.

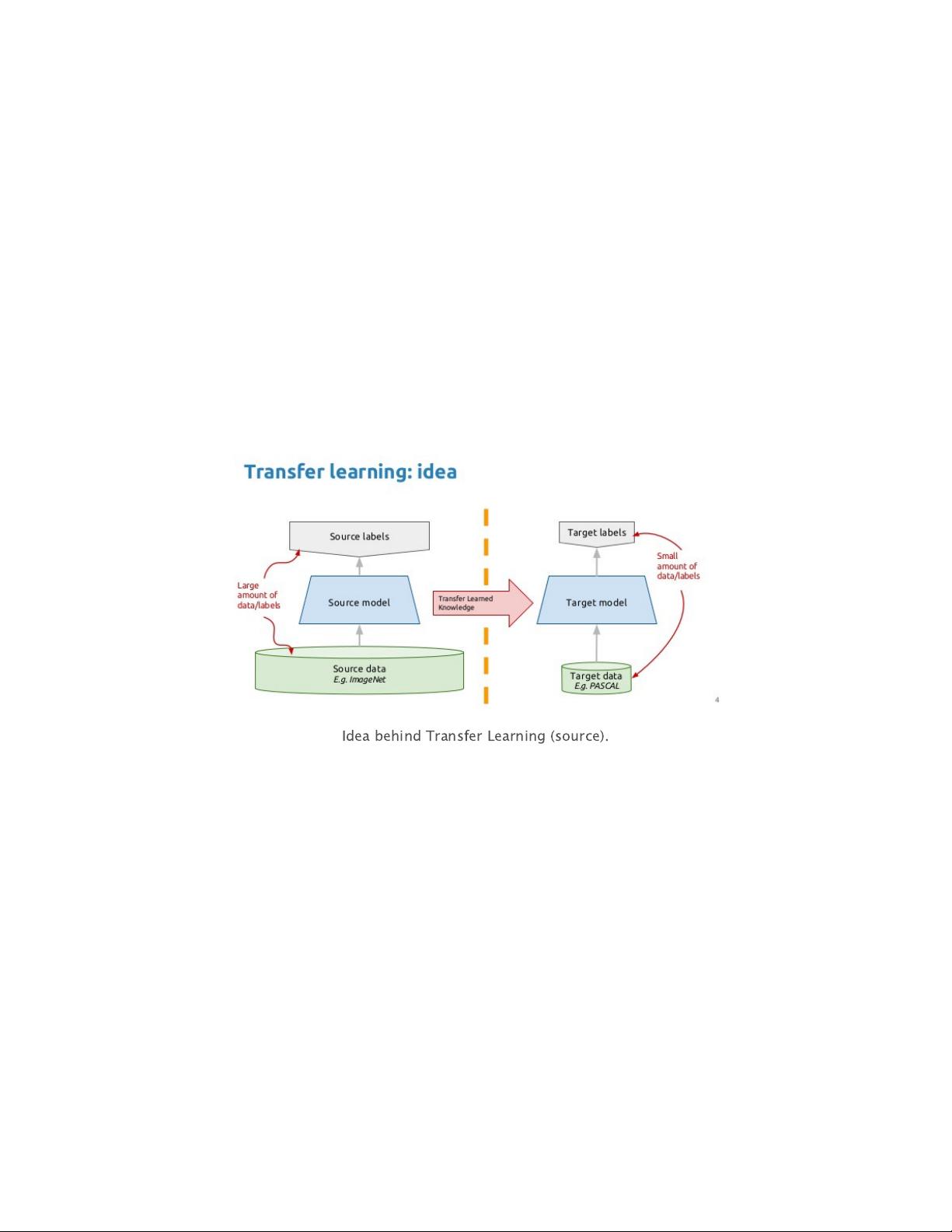

The basic premise of transfer learning is simple: take a model trained on a large dataset and transfer its knowledge to a smaller dataset. For object recognition with a CNN, we freeze the early convolutional layers of the network and only train the last few layers, which make a prediction. The idea is that the convolutional layers extract general, low-level features that are applicable across images (such as edges, patterns, and gradients), while the later layers identify specific features within an image, such as eyes or wheels.

Thus, we can use a network trained on unrelated categories in a massive dataset (usually ImageNet) and apply it to our own problem because there are universal, low-level features shared between images. The images in the Caltech 101 dataset are very similar to those in the ImageNet dataset, so the knowledge a model learns on ImageNet should easily transfer to this task.

Following is the general outline for transfer learning for object recognition:

1. Load in a pre-trained CNN model trained on a large dataset
2. Freeze parameters (weights) in the model’s lower convolutional layers
3. Add a custom classifier with several layers of trainable parameters to the model
4. Train the classifier layers on the training data available for the task
5. Fine-tune hyperparameters and unfreeze more layers as needed
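
To make the outline concrete, here is a minimal sketch of steps 2 through 4. It assumes a small hypothetical network, `TinyCNN`, standing in for a real pre-trained model, so that nothing needs to be downloaded. The helper `prepare_for_transfer`, the layer sizes, the dropout rate, and the learning rate are all illustrative choices, not values taken from this project.

```python
import torch
from torch import nn


class TinyCNN(nn.Module):
    """Hypothetical stand-in for a pre-trained CNN (assumption, not a real model)."""

    def __init__(self, n_features=32, n_outputs=1000):
        super().__init__()
        # Convolutional "feature extractor" part of the network
        self.features = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),
            nn.Conv2d(16, n_features, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),
        )
        # Original classification head (e.g. 1000 ImageNet classes)
        self.classifier = nn.Linear(n_features, n_outputs)

    def forward(self, x):
        x = self.features(x).flatten(1)
        return self.classifier(x)


def prepare_for_transfer(model, n_features, n_classes):
    # Step 2: freeze every existing parameter
    for param in model.parameters():
        param.requires_grad = False
    # Step 3: swap in a new classifier; new layers are trainable by default
    model.classifier = nn.Sequential(
        nn.Linear(n_features, 256),
        nn.ReLU(),
        nn.Dropout(0.4),
        nn.Linear(256, n_classes),
    )
    return model


model = prepare_for_transfer(TinyCNN(), n_features=32, n_classes=101)

# Step 4: hand only the trainable parameters to the optimizer
trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.Adam(trainable, lr=1e-3)

# One illustrative training step on random data in place of real images
images = torch.randn(4, 3, 224, 224)
labels = torch.randint(0, 101, (4,))
loss = nn.functional.cross_entropy(model(images), labels)
optimizer.zero_grad()
loss.backward()
optimizer.step()
```

With a real pre-trained model the same pattern should apply: set `requires_grad = False` on the existing parameters, replace the final classification layers, and optimize only what remains trainable.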

Idea behind Transfer Learning (source).

This approach has proven successful for a wide range of domains. It’s a great tool to have in your arsenal and generally the first approach that should be tried when confronted with a new image recognition problem.

. . .

Data Set Up

With all data science problems, formatting the data correctly will determine the success or failure of the project. Fortunately, the Caltech 101 dataset images are clean and stored in the correct format. If we correctly set up the data directories, PyTorch makes it simple to associate the correct labels with each class. I separated the data into training, validation, and testing sets with a 50%, 25%, 25% split and then structured the directories as follows:

    /datadir
        /train
            /class1
            /class2
            .
            .
        /valid
            /class1
            /class2
            .
            .
        /test
            /class1
            /class2
            .
            .
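
As a rough sketch of how such a layout could be produced, the function below copies images from a source folder containing one sub-folder per class into `train`, `valid`, and `test` directories. The name `split_dataset`, its parameters, and the fixed random seed are assumptions made for illustration; the article does not show how the split was actually performed.

```python
import random
import shutil
from pathlib import Path


def split_dataset(source_dir, datadir, fractions=(0.5, 0.25, 0.25), seed=0):
    """Copy source_dir/<class>/<image> into datadir/{train,valid,test}/<class>/.

    The first two fractions size the train and valid splits; the test split
    receives whatever remains, so rounding never drops an image.
    """
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    split_names = ("train", "valid", "test")

    for class_dir in sorted(Path(source_dir).iterdir()):
        if not class_dir.is_dir():
            continue
        images = sorted(p for p in class_dir.iterdir() if p.is_file())
        rng.shuffle(images)

        n_train = int(len(images) * fractions[0])
        n_valid = int(len(images) * fractions[1])
        groups = (
            images[:n_train],
            images[n_train:n_train + n_valid],
            images[n_train + n_valid:],
        )

        # Mirror the class folder inside each split directory
        for split, files in zip(split_names, groups):
            target = Path(datadir) / split / class_dir.name
            target.mkdir(parents=True, exist_ok=True)
            for image in files:
                shutil.copy2(image, target / image.name)
```

A directory tree with one folder per class is the layout that torchvision's `datasets.ImageFolder` reads, which is how the folder names end up as class labels.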

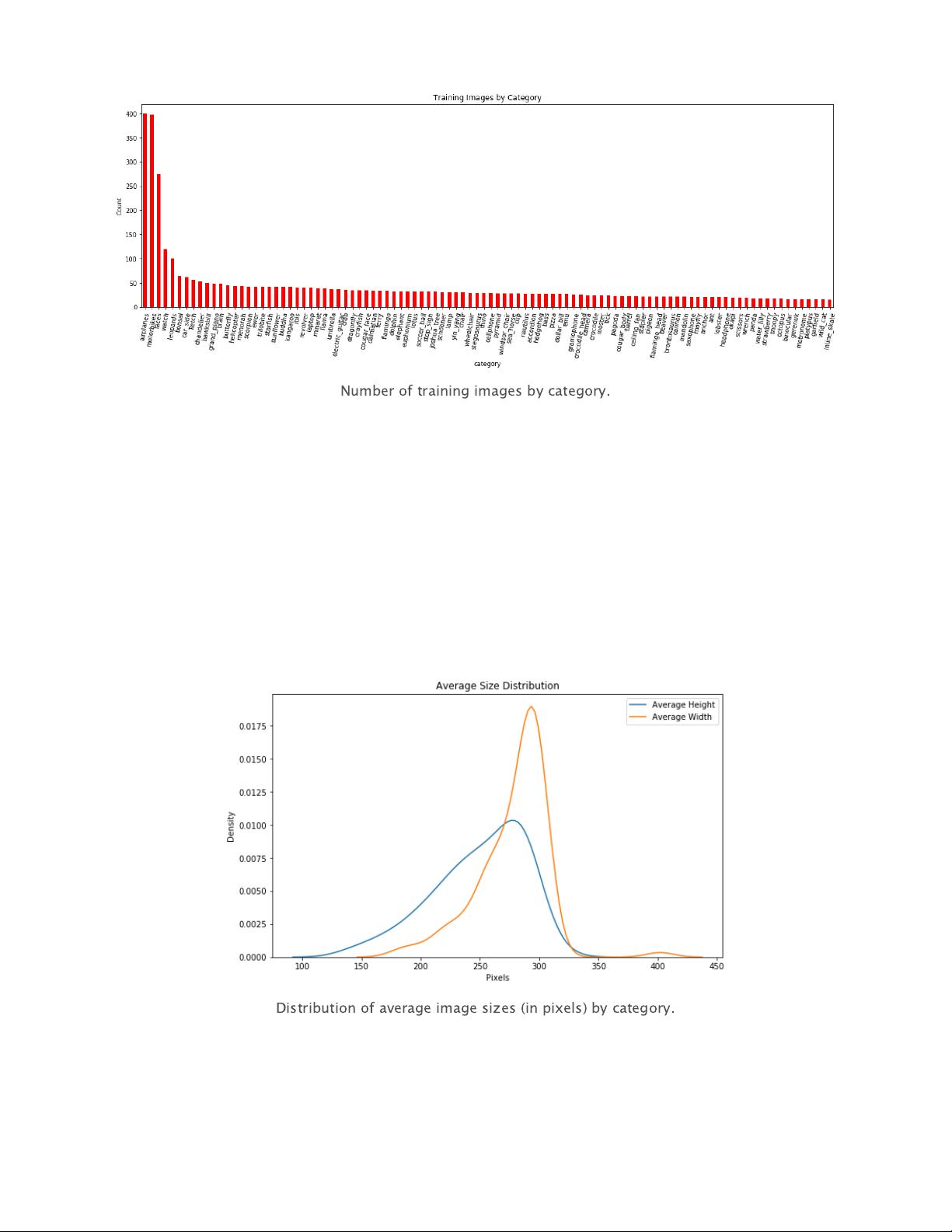

The number of training images by class is below (I use the terms classes and categories interchangeably):

We expect the model to do better on classes with more examples because it can better learn to map features to labels. To deal with the limited number of training examples, we’ll use data augmentation during training (more later).
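
For reference, per-class counts like these can be gathered with a few lines of standard-library Python. This is only a sketch: `count_images_per_class` is a hypothetical helper, and it assumes every file inside a class folder is an image.

```python
from pathlib import Path


def count_images_per_class(split_dir):
    """Return {class_name: number_of_files} for a directory of class folders."""
    counts = {
        class_dir.name: sum(1 for p in class_dir.iterdir() if p.is_file())
        for class_dir in Path(split_dir).iterdir()
        if class_dir.is_dir()
    }
    # Sort from fewest to most images so the rarest classes are listed first
    return dict(sorted(counts.items(), key=lambda item: item[1]))
```

Pointing it at the training directory shows which classes have the fewest examples, and therefore where we might expect the model to struggle.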

As another bit of data exploration, we can also look at the size distribution.

ImageNet models need an input size of 224 x 224, so one of the preprocessing steps will be to resize the images. Preprocessing is also where we will implement data augmentation for our training data.

Number of training images by category.

Distribution of average image sizes (in pixels) by category.
