Enhanced Deep Residual Networks for Single Image Super-Resolution

Bee Lim Sanghyun Son Heewon Kim Seungjun Nah Kyoung Mu Lee

Department of ECE, ASRI, Seoul National University, 08826, Seoul, Korea

forestrainee@gmail.com, thstkdgus35@snu.ac.kr, ghimhw@gmail.com

seungjun.nah@gmail.com, kyoungmu@snu.ac.kr

Abstract

Recent research on super-resolution has progressed with the development of deep convolutional neural networks (DCNN). In particular, residual learning techniques exhibit improved performance. In this paper, we develop an enhanced deep super-resolution network (EDSR) with performance exceeding that of current state-of-the-art SR methods. The significant performance improvement of our model comes from optimizing the architecture by removing unnecessary modules from conventional residual networks. The performance is further improved by expanding the model size while stabilizing the training procedure. We also propose a new multi-scale deep super-resolution system (MDSR) and training method, which can reconstruct high-resolution images of different upscaling factors in a single model. The proposed methods show superior performance over the state-of-the-art methods on benchmark datasets and prove their excellence by winning the NTIRE2017 Super-Resolution Challenge [26].
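Residual learning, mentioned above, lets a block learn only a correction F(x) that is added back to its input, y = x + F(x). The toy sketch below illustrates that generic idea on a 1D signal in pure Python. Everything specific in it is an assumption: a conv-ReLU-conv body with zero padding, no normalization layer, and a hypothetical `res_scale` multiplier on the residual branch. It is not meant as the exact EDSR block.

```python
def conv1d_same(x, kernel):
    """1D cross-correlation with zero padding; output length equals input length."""
    pad = len(kernel) // 2
    out = []
    for i in range(len(x)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = i + j - pad
            if 0 <= idx < len(x):  # positions outside the signal count as zero
                acc += w * x[idx]
        out.append(acc)
    return out


def relu(x):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in x]


def residual_block(x, k1, k2, res_scale=1.0):
    """y = x + res_scale * conv(relu(conv(x))); no normalization layer in this sketch."""
    f = conv1d_same(relu(conv1d_same(x, k1)), k2)
    # Identity skip connection: the body only has to model the residual f.
    return [xi + res_scale * fi for xi, fi in zip(x, f)]
```

With all-zero kernels the residual branch outputs zeros and the block reduces to the identity, which is one reason residual blocks are easy to stack.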

1. Introduction

The image super-resolution (SR) problem, particularly single image super-resolution (SISR), has gained increasing research attention for decades. SISR aims to reconstruct a high-resolution image $I^{SR}$ from a single low-resolution image $I^{LR}$. Generally, the relationship between $I^{LR}$ and the original high-resolution image $I^{HR}$ can vary depending on the situation. Many studies assume that $I^{LR}$ is a bicubic downsampled version of $I^{HR}$, but other degrading factors such as blur, decimation, or noise can also be considered for practical applications.
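The more general degradation mentioned above (blur, then decimation, then noise) can be sketched as a small pipeline that produces $I^{LR}$ from $I^{HR}$. This is a minimal illustration under stated assumptions: images are nested lists of floats, the blur is a hypothetical 3×3 box filter with edge replication, and the noise is additive Gaussian. It is not the bicubic downsampling that many studies use.

```python
import random


def box_blur(img):
    """3x3 mean filter with edge replication (a stand-in for a generic blur kernel)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    # Clamp indices so border pixels are replicated outward.
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += img[yy][xx]
            out[y][x] = acc / 9.0
    return out


def decimate(img, scale):
    """Keep every `scale`-th pixel in each direction."""
    return [row[::scale] for row in img[::scale]]


def add_noise(img, sigma, seed=0):
    """Add i.i.d. Gaussian noise with standard deviation `sigma`."""
    rng = random.Random(seed)
    return [[v + rng.gauss(0.0, sigma) for v in row] for row in img]


def degrade(hr, scale=4, sigma=0.0, seed=0):
    """Hypothetical degradation: blur -> decimate -> (optional) noise."""
    lr = decimate(box_blur(hr), scale)
    return add_noise(lr, sigma, seed) if sigma > 0 else lr
```

Blurring before decimation limits aliasing. Swapping `box_blur` for a different kernel changes the degradation, which is why the assumed relationship between $I^{LR}$ and $I^{HR}$ matters when comparing SR methods.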

Recently, deep neural networks [11, 12, 14] provide sig-

nificantly improved performance in terms of peak signal-to-

noise ratio (PSNR) in the SR problem. However, such net-

works exhibit limitations in terms of architecture optimality.

First, the reconstruction performance of the neural network

models is sensitive to minor architectural changes. Also, the

same model achieves different levels of performance by dif-

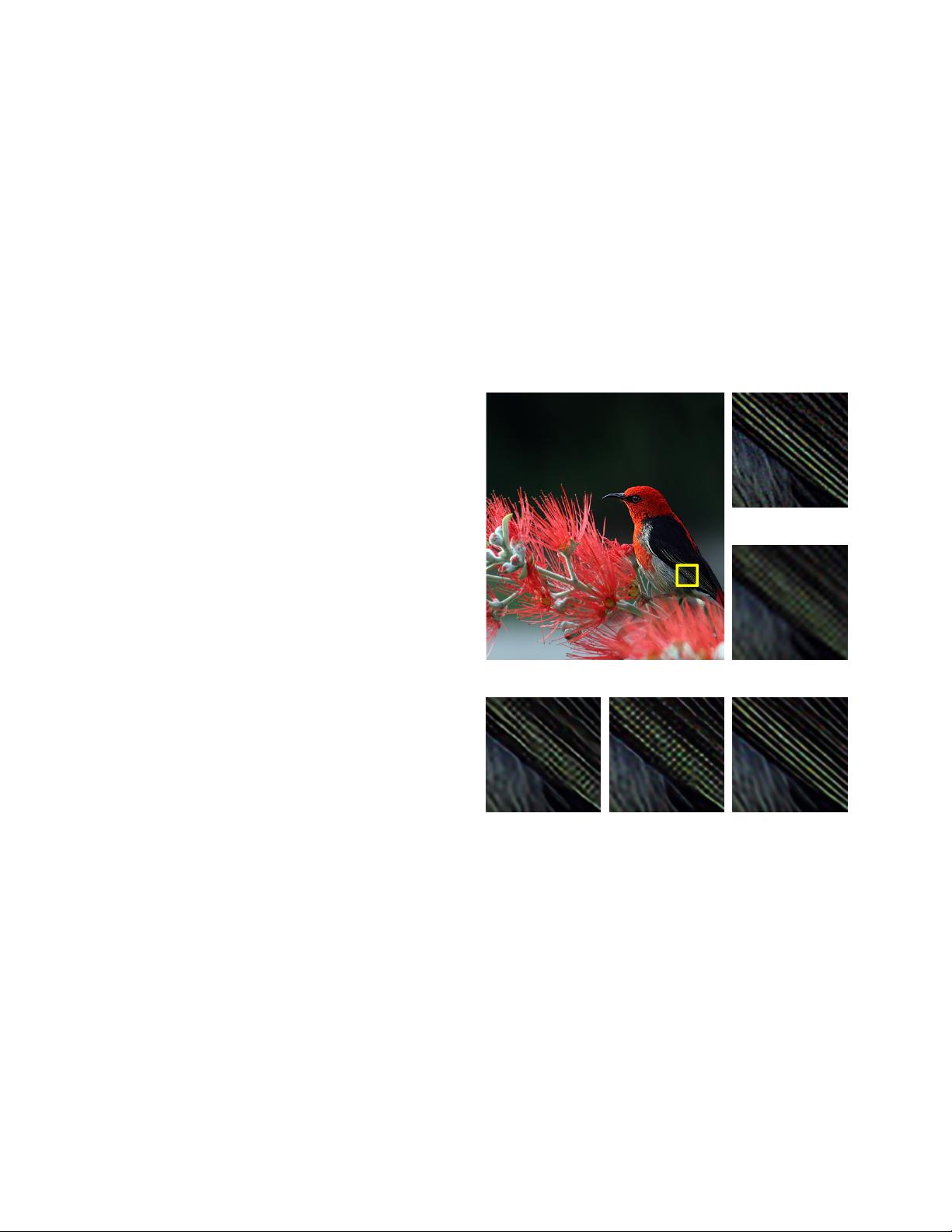

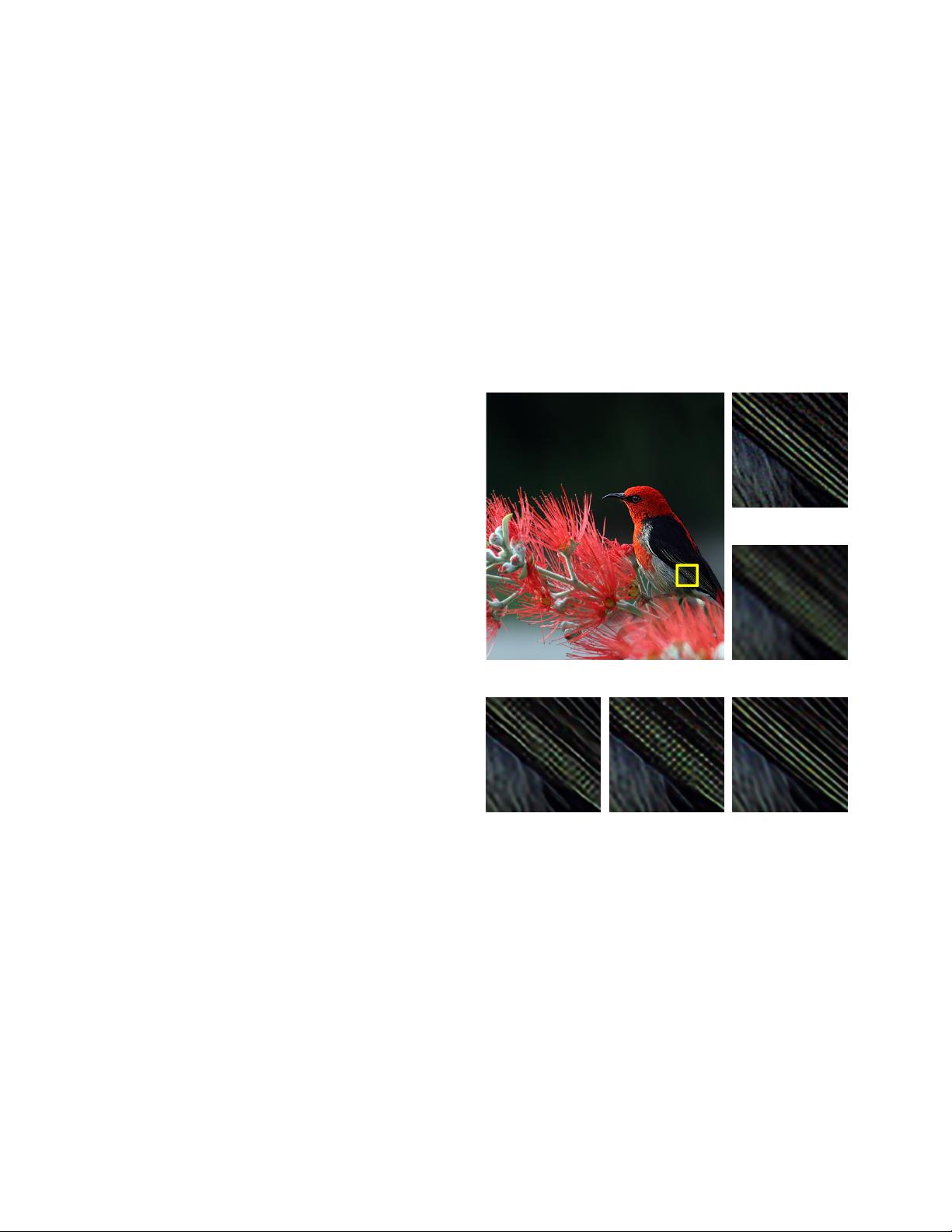

0853 from DIV2K [26]

HR

(PSNR / SSIM)

Bicubic

(30.80 dB / 0.9537)

VDSR [11]

(32.82 dB / 0.9623)

SRResNet [14]

(34.00 dB / 0.9679)

EDSR+ (Ours)

(34.78 dB / 0.9708)

Figure 1: ×4 Super-resolution result of our single-scale SR

method (EDSR) compared with existing algorithms.

ferent initialization and training techniques. Thus, carefully

designed model architecture and sophisticated optimization

methods are essential in training the neural networks.
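PSNR, the metric quoted in Figure 1, is defined as $10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$, where MSE is the mean squared error between the reference and the reconstruction. The sketch below is minimal and assumes 8-bit intensities (`max_val = 255`) with images given as equal-size nested lists.

```python
import math


def psnr(ref, test, max_val=255.0):
    """PSNR in dB between two equally sized images given as nested lists."""
    if len(ref) != len(test) or any(len(r) != len(t) for r, t in zip(ref, test)):
        raise ValueError("images must have the same shape")
    n = 0
    sq_err = 0.0
    for r_row, t_row in zip(ref, test):
        for r, t in zip(r_row, t_row):
            sq_err += (r - t) ** 2
            n += 1
    mse = sq_err / n
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_val ** 2 / mse)
```

Because PSNR is logarithmic in MSE, halving the per-pixel error lowers MSE by a factor of 4, a gain of about 6.02 dB. Benchmark protocols in SR papers often evaluate only the luminance channel and crop image borders. This sketch does neither, so its numbers are not directly comparable with published values.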

Second, most existing SR algorithms treat super-resolution of different scale factors as independent problems without considering and utilizing mutual relationships among different scales in SR. As such, those algorithms require many scale-specific networks that need to be trained independently to deal with various scales. Exceptionally,


arXiv:1707.02921v1 [cs.CV] 10 Jul 2017