F. Qi et al. / Information and Software Technology 92 (2017) 145–157 147

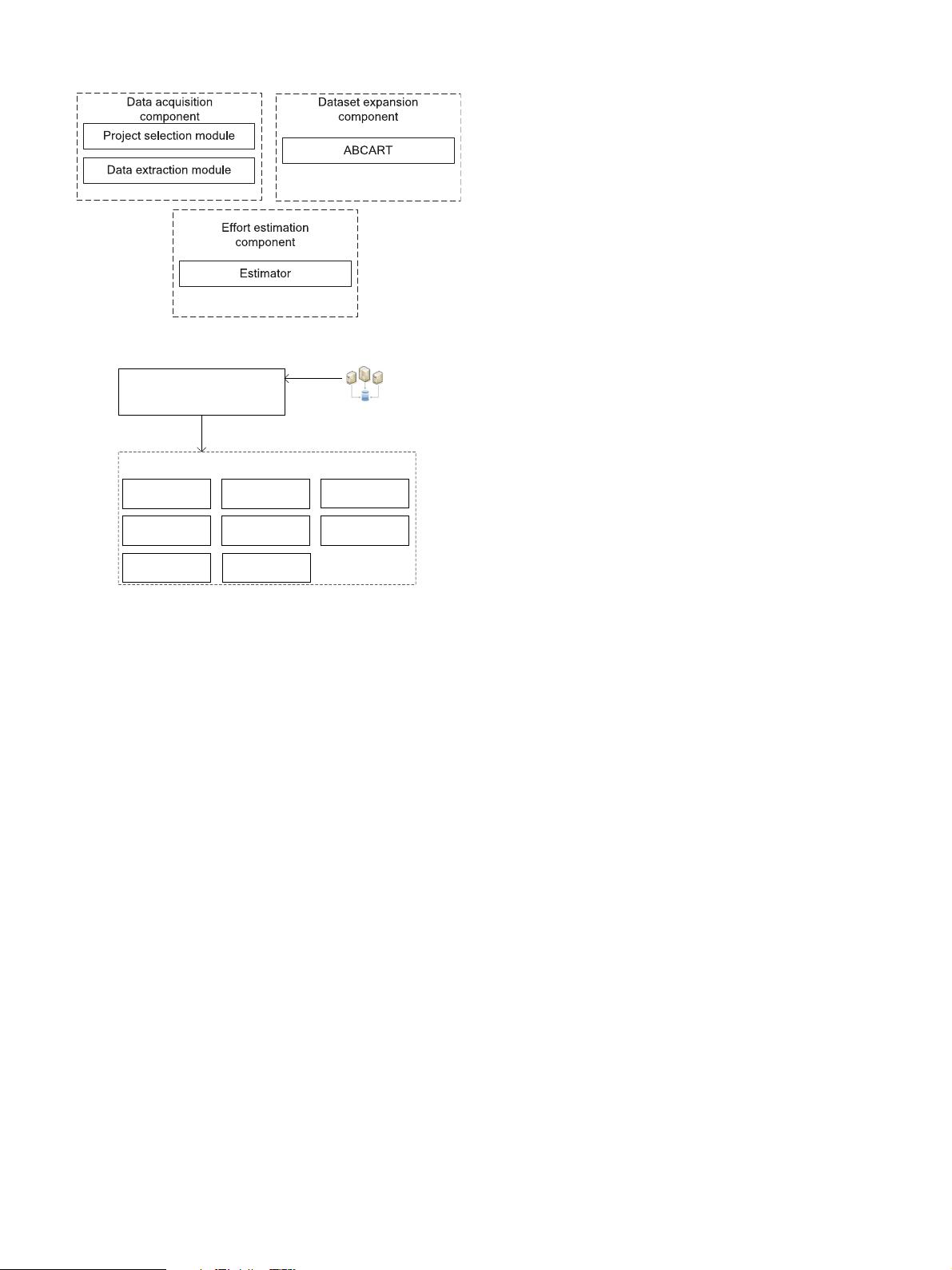

Fig. 1. Overview of our method.

http://www.github.com

Project information

selection module

Metric 1 Metric 2

Metric 4 Metric 5 Metric 6

Metric 7

Metric 3

Effort

Effort data extraction module

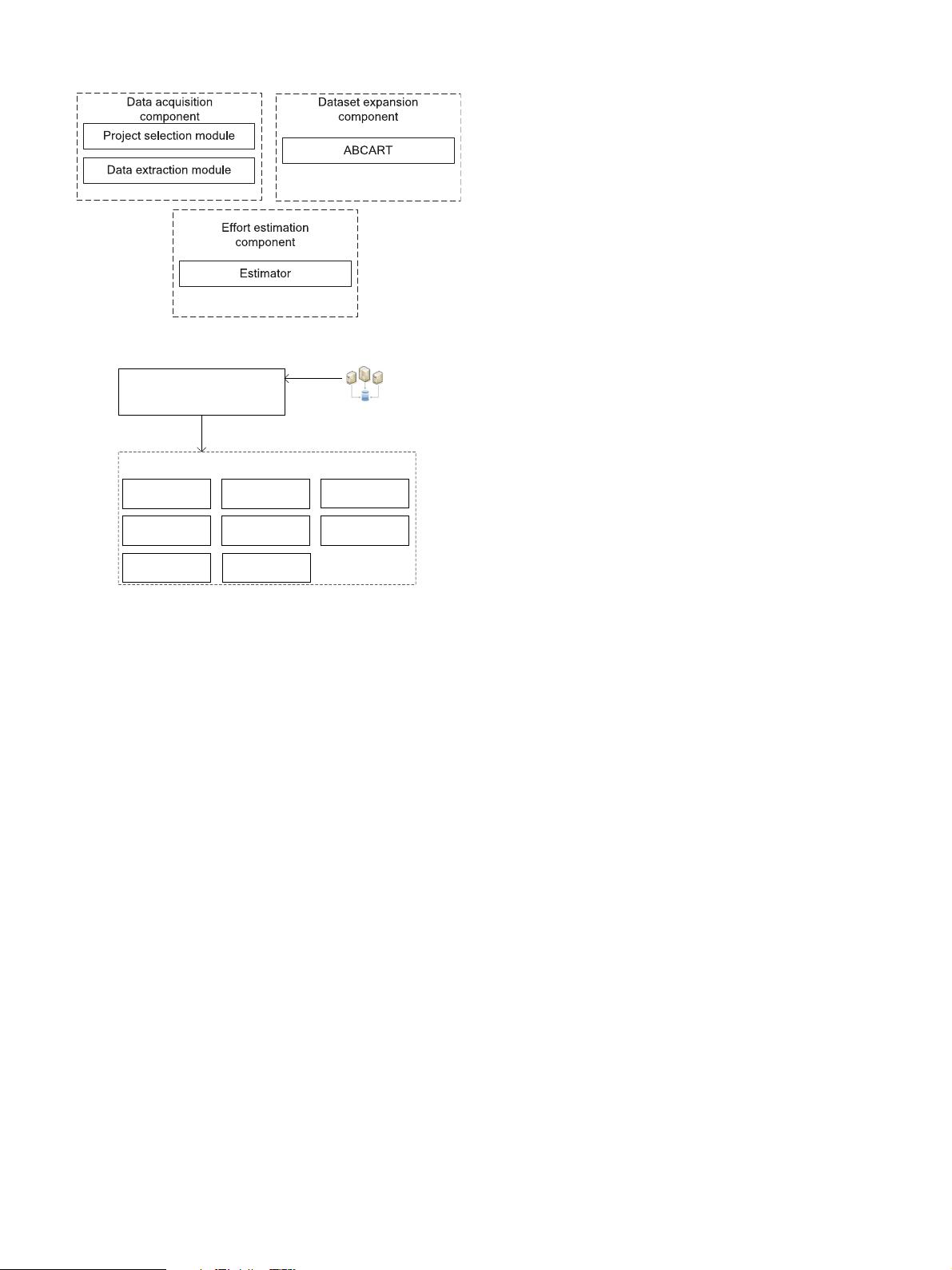

Fig. 2. Composition of the data acquisition component.

3. Our method

3.1. Overview of our method

Our proposed method consists of three components, as illustrated in Fig. 1.

Data acquisition component: This component is responsible for obtaining the data needed for effort estimation from OSPs. Its main functions include scraping projects from GitHub, filtering the collected projects, and extracting the necessary data from the filtered projects.

Dataset expansion component: To obtain more effort data for training the prediction models, we design this component to increase the number of samples in the collected dataset.

Effort estimation component: This component is responsible for training a prediction model on the collected data and using it to estimate the effort of a new project.

In this paper, we focus mainly on the data acquisition and dataset expansion components. For the effort estimation component, we use CART [43] as the estimator to predict the effort of a new project.
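To make the role of the estimator concrete, the following is a minimal, illustrative CART-style regression tree in plain Python. It assumes each project is a vector of numeric cost metrics and the target is its effort. The names `build_cart`, `predict`, `max_depth`, and `min_samples` are hypothetical and not taken from this paper. Splits are chosen to minimize the sum of squared errors, as in CART regression, but pruning is omitted, so this is a sketch rather than the estimator configuration used in our experiments.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional, Sequence


@dataclass
class Node:
    value: float                   # mean effort of the training samples in this node
    feature: Optional[int] = None  # index of the split metric (None for a leaf)
    threshold: float = 0.0         # samples with x[feature] <= threshold go left
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def _sse(ys: Sequence[float]) -> float:
    """Sum of squared errors around the mean (the CART regression impurity)."""
    m = mean(ys)
    return sum((y - m) ** 2 for y in ys)


def build_cart(X: List[List[float]], y: List[float],
               max_depth: int = 3, min_samples: int = 2) -> Node:
    """Grow a binary regression tree greedily (hypothetical helper, no pruning)."""
    node = Node(value=mean(y))
    if max_depth == 0 or len(y) < 2 * min_samples:
        return node
    best = None  # (sse_after_split, feature, threshold)
    for f in range(len(X[0])):
        values = sorted({row[f] for row in X})
        # Candidate thresholds are midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            if len(left) < min_samples or len(right) < min_samples:
                continue
            cost = _sse(left) + _sse(right)
            if best is None or cost < best[0]:
                best = (cost, f, t)
    # Stop if no admissible split reduces the squared error.
    if best is None or best[0] >= _sse(y):
        return node
    _, f, t = best
    li = [i for i, row in enumerate(X) if row[f] <= t]
    ri = [i for i, row in enumerate(X) if row[f] > t]
    node.feature, node.threshold = f, t
    node.left = build_cart([X[i] for i in li], [y[i] for i in li], max_depth - 1, min_samples)
    node.right = build_cart([X[i] for i in ri], [y[i] for i in ri], max_depth - 1, min_samples)
    return node


def predict(node: Node, x: Sequence[float]) -> float:
    """Follow the splits down to a leaf and return its mean effort."""
    while node.feature is not None:
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.value
```

For example, with one metric and toy efforts `X = [[1.0], [2.0], [3.0], [10.0], [11.0], [12.0]]` and `y = [10.0, 12.0, 11.0, 50.0, 52.0, 51.0]`, the root splits at 6.5, and `predict(tree, [2.5])` returns the left-leaf mean of 11.0.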

3.2. Data acquisition component

The data acquisition component consists of two modules: (1) the project information selection module and (2) the effort data extraction module. The composition of the data acquisition component is shown in Fig. 2.

Project information selection module: This module is responsible for scraping information about relevant projects from GitHub using user-specified keywords and for filtering the raw project information obtained.

Effort data extraction module: This module is responsible for downloading and analyzing the source code of projects from GitHub, and for extracting the effort data of each project according to our cost model.

Next, we introduce each of these modules in turn.

3.2.1. Project selection module

There are millions of open source projects stored on GitHub, which provides many APIs through which users can access project information. Suppose that we do not have enough projects to serve as references for estimating the effort required by a software system. Intuitively, we can obtain reference projects from GitHub as training data for constructing the prediction model. Since GitHub hosts over 53 million projects, it is both impossible and unnecessary to analyze all of them; therefore, the first issue to address is how to select appropriate projects from this massive pool. We developed this module, which takes the following three aspects into consideration when selecting projects.

Development time: We select projects developed between 2011 and 2016. With the progress of development languages and IDEs, as well as the growth of users' requirements, the effort required to develop a new software project may differ across periods. For example, suppose that in 2000 we wanted to use a machine learning algorithm, such as the support vector machine (SVM) [50] described by Cortes and Vapnik in 1995, to make predictions in a Java project. We might need to spend a lot of time understanding the algorithm and rewriting it in Java, which is quite time-consuming. Nowadays, however, with the availability of many machine learning libraries such as JAVA-ML [51] (which implements many commonly used machine learning algorithms and provides services through different interfaces), we can invoke the SVM algorithm directly in our program. Off-the-shelf libraries improve the development efficiency of a project and decrease the effort it requires. Therefore, it is necessary to filter out some projects according to their development time.

Team size: The team size of a project on GitHub can be estimated by the number of its contributors. Since a project developed by a team of only one or two people usually does not conform to the team configurations of most companies (e.g., the Scrum Guide recommends a development team size of between 3 and 9 [52]), we require the team size of each collected project to be larger than 3.
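The two selection criteria above (development time and team size) can be sketched as a single filter. This is a minimal illustration under stated assumptions: a repository's creation timestamp (GitHub reports it as an ISO 8601 `created_at` string) stands in for its development time, and the contributor count is assumed to have been obtained already. The `RepoInfo` type and `keep_project` function are hypothetical names, not part of our tool.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class RepoInfo:
    full_name: str     # "owner/repo"
    created_at: str    # ISO 8601 timestamp, e.g. "2014-03-07T12:00:00Z"
    contributors: int  # number of contributors, used as a proxy for team size


def keep_project(repo: RepoInfo, first_year: int = 2011,
                 last_year: int = 2016, min_team: int = 3) -> bool:
    """Return True if the project passes both selection criteria (hypothetical helper)."""
    # Assumption: the creation year approximates the project's development time.
    year = datetime.strptime(repo.created_at, "%Y-%m-%dT%H:%M:%SZ").year
    in_period = first_year <= year <= last_year
    # The text requires a team size strictly larger than 3.
    big_enough = repo.contributors > min_team
    return in_period and big_enough
```

Because the comparison is strict, a project with exactly three contributors is excluded, which follows the "larger than 3" requirement stated above.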

Business-related: To train a good prediction model, sufficient training data is required; furthermore, it is better if the collected effort data comes from projects similar to those to be estimated. Compared with CSPs, we can easily obtain more business-related effort data of OSPs from GitHub. This requirement can be implemented by using the API ‘https://api.github.com/search/repositories?q=keywords’.
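A minimal sketch of how such a keyword query might be assembled is shown below. It assumes that the 2011 to 2016 window is folded into the same request through GitHub's `created:` date-range qualifier; this combination, the `per_page` value, and the helper names `build_search_url` and `repo_names` are illustrative choices rather than details of our implementation. The network call is deliberately left out so that the example runs offline.

```python
from typing import Any, Dict, List
from urllib.parse import urlencode

SEARCH_ENDPOINT = "https://api.github.com/search/repositories"


def build_search_url(keywords: List[str], created_from: str = "2011-01-01",
                     created_to: str = "2016-12-31") -> str:
    """Build a repository search URL from user keywords (hypothetical helper)."""
    # Keywords are space-separated terms; "created:A..B" restricts the creation date.
    query = " ".join(keywords + [f"created:{created_from}..{created_to}"])
    # urlencode escapes spaces as "+" and ":" as "%3A".
    return SEARCH_ENDPOINT + "?" + urlencode({"q": query, "per_page": 100})


def repo_names(response: Dict[str, Any]) -> List[str]:
    """Extract "owner/repo" names from a decoded search response."""
    # Matching repositories are listed under the "items" key.
    return [item["full_name"] for item in response.get("items", [])]
```

In practice, the response could be fetched with `urllib.request.urlopen` and decoded with `json.load` before calling `repo_names`; unauthenticated search requests are rate-limited, so a crawler would need to throttle or authenticate its requests.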

3.2.2. Data extraction module and cost metrics

The effort required to develop a project is determined by many cost metrics, such as LOC, FP, and PLEX [16, 31, 42]. We divide commonly used cost metrics into two groups, (1) those of the function point analysis model and (2) those of COCOMO II, which are summarized in Table 1.

Albrecht [31] published a dataset composed of 24 projects completed at IBM in the 1970s. These projects were developed using third-generation languages such as COBOL and PL1. The China dataset [46] consists of software projects from different companies in China and contains 499 samples with 17 cost metrics and one effort label. The Kitchenham dataset [16] contains the effort data of 145 projects from a single outsourcing company. Chidamber and Kemerer [32] published a dataset which is constructed