
Figure 3: The training procedure of our object recognition pipeline. As positive learning examples we use the ground truth. As negatives we use examples that have a 20-50% overlap with the positive examples. We iteratively add hard negatives using a retraining phase.

by our selective search that have an overlap of 20% to 50% with a positive example. To avoid near-duplicate negative examples, a negative example is excluded if it has more than 70% overlap with another negative. To keep the number of initial negatives per class below 20,000, we randomly drop half of the negatives for the classes car, cat, dog and person. Intuitively, this set of examples can be seen as difficult negatives which are close to the positive examples. This means they are close to the decision boundary and are therefore likely to become support vectors even when the complete set of negatives would be considered. Indeed, we found that this selection of training examples gives reasonably good initial classification models.
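The selection rule for the initial negatives can be sketched as follows. This is a minimal illustration, not the authors' code: the box format `(x1, y1, x2, y2)`, the function names, and the choice to test each proposal's best overlap over all positives are assumptions made here. The thresholds (20%, 50%, 70%) come from the text.

```python
import random

def overlap(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = min(a[2], b[2]) - max(a[0], b[0])
    ih = min(a[3], b[3]) - max(a[1], b[1])
    if iw <= 0 or ih <= 0:
        return 0.0
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union

def select_initial_negatives(proposals, positives, lo=0.2, hi=0.5, dup=0.7):
    """Keep proposals whose best overlap with a positive lies in [lo, hi],
    skipping any that overlap an already kept negative by more than dup."""
    negatives = []
    for box in proposals:
        best = max((overlap(box, p) for p in positives), default=0.0)
        if not (lo <= best <= hi):
            continue  # too far from, or too close to, the positives
        if any(overlap(box, n) > dup for n in negatives):
            continue  # near-duplicate of a negative we already have
        negatives.append(box)
    return negatives

def drop_half(negatives, seed=0):
    """Randomly keep half of the negatives (used for very frequent classes)."""
    rng = random.Random(seed)
    return rng.sample(negatives, len(negatives) // 2)

positives = [(0, 0, 10, 10)]
proposals = [(0, 0, 10, 10), (5, 0, 15, 10), (5, 0, 15, 9), (20, 20, 30, 30)]
print(select_initial_negatives(proposals, positives))
```

Because near-duplicates are filtered in proposal order, the result depends on how the proposals are ordered; the text does not specify an order, so none is assumed here.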

Then we enter a retraining phase to iteratively add hard negative examples (e.g. [12]): We apply the learned models to the training set using the locations generated by our selective search. For each negative image we add the highest scoring location. As our initial training set already yields good models, our models converge in only two iterations.
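The retraining phase can be sketched as a short loop. Everything named below is hypothetical: `train` stands in for whatever learner is used and is assumed to return a scoring function, and locations are represented by plain feature tuples. Only the rule "add the highest scoring location per negative image" and the two iterations come from the text.

```python
def mine_hard_negatives(score, negative_images):
    """Return the highest scoring location of every negative image.

    score: callable mapping a location's features to a float.
    negative_images: dict of image id -> list of location features.
    """
    hard = []
    for locations in negative_images.values():
        if locations:  # skip images without any proposals
            hard.append(max(locations, key=score))
    return hard

def retrain(train, positives, negatives, negative_images, iterations=2):
    """Train, then repeatedly add hard negatives and train again."""
    model = train(positives, negatives)
    for _ in range(iterations):
        negatives = negatives + mine_hard_negatives(model, negative_images)
        model = train(positives, negatives)
    return model, negatives

# Toy stand-in for a learner: the "model" scores a location by its first feature.
def toy_train(positives, negatives):
    return lambda features: features[0]

negative_images = {"a": [(0.1,), (0.9,)], "b": [(0.5,)], "c": []}
model, negatives = retrain(toy_train, [(1.0,)], [(0.2,)], negative_images)
print(len(negatives))
```

With the toy learner the model never changes, so the same locations are added in both iterations; a real learner would rescore the locations after each round.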

For the test set, the final model is applied to all locations generated by our selective search. The windows are sorted by classifier score while windows which have more than 30% overlap with a higher scoring window are considered near-duplicates and are removed.
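A minimal sketch of this near-duplicate removal is given below. It assumes boxes in `(x1, y1, x2, y2)` format and uses the common greedy variant, in which a window is compared only against higher scoring windows that were themselves kept; the text does not say whether already removed windows also suppress others, so that choice is an assumption.

```python
def overlap(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = min(a[2], b[2]) - max(a[0], b[0])
    ih = min(a[3], b[3]) - max(a[1], b[1])
    if iw <= 0 or ih <= 0:
        return 0.0
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union

def remove_near_duplicates(boxes, scores, max_overlap=0.3):
    """Return indices of kept windows, ordered by decreasing score."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept = []
    for i in order:
        # Keep a window only if it overlaps no kept, higher scoring window
        # by more than max_overlap.
        if all(overlap(boxes[i], boxes[k]) <= max_overlap for k in kept):
            kept.append(i)
    return kept

boxes = [(0, 0, 10, 10), (1, 0, 11, 10), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(remove_near_duplicates(boxes, scores))
```

In this example the second window overlaps the first by about 82% and is dropped, while the third does not overlap either and is kept.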

5 Evaluation

In this section we evaluate the quality of our selective search. We divide our experiments into four parts, each spanning a separate subsection:

Diversification Strategies. We experiment with a variety of colour spaces, similarity measures, and thresholds of the initial regions, all of which were detailed in Section 3.2. We seek a trade-off between the number of generated object hypotheses, computation time, and the quality of object locations. We do this in terms of bounding boxes. This results in a selection of complementary techniques which together serve as our final selective search method.

Quality of Locations. We test the quality of the object location hypotheses resulting from the selective search.

Object Recognition. We use the locations of our selective search in the Object Recognition framework detailed in Section 4. We evaluate performance on the Pascal VOC detection challenge.

An upper bound of location quality. We investigate how well our object recognition framework performs when using an object hypothesis set of “perfect” quality. How does this compare to the locations that our selective search generates?

To evaluate the quality of our object hypotheses we define the Average Best Overlap (ABO) and Mean Average Best Overlap (MABO) scores, which slightly generalise the measure used in [9]. To calculate the Average Best Overlap for a specific class $c$, we calculate the best overlap between each ground truth annotation $g_i^c \in G^c$ and the object hypotheses $L$ generated for the corresponding image, and average:

$$\mathrm{ABO} = \frac{1}{|G^c|} \sum_{g_i^c \in G^c} \max_{l_j \in L} \mathrm{Overlap}(g_i^c, l_j). \tag{7}$$

The Overlap score is taken from [11] and measures the area of the intersection of two regions divided by the area of their union:

$$\mathrm{Overlap}(g_i^c, l_j) = \frac{\mathrm{area}(g_i^c) \cap \mathrm{area}(l_j)}{\mathrm{area}(g_i^c) \cup \mathrm{area}(l_j)}. \tag{8}$$

Analogously to Average Precision and Mean Average Precision, Mean Average Best Overlap is now defined as the mean ABO over all classes.
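Equations 7 and 8 and the MABO definition translate directly into code. The sketch below is an illustration under assumptions made here: regions are axis-aligned boxes `(x1, y1, x2, y2)`, ground truth is a list of `(image_id, box)` pairs per class, hypotheses are stored per image, and a ground truth box in an image without hypotheses scores 0. The function names are hypothetical.

```python
def overlap(g, l):
    """Equation 8 for axis-aligned boxes (x1, y1, x2, y2)."""
    iw = min(g[2], l[2]) - max(g[0], l[0])
    ih = min(g[3], l[3]) - max(g[1], l[1])
    if iw <= 0 or ih <= 0:
        return 0.0
    inter = iw * ih
    union = (g[2] - g[0]) * (g[3] - g[1]) + (l[2] - l[0]) * (l[3] - l[1]) - inter
    return inter / union

def average_best_overlap(ground_truth, hypotheses):
    """Equation 7: mean over one class's annotations of the best overlap
    with any hypothesis generated for the same image."""
    best = [
        max((overlap(g, l) for l in hypotheses.get(image_id, [])), default=0.0)
        for image_id, g in ground_truth
    ]
    return sum(best) / len(best)

def mean_average_best_overlap(ground_truth_by_class, hypotheses):
    """MABO: mean of the per-class ABO scores."""
    abos = [average_best_overlap(gt, hypotheses)
            for gt in ground_truth_by_class.values()]
    return sum(abos) / len(abos)

hypotheses = {"img1": [(0, 0, 10, 10), (0, 0, 5, 10)], "img2": [(0, 0, 10, 5)]}
ground_truth_by_class = {
    "cat": [("img1", (0, 0, 10, 10))],
    "dog": [("img2", (0, 0, 10, 10)), ("img1", (0, 0, 5, 10))],
}
print(mean_average_best_overlap(ground_truth_by_class, hypotheses))
```

In this toy example the "cat" class has an ABO of 1.0 and the "dog" class an ABO of 0.75, so every class contributes equally to the MABO regardless of how many annotations it has.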

Other work often uses the recall derived from the Pascal Overlap Criterion to measure the quality of the boxes [1, 16, 34]. This criterion considers an object to be found when the Overlap of Equation 8 is larger than 0.5. However, in many of our experiments we obtain a recall between 95% and 100% for most classes, making this measure too insensitive for this paper. We do, however, report this measure when comparing with other work.
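The insensitivity of recall near saturation can be illustrated with a small sketch. The best-overlap values below are made up for illustration and are not results from the paper; the function names are hypothetical.

```python
def pascal_recall(best_overlaps, threshold=0.5):
    """Fraction of ground truth objects whose best overlap exceeds threshold."""
    found = sum(1 for o in best_overlaps if o > threshold)
    return found / len(best_overlaps)

def average_best_overlap(best_overlaps):
    """Mean of the per-object best overlaps."""
    return sum(best_overlaps) / len(best_overlaps)

# Hypothetical best overlaps for the same three objects under two methods.
loose_boxes = [0.55, 0.60, 0.52]   # barely pass the 0.5 criterion
tight_boxes = [0.90, 0.95, 0.85]   # fit the objects closely

for name, values in [("loose", loose_boxes), ("tight", tight_boxes)]:
    print(name, pascal_recall(values), round(average_best_overlap(values), 3))
```

Both sets reach a recall of 1.0, yet their Average Best Overlap differs substantially, which is why an overlap-based score remains informative once recall saturates.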

To avoid overfitting, we perform the diversification strategies experiments on the Pascal VOC 2007 TRAIN+VAL set. Other experiments are done on the Pascal VOC 2007 TEST set. Additionally, our object recognition system is benchmarked on the Pascal VOC 2010 detection challenge, using the independent evaluation server.

5.1 Diversification Strategies

In this section we evaluate a variety of strategies to obtain good quality object location hypotheses using a reasonable number of boxes computed within a reasonable amount of time.

5.1.1 Flat versus Hierarchy

In the description of our method we claim that using a full hierarchy is more natural than using multiple flat partitionings by chang-


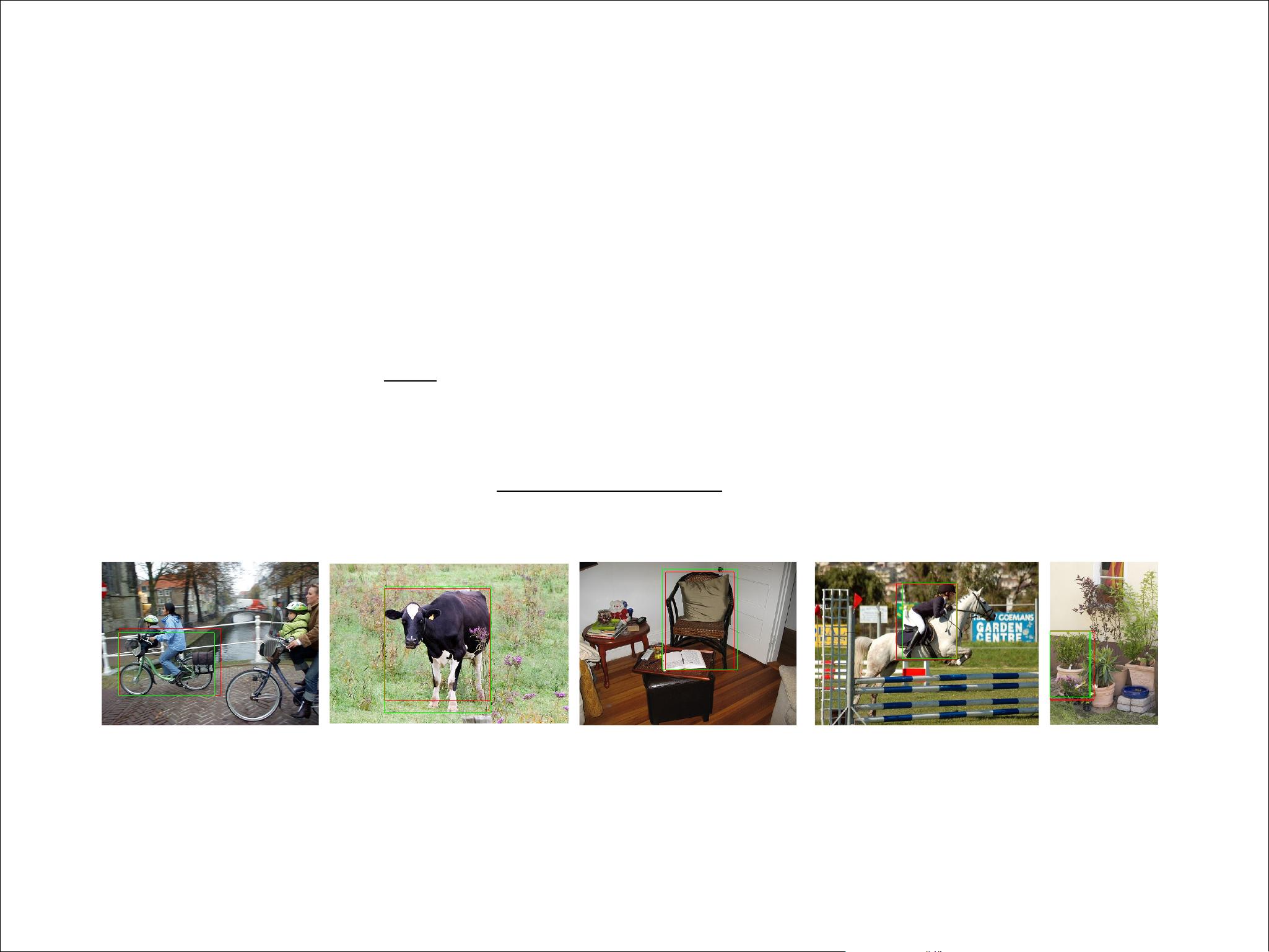

(a) Trade-off between number of object locations and the Pascal Recall criterion. Axes: Number of Object Boxes (0 to 3000) versus Recall (0.5 to 1). Methods shown: Harzallah et al., Vedaldi et al., Alexe et al., Carreira and Sminchisescu, Endres and Hoiem, Selective search Fast, Selective search Quality.

(b) Trade-off between number of object locations and the MABO score. Axes: Number of Object Boxes (0 to 3000) versus Mean Average Best Overlap (0.5 to 1). Methods shown: Alexe et al., Carreira and Sminchisescu, Endres and Hoiem, Selective search Fast, Selective search Quality.

Figure 4: Trade-off between quality and quantity of the object hypotheses in terms of bounding boxes on the Pascal 2007 TEST set. The dashed lines are for those methods whose quantity is expressed as the number of boxes per class. In terms of recall “Fast” selective search has the best trade-off. In terms of Mean Average Best Overlap the “Quality” selective search is comparable with [4, 9] yet is much faster to compute and goes on longer, resulting in a higher final MABO of 0.879.

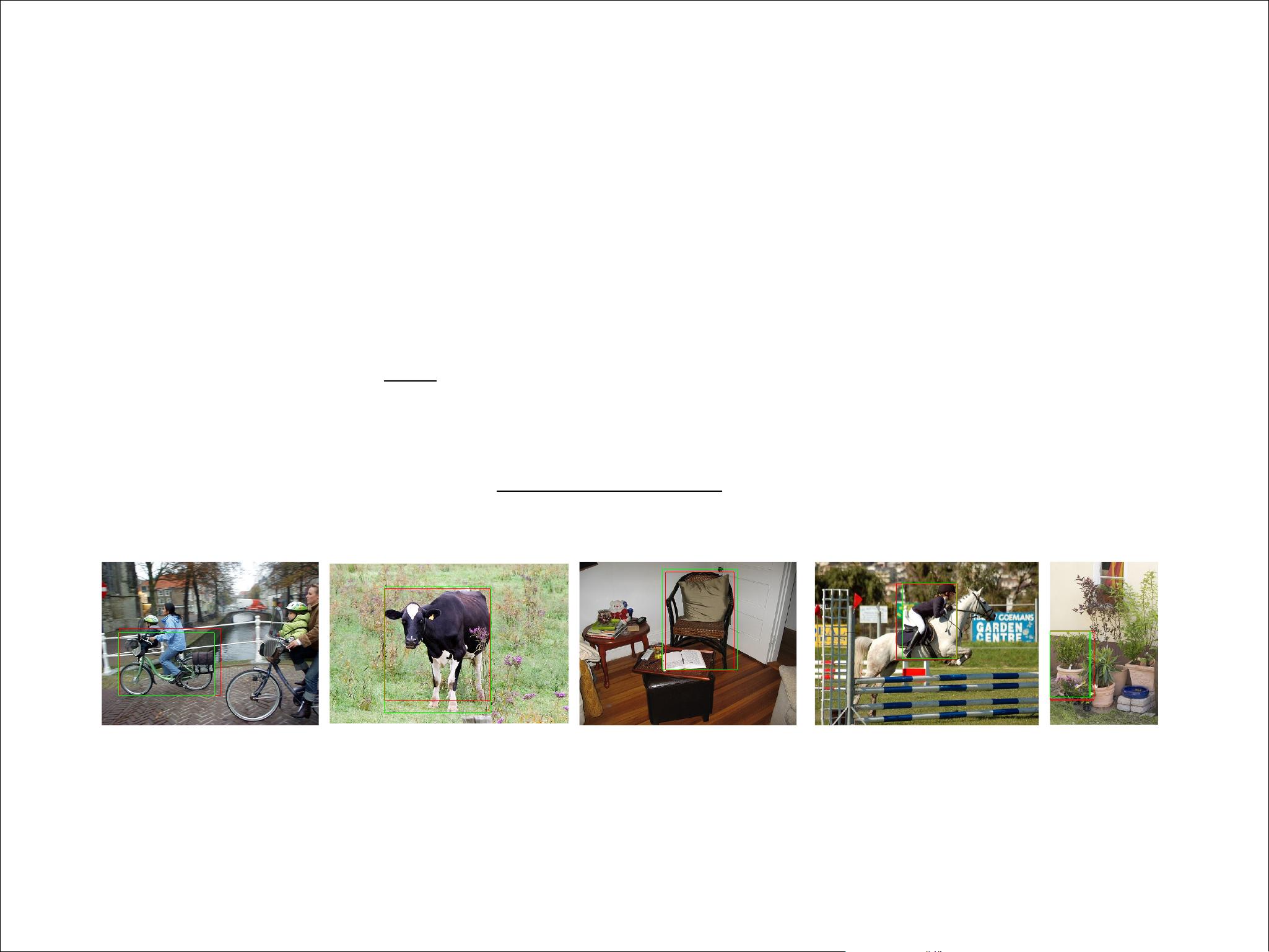

(a) Bike: 0.863 (b) Cow: 0.874 (c) Chair: 0.884 (d) Person: 0.882 (e) Plant: 0.873

Figure 5: Examples of locations for objects whose Best Overlap score is around our Mean Average Best Overlap of 0.879. The green boxes are the ground truth. The red boxes are created using the “Quality” selective search.
