network. Characteristics of each call are used to update a database of models for every

telephone number in the United States (Cortes and Pregibon, 1998). Harrison (1993)

reports that Mobil Oil aims to store over 100 terabytes of data on oil exploration. Fayyad,

Djorgovski, and Weir (1996) describe the Digital Palomar Observatory Sky Survey as

involving three terabytes of data. The ongoing Sloan Digital Sky Survey will create a raw

observational data set of 40 terabytes, eventually to be reduced to a mere 400 gigabyte

catalog containing $3 \times 10^{8}$ individual sky objects (Szalay et al., 1999). The NASA Earth

Observing System is projected to generate multiple gigabytes of raw data per hour

(Fayyad, Piatetsky-Shapiro, and Smyth, 1996). And the human genome project to

complete sequencing of the entire human genome will likely generate a data set of more

than $3.3 \times 10^{9}$ nucleotides in the process (Salzberg, 1999). With data sets of this size

come problems beyond those traditionally considered by statisticians.

Massive data sets can be tackled by sampling (if the aim is modeling, but not necessarily

if the aim is pattern detection) or by adaptive methods, or by summarizing the records in

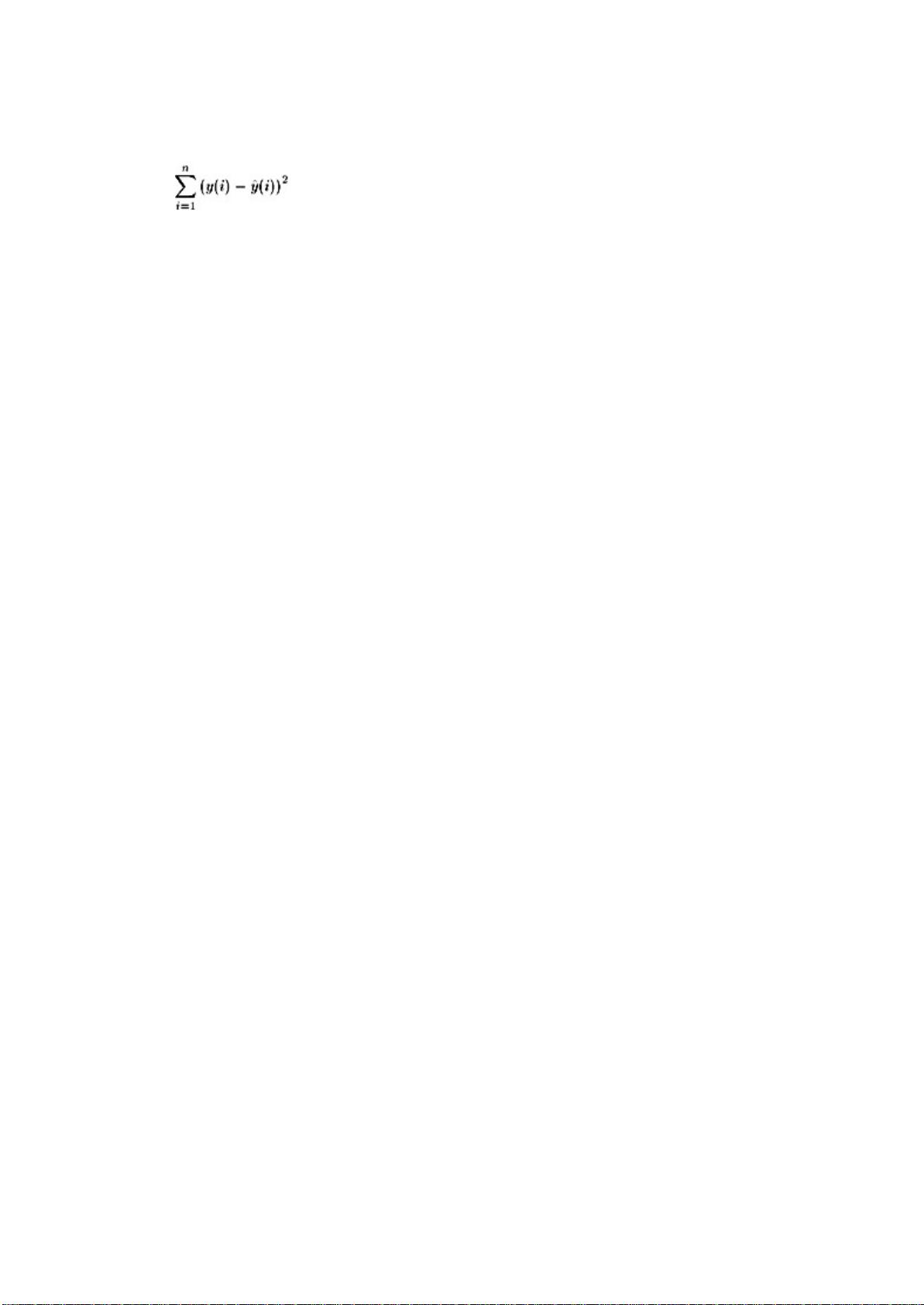

terms of sufficient statistics. For example, in standard least squares regression

problems, we can replace the large numbers of scores on each variable by their sums,

sums of squared values, and sums of products, summed over the records—these are

sufficient for regression coefficients to be calculated no matter how many records there

are. It is also important to take account of the ways in which algorithms scale, in terms of

computation time, as the number of records or variables increases. For example,

exhaustive search through all subsets of variables to find the "best" subset (according to

some score function) will be feasible only up to a point. With $p$ variables there are $2^{p} - 1$

possible subsets of variables to consider. Efficient search methods, mentioned in the

previous section, are crucial in pushing back the boundaries here.
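
The sufficient-statistics idea described above can be sketched in a few lines. This is a minimal illustration, assuming the simplest case of one predictor and one response; the class name `RegressionSums` and its methods are hypothetical and not taken from the text. It keeps only the record count and the running sums, so the records themselves never need to be held in memory, and sums computed on separate chunks of the data can be added together.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class RegressionSums:
    """Running sums that suffice to fit y = intercept + slope * x (hypothetical helper)."""
    n: int = 0        # number of records seen
    sx: float = 0.0   # sum of x
    sy: float = 0.0   # sum of y
    sxx: float = 0.0  # sum of x squared
    sxy: float = 0.0  # sum of the products x * y

    def update(self, x: float, y: float) -> None:
        """Fold one record into the sums; the record can then be discarded."""
        self.n += 1
        self.sx += x
        self.sy += y
        self.sxx += x * x
        self.sxy += x * y

    def merge(self, other: "RegressionSums") -> "RegressionSums":
        """Combine sums computed on two disjoint chunks of the data."""
        return RegressionSums(
            self.n + other.n,
            self.sx + other.sx,
            self.sy + other.sy,
            self.sxx + other.sxx,
            self.sxy + other.sxy,
        )

    def coefficients(self) -> Tuple[float, float]:
        """Return (intercept, slope) of the least squares line."""
        denom = self.n * self.sxx - self.sx ** 2
        if denom == 0:
            raise ValueError("need at least two distinct x values")
        slope = (self.n * self.sxy - self.sx * self.sy) / denom
        intercept = (self.sy - slope * self.sx) / self.n
        return intercept, slope


# Two chunks of records lying exactly on y = 2 + 3x, summarized separately.
first, second = RegressionSums(), RegressionSums()
for x in range(0, 5):
    first.update(float(x), 2.0 + 3.0 * x)
for x in range(5, 10):
    second.update(float(x), 2.0 + 3.0 * x)

intercept, slope = first.merge(second).coefficients()
print(intercept, slope)
```

The storage needed is five numbers regardless of how many records are processed. One caveat on this sketch: forming the coefficients from raw sums can lose numerical precision when the values are large relative to their spread, so a production implementation would likely use centered or otherwise stabilized updates.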

Further difficulties arise when there are many variables. One that is important in some

contexts is the curse of dimensionality: the exponential rate of growth of the number of

unit cells in a space as the number of variables increases. Consider, for example, a

single binary variable. To obtain reasonably accurate estimates of parameters within

both of its cells we might wish to have 10 observations per cell; 20 in all. With two binary

variables (and four cells) this becomes 40 observations. With 10 binary variables it

becomes 10240 observations, and with 20 variables it becomes 10485760. The curse of

dimensionality manifests itself in the difficulty of finding accurate estimates of probability

densities in high dimensional spaces without astronomically large databases (so large, in

fact, that the gigabytes available in data mining applications pale into insignificance). In

high dimensional spaces, "nearest" points may be a long way away. These are not

simply difficulties of manipulating the many variables involved, but more fundamental

problems of what can actually be done. In such situations it becomes necessary to

impose additional restrictions through one's prior choice of model (for example, by

assuming linear models).
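
The arithmetic behind the binary-variable example above is short enough to check directly. The sketch below assumes, as the text does, a fixed target of 10 observations per cell; the function name `observations_needed` is illustrative only.

```python
def observations_needed(num_binary_vars: int, per_cell: int = 10) -> int:
    """Observations required to put `per_cell` records in every cell.

    With d binary variables the space has 2**d cells, so the requirement
    doubles with each added variable.
    """
    cells = 2 ** num_binary_vars
    return per_cell * cells


for d in (1, 2, 10, 20):
    print(d, observations_needed(d))
```

For 1, 2, 10, and 20 variables this gives 20, 40, 10240, and 10485760 observations, matching the figures quoted above.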

Various problems arise from the difficulties of accessing very large data sets. The

statistician's conventional viewpoint of a "flat" data file, in which rows represent objects

and columns represent variables, may bear no resemblance to the way the data are

stored (as in the text and Web transaction data sets described earlier). In many cases

the data are distributed, and stored on many machines. Obtaining a random sample from

data that are split up in this way is not a trivial matter. How to define the sampling frame

and how long it takes to access data become important issues.

Worse still, often the data set is constantly evolving—as with, for example, records of

telephone calls or electricity usage. Distributed or evolving data can multiply the size of a

data set many-fold as well as changing the nature of the problems requiring solution.
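
One standard way to draw a uniform random sample from records that arrive as a stream of unknown length is reservoir sampling. The text does not name this technique; it is offered here only as an illustration of how the sampling problem can be approached when the data cannot be held or indexed in one place. The sketch assumes the records can be read one at a time in a single pass, and the function name `reservoir_sample` is hypothetical.

```python
import random
from typing import Iterable, List, TypeVar

T = TypeVar("T")


def reservoir_sample(stream: Iterable[T], k: int, rng: random.Random) -> List[T]:
    """Return k records chosen uniformly at random from a stream of unknown length."""
    reservoir: List[T] = []
    for i, record in enumerate(stream):
        if i < k:
            # Fill the reservoir with the first k records.
            reservoir.append(record)
        else:
            # Keep the new record with probability k / (i + 1),
            # evicting a uniformly chosen current member.
            j = rng.randint(0, i)  # inclusive at both ends
            if j < k:
                reservoir[j] = record
    return reservoir


# Example: sample 5 call records from a stream of 1000 record identifiers.
sample = reservoir_sample(range(1000), 5, random.Random(0))
print(sample)
```

The sample is valid at any point during the pass, which suits data that keep growing. The sketch does not address the other issues raised above, such as defining the sampling frame across many machines or the cost of accessing each record.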

While the size of a data set may lead to difficulties, so also may other properties not

often found in standard statistical applications. We have already remarked that data

mining is typically a secondary process of data analysis; that is, the data were originally

collected for some other purpose. In contrast, much statistical work is concerned with

primary analysis: the data are collected with particular questions in mind, and then are

analyzed to answer those questions. Indeed, statistics includes subdisciplines of

experimental design and survey design—entire domains of expertise concerned with the

best ways to collect data in order to answer specific questions. When data are used to

address problems beyond those for which they were originally collected, they may not be