RetinaFace: Single-stage Dense Face Localisation in the Wild

Jiankang Deng* (1,2,4), Jia Guo* (2), Yuxiang Zhou (1), Jinke Yu (2), Irene Kotsia (3), Stefanos Zafeiriou (1,4)

(1) Imperial College London, (2) InsightFace, (3) Middlesex University London, (4) FaceSoft

Abstract

Though tremendous strides have been made in uncontrolled face detection, accurate and efficient face localisation in the wild remains an open challenge. This paper presents a robust single-stage face detector, named RetinaFace, which performs pixel-wise face localisation on various scales of faces by taking advantage of joint extra-supervised and self-supervised multi-task learning. Specifically, we make contributions in the following five aspects: (1) We manually annotate five facial landmarks on the WIDER FACE dataset and observe significant improvement in hard face detection with the assistance of this extra supervision signal. (2) We further add a self-supervised mesh decoder branch for predicting pixel-wise 3D face shape information in parallel with the existing supervised branches. (3) On the WIDER FACE hard test set, RetinaFace improves on the state-of-the-art average precision (AP) by 1.1%, achieving an AP of 91.4%. (4) On the IJB-C test set, RetinaFace enables state-of-the-art methods (ArcFace) to improve their results in face verification (TAR=89.59% for FAR=1e-6). (5) By employing light-weight backbone networks, RetinaFace can run in real time on a single CPU core for a VGA-resolution image. Extra annotations and code have been made available at: https://github.com/deepinsight/insightface/tree/master/RetinaFace.
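Contribution (4) reports face verification as a true accept rate (TAR) at a fixed false accept rate (FAR). A minimal sketch of how such an operating point can be read off from pair similarity scores follows, assuming that higher scores mean "more similar" and that a pair is accepted when its score is strictly above the threshold. The function name `tar_at_far` and the toy scores are hypothetical; this illustrates the metric only and is not the IJB-C evaluation protocol.

```python
import numpy as np

def tar_at_far(genuine, impostor, far):
    """Return (TAR, threshold) at a target false accept rate.

    genuine:  similarity scores of same-identity pairs.
    impostor: similarity scores of different-identity pairs.
    A pair is accepted when its score is strictly greater than the threshold.
    """
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.sort(np.asarray(impostor, dtype=float))[::-1]  # descending
    # Number of impostor pairs we are allowed to accept at this FAR.
    k = int(np.floor(far * impostor.size))
    # Using the k-th highest impostor score (0-based) as the threshold leaves
    # at most k impostors strictly above it, so the achieved FAR <= far.
    threshold = impostor[k] if k < impostor.size else -np.inf
    tar = np.mean(genuine > threshold)
    return tar, threshold

# Toy example: 4 genuine and 4 impostor scores, target FAR of 25%.
tar, thr = tar_at_far([0.9, 0.8, 0.7, 0.2], [0.1, 0.3, 0.5, 0.75], far=0.25)
```

Here one impostor (0.75) may be accepted, so the threshold settles at 0.5 and three of the four genuine pairs pass (TAR = 0.75). At FAR=1e-6 the same logic needs on the order of millions of impostor pairs before even one accepted impostor is allowed, which is why such low-FAR operating points are demanding.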

1. Introduction

Automatic face localisation is the prerequisite step of fa-

cial image analysis for many applications such as facial at-

tribute (e.g. expression [64] and age [38]) and facial identity

recognition [45, 31, 55, 11]. A narrow definition of face lo-

calisation may refer to traditional face detection [53, 62],

which aims at estimating the face bounding boxes without

any scale and position prior. Nevertheless, in this paper

*

Equal contributions.

Email: j.deng16@imperial.ac.uk; guojia@gmail.com

InsightFace is a nonprofit Github project for 2D and 3D face analysis.

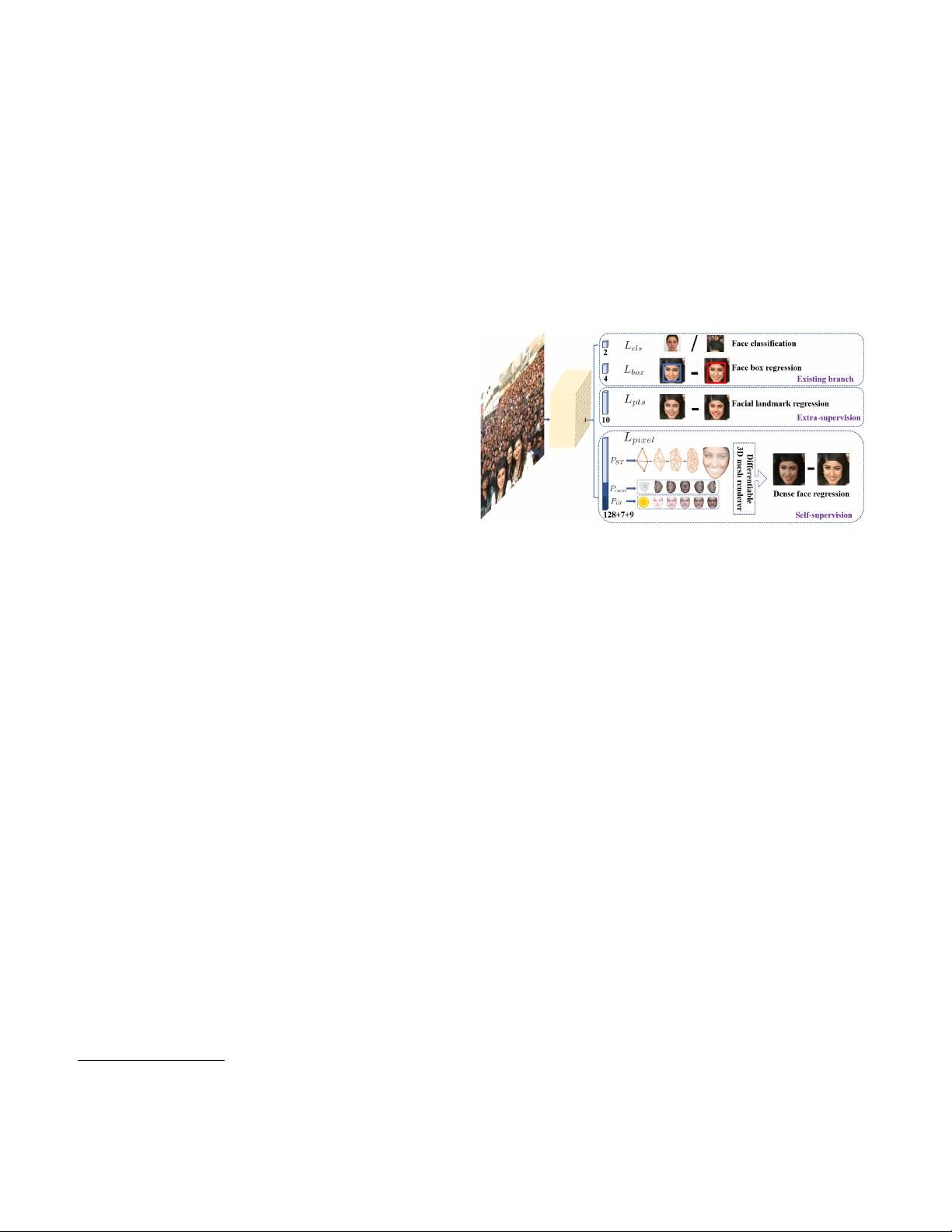

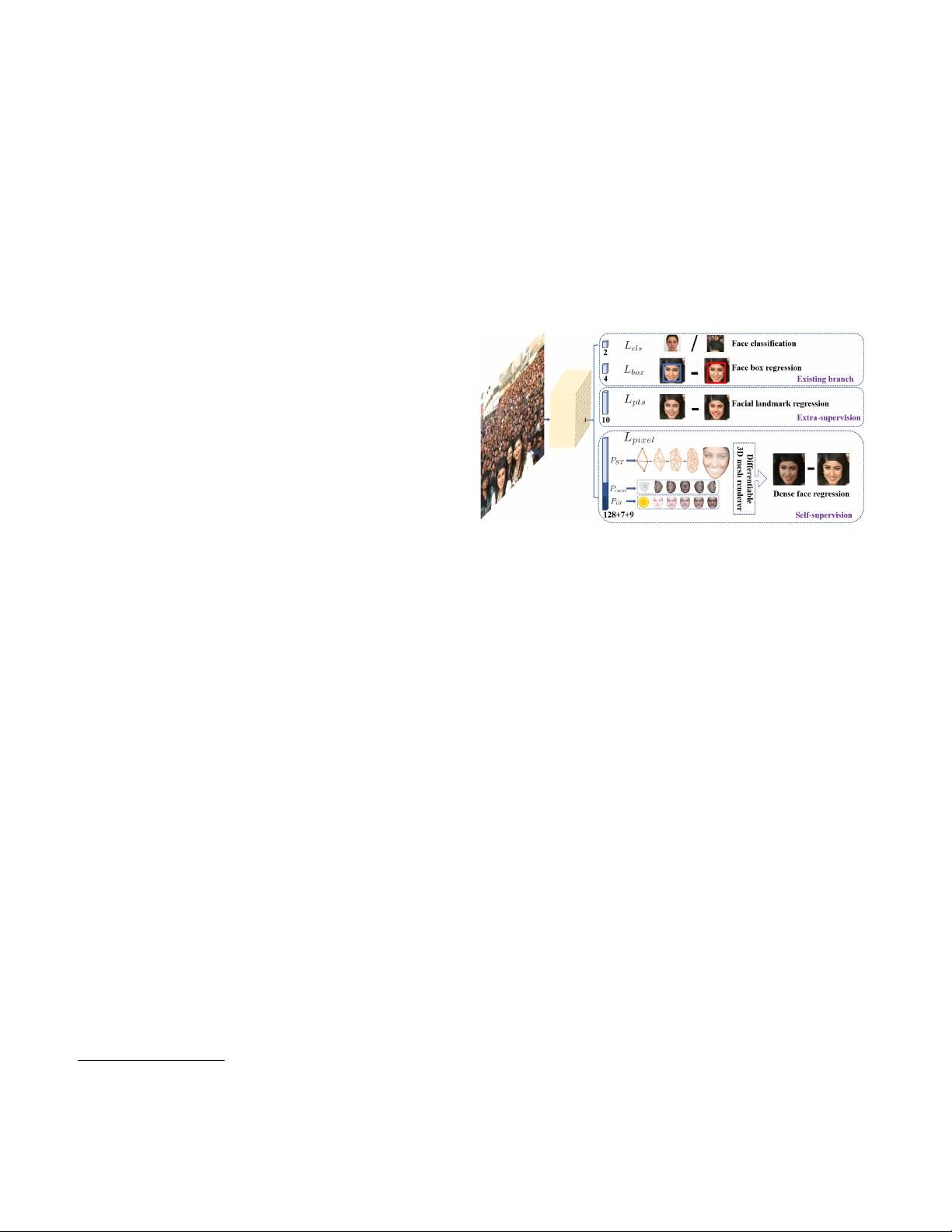

Figure 1. The proposed single-stage pixel-wise face localisation

method employs extra-supervised and self-supervised multi-task

learning in parallel with the existing box classification and regres-

sion branches. Each positive anchor outputs (1) a face score, (2) a

face box, (3) five facial landmarks, and (4) dense 3D face vertices

projected on the image plane.

we refer to a broader definition of face localisation which

includes face detection [39], face alignment [13], pixel-

wise face parsing [48] and 3D dense correspondence regres-

sion [2, 12]. That kind of dense face localisation provides

accurate facial position information for all different scales.
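Figure 1 lists four outputs per positive anchor. One way to train them jointly is a weighted sum of per-task losses in which the box, landmark and dense terms only count for positive (face) anchors. The following is a minimal NumPy sketch under stated assumptions: softmax cross-entropy for the face score, smooth-L1 for box and landmark regression, the dense 3D term supplied as a precomputed per-anchor value, hypothetical weights `lam_box=0.25`, `lam_pts=0.1`, `lam_dense=0.01`, and a plain mean over anchors. The function names and weights are illustrative and are not taken from this section.

```python
import numpy as np

def smooth_l1(pred, target, beta=1.0):
    """Smooth-L1 loss per anchor, summed over the last axis."""
    diff = np.abs(np.asarray(pred, dtype=float) - np.asarray(target, dtype=float))
    loss = np.where(diff < beta, 0.5 * diff ** 2 / beta, diff - 0.5 * beta)
    return loss.sum(axis=-1)

def softmax_ce(logits, labels):
    """Two-class (background=0 / face=1) softmax cross-entropy per anchor."""
    logits = np.asarray(logits, dtype=float)
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels]

def multitask_loss(cls_logits, labels, box_pred, box_gt, pts_pred, pts_gt,
                   dense_loss, lam_box=0.25, lam_pts=0.1, lam_dense=0.01):
    """Hypothetical multi-task objective over N anchors.

    cls_logits: (N, 2); labels: (N,) with 1 = positive, 0 = negative.
    box_*: (N, 4) box offsets; pts_*: (N, 10) five (x, y) landmarks.
    dense_loss: (N,) precomputed dense / self-supervised term per anchor.
    """
    labels = np.asarray(labels)
    pos = (labels == 1).astype(float)  # mask: regression only on positives
    l_cls = softmax_ce(cls_logits, labels)
    l_box = smooth_l1(box_pred, box_gt)
    l_pts = smooth_l1(pts_pred, pts_gt)
    l_dense = np.asarray(dense_loss, dtype=float)
    per_anchor = (l_cls
                  + lam_box * pos * l_box
                  + lam_pts * pos * l_pts
                  + lam_dense * pos * l_dense)
    return per_anchor.mean()
```

Masking with `pos` keeps background anchors from being pulled toward meaningless regression targets. A practical detector would likely also normalise by the number of positives and balance negatives against positives; this sketch omits both for brevity.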

Inspired by generic object detection methods [16, 43, 30, 41, 42, 28, 29], which embraced all the recent advances in deep learning, face detection has recently achieved remarkable progress [23, 36, 68, 8, 49]. Unlike generic object detection, face detection features smaller aspect-ratio variations (from 1:1 to 1:1.5) but much larger scale variations (from several pixels to a thousand pixels). The most recent state-of-the-art methods [36, 68, 49] focus on single-stage [30, 29] designs which densely sample face locations and scales on feature pyramids [28], demonstrating promising performance and running faster than two-stage methods [43, 63, 8]. Following this route, we improve the single-stage face detection framework and propose a state-of-the-art dense face localisation method by exploiting multi-task losses coming from strongly supervised and self-supervised signals. Our idea is exemplified in Fig. 1.
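Single-stage detectors of the kind described above densely sample face locations and scales on a feature pyramid. A minimal sketch of such dense anchor tiling follows, assuming three pyramid strides (8, 16, 32), two square anchor sizes per level, and anchor centres placed at feature-cell centres. The strides, sizes and the name `generate_anchors` are hypothetical and do not reproduce RetinaFace's configuration. Only square anchors are used because faces vary little in aspect ratio (1:1 to 1:1.5), so the anchor budget is spent on scale instead.

```python
import numpy as np

# Hypothetical configuration: pyramid stride -> square anchor side lengths (px).
ANCHOR_SIZES = {8: (16, 32), 16: (64, 128), 32: (256, 512)}

def generate_anchors(image_h, image_w, anchor_sizes=None):
    """Tile square anchors over every cell of each pyramid level.

    Returns an (N, 4) array of boxes (x1, y1, x2, y2) in image pixels.
    """
    if anchor_sizes is None:
        anchor_sizes = ANCHOR_SIZES
    all_anchors = []
    for stride, sizes in sorted(anchor_sizes.items()):
        feat_h = int(np.ceil(image_h / stride))
        feat_w = int(np.ceil(image_w / stride))
        # Anchor centres sit at the centre of each feature-map cell.
        ys = (np.arange(feat_h) + 0.5) * stride
        xs = (np.arange(feat_w) + 0.5) * stride
        cx, cy = np.meshgrid(xs, ys)  # each has shape (feat_h, feat_w)
        centres = np.stack([cx.ravel(), cy.ravel()], axis=1)
        for size in sizes:
            half = size / 2.0
            # 1:1 boxes: same half-extent in x and y around every centre.
            boxes = np.concatenate([centres - half, centres + half], axis=1)
            all_anchors.append(boxes)
    return np.concatenate(all_anchors, axis=0)

anchors = generate_anchors(480, 640)  # VGA input
```

For a 640x480 (VGA) input this configuration yields 12,600 anchors (9,600 + 2,400 + 600), most of them on the finest level, which is where the smallest faces would be matched.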

Typically, the face detection training process contains both classification and box regression losses [16]. Chen et al. [6] proposed to combine face detection and alignment in a joint cascade framework based on the observation that aligned


arXiv:1905.00641v2 [cs.CV] 4 May 2019