8 W.M. Kouw, M. Loog

Generalization Ultimately, we are not interested in the error of the trained

classifier on the given data set, but in the error on all possible future sa mples

e(h) = E

X ,Y

[h(x) 6= y]. The differe nc e between the true error and the empirical

error is known as the generalization error: e(h)− ˆe(h) [11,151]. Ideally, we would

like to know if the gener alization error will be small, i.e., that our classifier will

be approximately correct. However, because classifiers are functions of data sets,

and data sets are random, we can o nly de scribe how probable it is that our

classifier will be approximately correct. We can say that, with probability 1 − δ,

where δ > 0, the following inequality bolds (Theorem 2.2 from [151]):

e(h) − ˆe(h) ≤

r

1

2n

log |H | + log

2

δ

. (2)

where |H| denotes the cardinality of the finite hypothesis spac e, or the number

of classification functions that are being considered [193,119,151]. This result

is known as a Probably Approximately Corre c t (PAC) bound. In words, the

difference between the true error, e(h), and the empirical error, ˆe(h), of a classifier

is less than the square root of the logarithm of the size of the hypothesis space

|H|, plus the log of 2 over δ, normalized by twice the sample size n. In order to

achieve a similar result fo r the case of an infinite hypothesis spa c e (e.g. line ar

classifiers ), a measure of the c omplexity of the hypothesis space is r equired.

Generalization error bounds are interesting because they analyze what a clas-

sifier’s performance depends on. In this case, it suggest choo sing a smaller or

simpler hypothesis space when the sample size is low. Many variants of bounds

exist. Some use different measures of complexity, such as Rademacher complex-

ity [14] or Vapnik-Chervonenkis dimensions [20,197], while others use concepts

from Bayesian inference [147,131,15].

Bounds can incorporate assumptions on the pr oblem setting [12,151,54]. For

example, one can assume that the posterior distributions in each domain are

equal a nd obtain a bound for a classifier that exploits that assumption (c.f.

Equation 6). Assumptions restrict the problem setting, i.e., settings where that

assumption is invalid are disre garded. This often means that the bound is tighter

and a more accurate desc ription of the behaviour of the classifier can be found.

Such results have inspired new algor ithms in the past, such as Adaboost or the

Support Vector Machine [69,47].

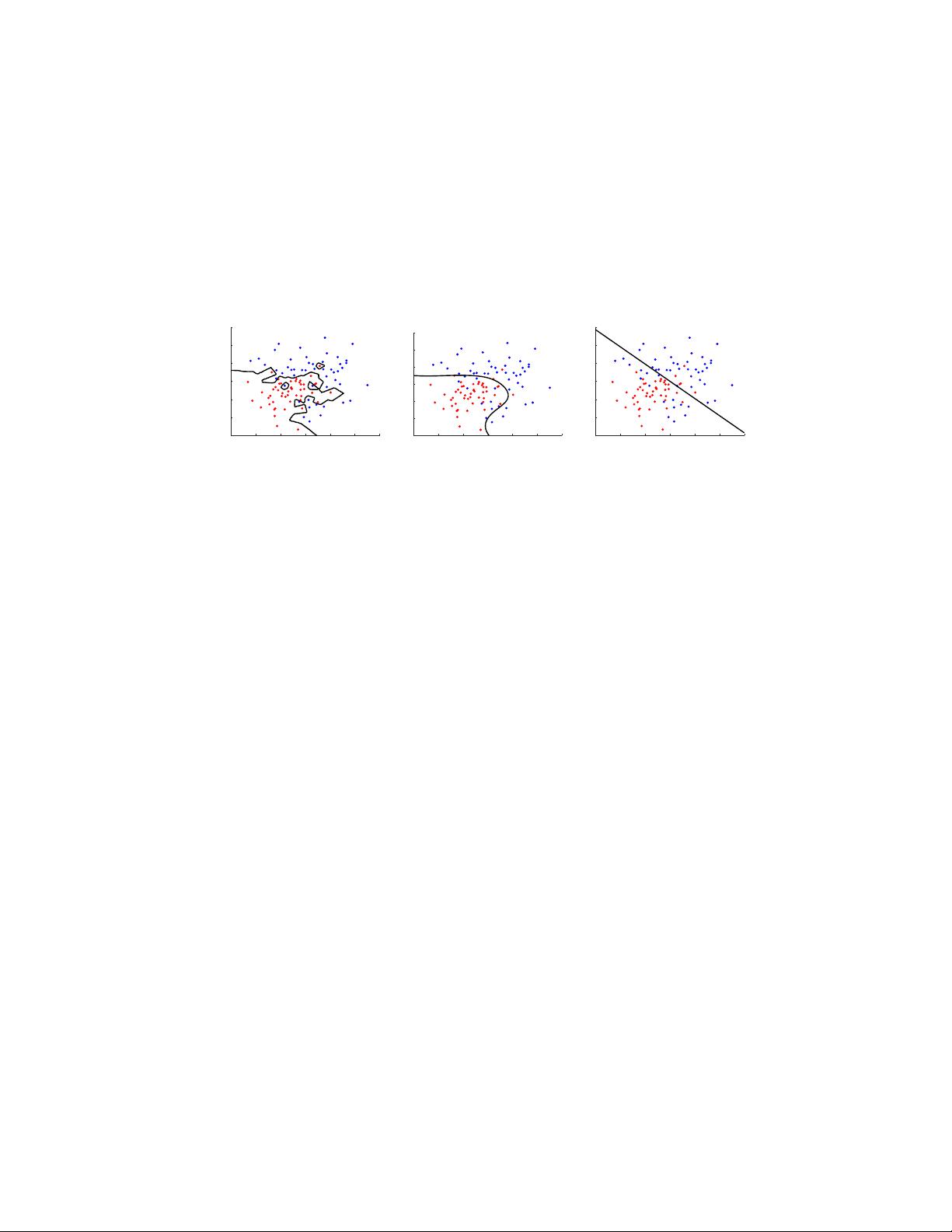

Regularization Generalization error bounds tell us that the complexity, or

flexibility, of a c lassifier has to be traded off with the number of available train-

ing s amples [61,197,54]. In particular, a flexible model c an minimize the error

on a given data set completely, but will be too specific to generalize to new

samples. This is known as overfitting. Figure 2 (left) illustrates an example of

2-dimensional classification problem with a classifier that ha s perfectly fitted to

the training set. As can be imagined, it will not perform as well for new s amples.

In order to combat overfitting, an additional term is introduced in the empiric al