Kalman Filtering: Theory and Practice Using MATLAB, Third Edition: A Detailed Overview

Updated 2024-06-29

收藏 5.11MB PDF 举报

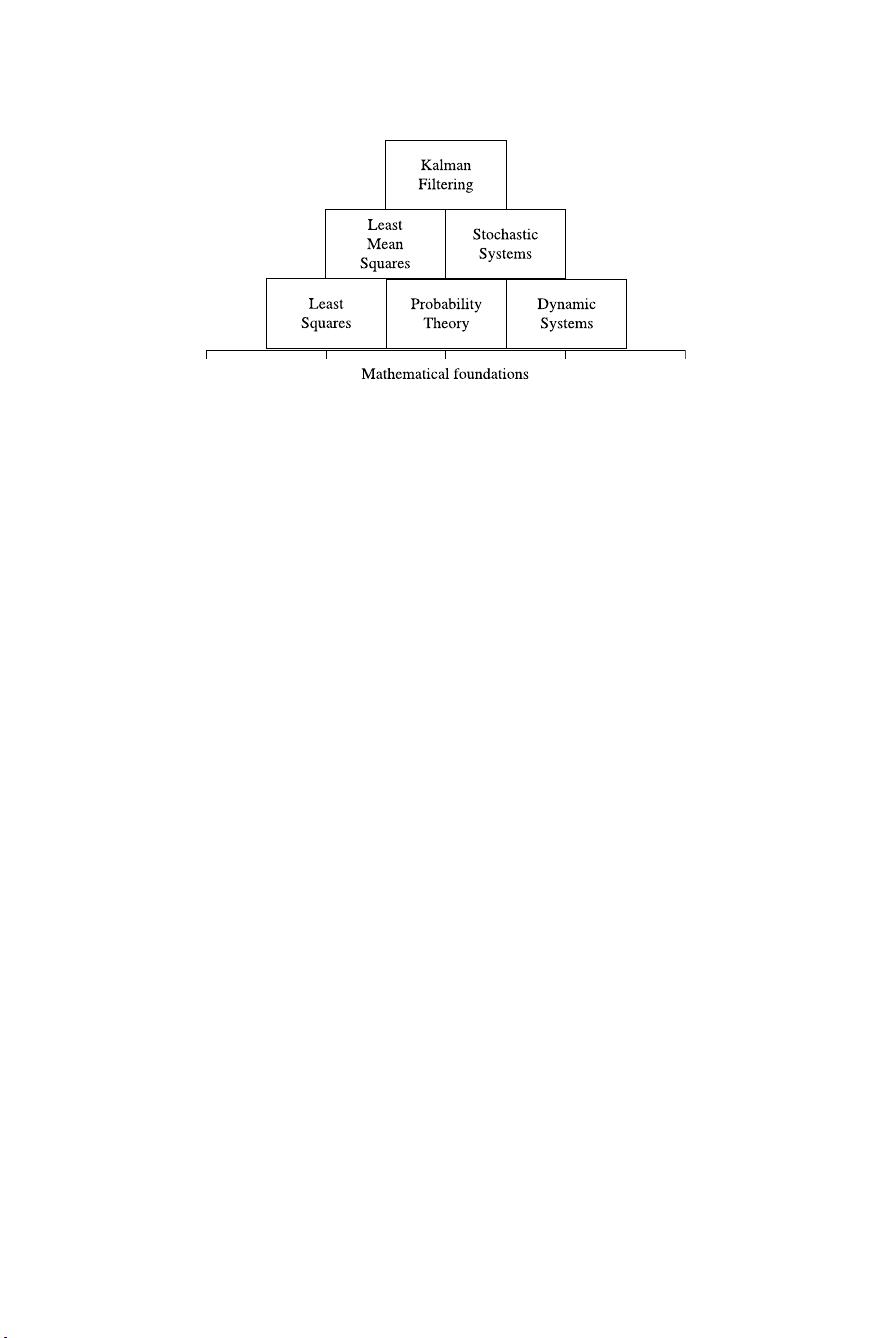

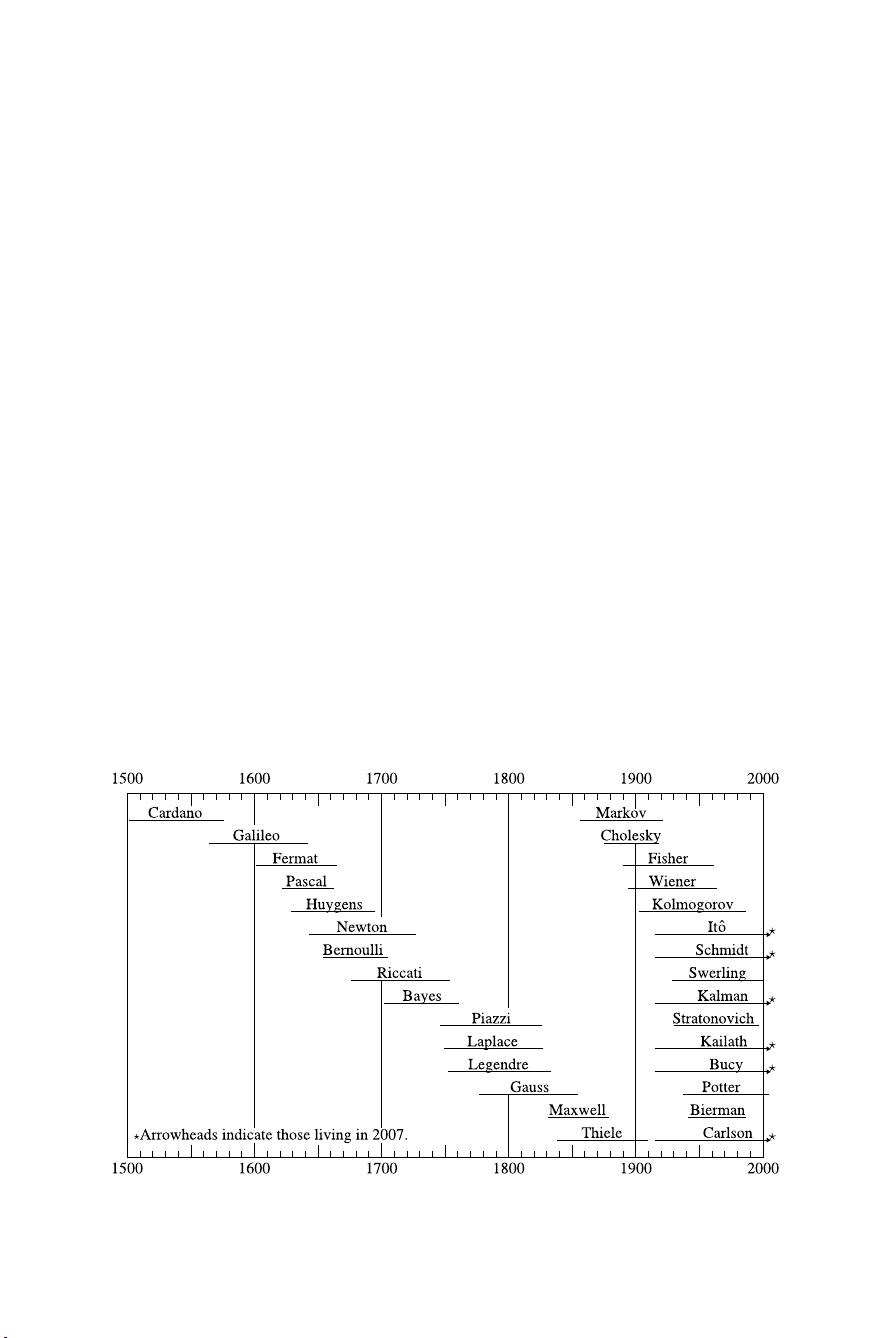

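*Kalman Filtering: Theory and Practice Using MATLAB* (Third Edition), co-authored by Professor Mohinder Grewal and Angus Andrews, was published in 2008 by John Wiley & Sons, Inc. The book focuses on Kalman filtering, a key signal processing technique that plays an important role in fields such as navigation, control systems, signal estimation, and prediction. The Kalman filter is a recursive estimator, closely related to recursive least squares, that estimates the state of a dynamic system while accounting for noise and uncertainty in the observations.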
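To make the recursion concrete, the standard discrete-time linear Kalman filter can be written as follows. The symbols are a common textbook convention assumed here for illustration, not necessarily the book's own notation: $F$ is the state transition matrix, $H$ the measurement matrix, $Q$ and $R$ the process and measurement noise covariances, $z_k$ the measurement, and $P$ the estimation error covariance.

$$
\begin{aligned}
\text{Predict:}\quad & \hat{x}_{k|k-1} = F\,\hat{x}_{k-1|k-1}, \\
& P_{k|k-1} = F\,P_{k-1|k-1}\,F^{\mathsf{T}} + Q, \\
\text{Update:}\quad & K_k = P_{k|k-1}\,H^{\mathsf{T}}\left(H\,P_{k|k-1}\,H^{\mathsf{T}} + R\right)^{-1}, \\
& \hat{x}_{k|k} = \hat{x}_{k|k-1} + K_k\left(z_k - H\,\hat{x}_{k|k-1}\right), \\
& P_{k|k} = \left(I - K_k H\right)P_{k|k-1}.
\end{aligned}
$$

Each step uses only the previous estimate and the newest measurement, which is what makes the method recursive.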

The core content of the book covers the following areas:

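1. **Theoretical foundations**: An in-depth explanation of the principles of Kalman filtering, including random processes, linear system theory, state-space models, the prediction and update steps, covariance matrices, and the Kalman gain. Readers learn how the filter combines the system's dynamic model with observation data to estimate the state in real time and reduce estimation error.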

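2. **MATLAB implementation**: Using MATLAB as the example environment, the authors describe in detail how to design and simulate filters in real projects. The book provides many code examples that help readers learn to write and debug Kalman filtering algorithms.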

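3. **Application examples**: The book includes multiple examples covering areas such as aviation navigation, autonomous driving, remote sensing, and communication systems, showing how Kalman filtering performs on practical problems and how it can be tuned.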

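4. **Extended topics**: Beyond the basic linear Kalman filter, the book may also discuss nonlinear extensions such as the unscented Kalman filter (UKF) and the particle filter (PF), along with adaptive filtering and other advanced topics, to meet the needs of readers with different specializations.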
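The prediction and update steps listed under the theoretical foundations can be sketched in a few lines. This is a minimal illustration, not code from the book: it is written in Python rather than MATLAB, it assumes a scalar state with a constant-value model, and the function names (`kalman_predict`, `kalman_update`, `run_filter`) and noise values are hypothetical choices made for this example.

```python
import random


def kalman_predict(x, p, f=1.0, q=1e-4):
    """Propagate the estimate x and its variance p one step forward.

    f is the (assumed scalar) state transition, q the process noise variance.
    """
    x_pred = f * x
    p_pred = f * p * f + q
    return x_pred, p_pred


def kalman_update(x_pred, p_pred, z, h=1.0, r=0.25):
    """Correct the predicted estimate with a new measurement z.

    h is the (assumed scalar) measurement model, r the measurement noise variance.
    """
    s = h * p_pred * h + r                  # innovation variance
    k = p_pred * h / s                      # Kalman gain
    x_new = x_pred + k * (z - h * x_pred)   # blend prediction and measurement
    p_new = (1.0 - k * h) * p_pred          # variance shrinks after the update
    return x_new, p_new, k


def run_filter(measurements, x0=0.0, p0=1.0, q=1e-4, r=0.25):
    """Run predict/update over a sequence of scalar measurements."""
    x, p = x0, p0
    estimates = []
    for z in measurements:
        x, p = kalman_predict(x, p, q=q)
        x, p, _ = kalman_update(x, p, z, r=r)
        estimates.append(x)
    return estimates, p


if __name__ == "__main__":
    # Simulated noisy readings of a constant value (illustrative data only).
    random.seed(0)
    true_value = 5.0
    zs = [true_value + random.gauss(0.0, 0.5) for _ in range(200)]
    estimates, p_final = run_filter(zs)
    print(f"final estimate: {estimates[-1]:.3f}, final variance: {p_final:.5f}")
```

The gain `k` weights the measurement against the prediction: a large measurement variance `r` pulls `k` toward zero so the filter trusts its model, while a large predicted variance pushes `k` toward one so the filter trusts the data.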

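According to the copyright notice, any reproduction, storage, or transmission of the book requires explicit permission from John Wiley & Sons, Inc. Readers interested in reusing the book's content can request permission through the contact details given in that notice.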

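*Kalman Filtering: Theory and Practice Using MATLAB* (Third Edition) is a practical textbook, suitable both for engineering students and researchers learning Kalman filtering theory and for professionals who need to apply the technique in real projects. Readers come away with a solid understanding of the essentials of Kalman filtering and of how to implement and tune it efficiently in MATLAB.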
