A New Method for Extracting Straight Lines

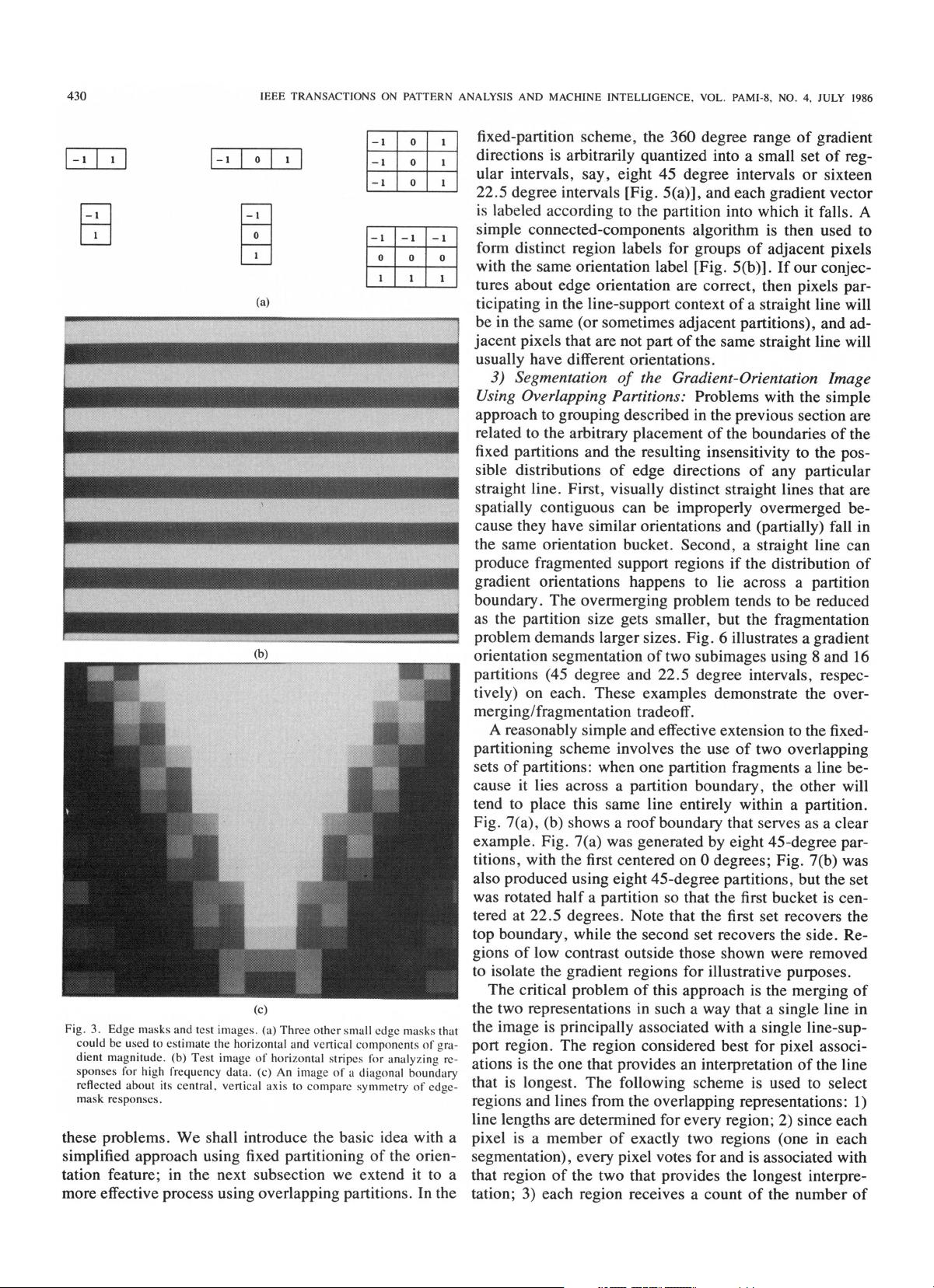

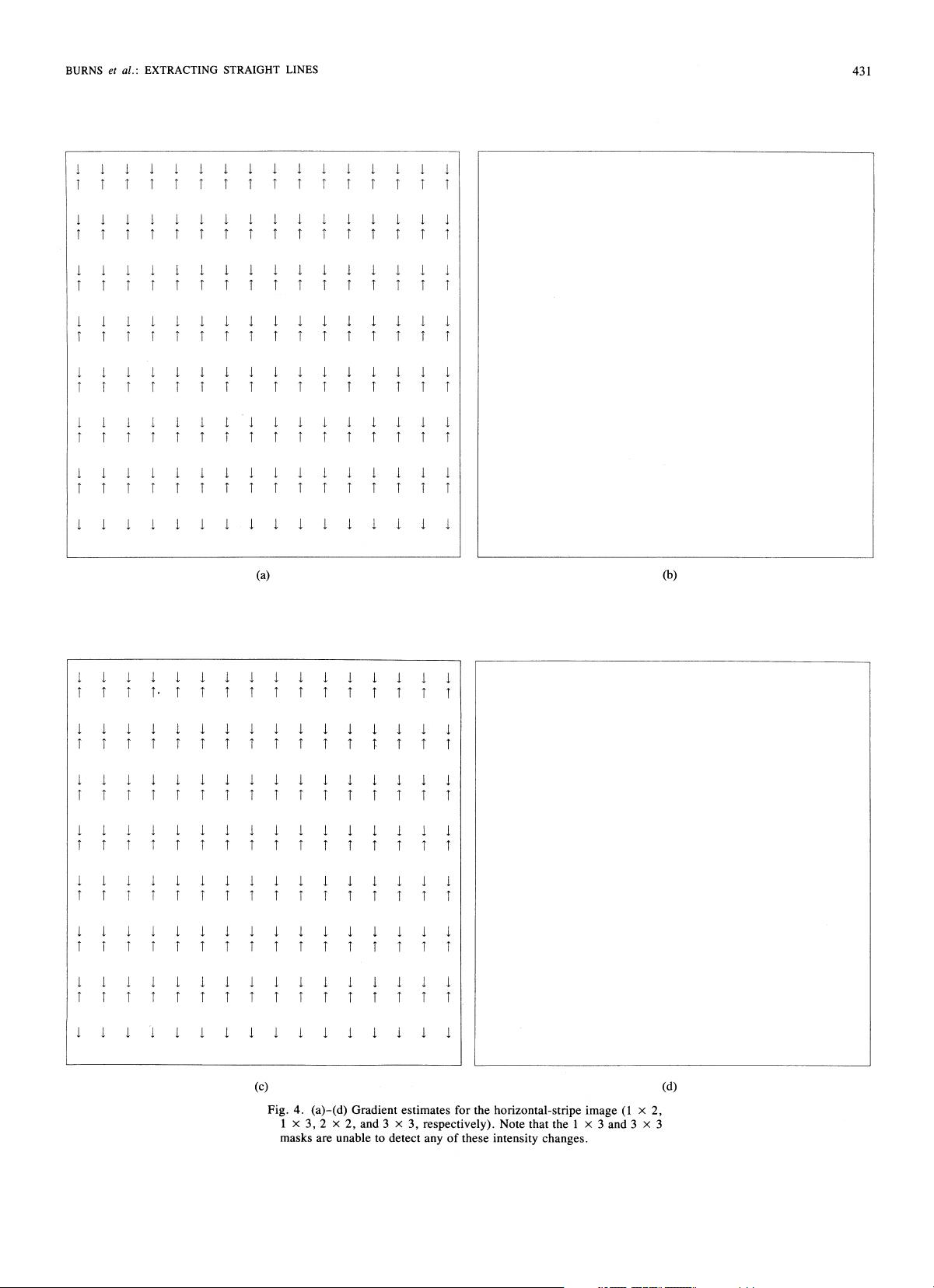

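"Extracting Straight Lines: Line Detection and Edge Detection"

In computer vision and image processing, extracting straight lines is a basic and important task, central to pattern recognition, machine intelligence, and image analysis. The paper "Extracting Straight Lines", by J. Brian Burns, Allen R. Hanson, and Edward M. Riseman (all IEEE members), appeared in the July 1986 issue of IEEE Transactions on Pattern Analysis and Machine Intelligence (vol. PAMI-8, no. 4) and proposes a new method for extracting straight lines from intensity images.

According to the abstract, the method focuses on each pixel's gradient orientation rather than its gradient magnitude, and groups pixels into line-support regions of similar gradient orientation. The structure of the intensity surface associated with each region is then analyzed to determine the location and properties of the edge. The resulting regions and extracted edge parameters form a low-level representation of the intensity variations in the image that can be used for a variety of purposes. The abstract attributes the algorithm's effectiveness to two key points: 1) gradient orientation, rather than gradient magnitude, is the initial organizing criterion, applied before any straight lines are extracted; 2) the global context of the intensity variations associated with a straight line is determined before any local decisions are made about the participating edge elements.

The keywords include boundary extraction, edge analysis, and gradient-based segmentation. Edge analysis is an image-processing technique for identifying significant changes in brightness or color, while gradient-based segmentation uses per-pixel gradient information to separate an image into regions. The approach is especially useful for images that contain many straight-line structures, such as architectural drawings and road networks, because straight lines are a basic component of many structures and shapes.

In practice, straight-line detection can be used for road detection in autonomous driving, environment understanding in robot navigation, and text recognition in document analysis. The Hough transform, for example, is a classic line-detection method, but its computational cost is relatively high, and the method proposed in this paper may offer a more efficient alternative. The technique may also be relevant to image restoration, object detection, and image classification.

The paper's contribution is an improved line-extraction strategy: by organizing pixels by gradient orientation and taking global context into account before making local decisions, it improves on traditional methods in both the accuracy and the efficiency of line detection. For modern computer vision systems that depend on precise image-feature analysis, this is a valuable advance.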
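The grouping step described in the abstract can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the central-difference gradient, the single fixed partition into `n_buckets` orientation ranges, the 8-connected flood fill, and the names `gradient_orientation` and `line_support_regions` are all assumptions made for illustration. The later step of analyzing each region's intensity surface to locate the edge is not shown.

```python
import math
from collections import deque


def gradient_orientation(img):
    """Return per-pixel gradient magnitude and orientation in [0, 2*pi).

    Uses central differences with clamped borders (an assumption;
    the summary does not specify the gradient operator).
    """
    h, w = len(img), len(img[0])
    mag = [[0.0] * w for _ in range(h)]
    ori = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
            gy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
            mag[y][x] = math.hypot(gx, gy)
            ori[y][x] = math.atan2(gy, gx) % (2 * math.pi)
    return mag, ori


def line_support_regions(img, n_buckets=8, min_mag=1e-9):
    """Group pixels into connected regions of similar gradient orientation.

    Returns (labels, count): labels[y][x] is 0 for pixels with no usable
    gradient, otherwise a region id in 1..count.
    """
    mag, ori = gradient_orientation(img)
    h, w = len(img), len(img[0])
    width = 2 * math.pi / n_buckets

    # Quantize orientation into buckets; magnitude only filters out
    # pixels whose gradient is zero and whose orientation is undefined.
    bucket = [
        [int(ori[y][x] / width) % n_buckets if mag[y][x] > min_mag else -1
         for x in range(w)]
        for y in range(h)
    ]

    labels = [[0] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if bucket[y][x] < 0 or labels[y][x]:
                continue
            # Breadth-first flood fill over 8-connected neighbors
            # that fall in the same orientation bucket.
            count += 1
            b = bucket[y][x]
            labels[y][x] = count
            queue = deque([(y, x)])
            while queue:
                cy, cx = queue.popleft()
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = cy + dy, cx + dx
                        if (0 <= ny < h and 0 <= nx < w
                                and not labels[ny][nx]
                                and bucket[ny][nx] == b):
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count


if __name__ == "__main__":
    # A vertical step edge: dark on the left, bright on the right.
    image = [[0, 0, 0, 10, 10, 10] for _ in range(6)]
    labels, count = line_support_regions(image)
    print(count)
    for row in labels:
        print(row)
```

For the step-edge image in the usage example, the two pixel columns on either side of the step share one gradient orientation and end up in a single region, even though no magnitude threshold was tuned. One limitation of using a single fixed partition is that a line whose orientation lies near a bucket boundary can be split across two buckets.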
