Published as a conference paper at ICLR 2020

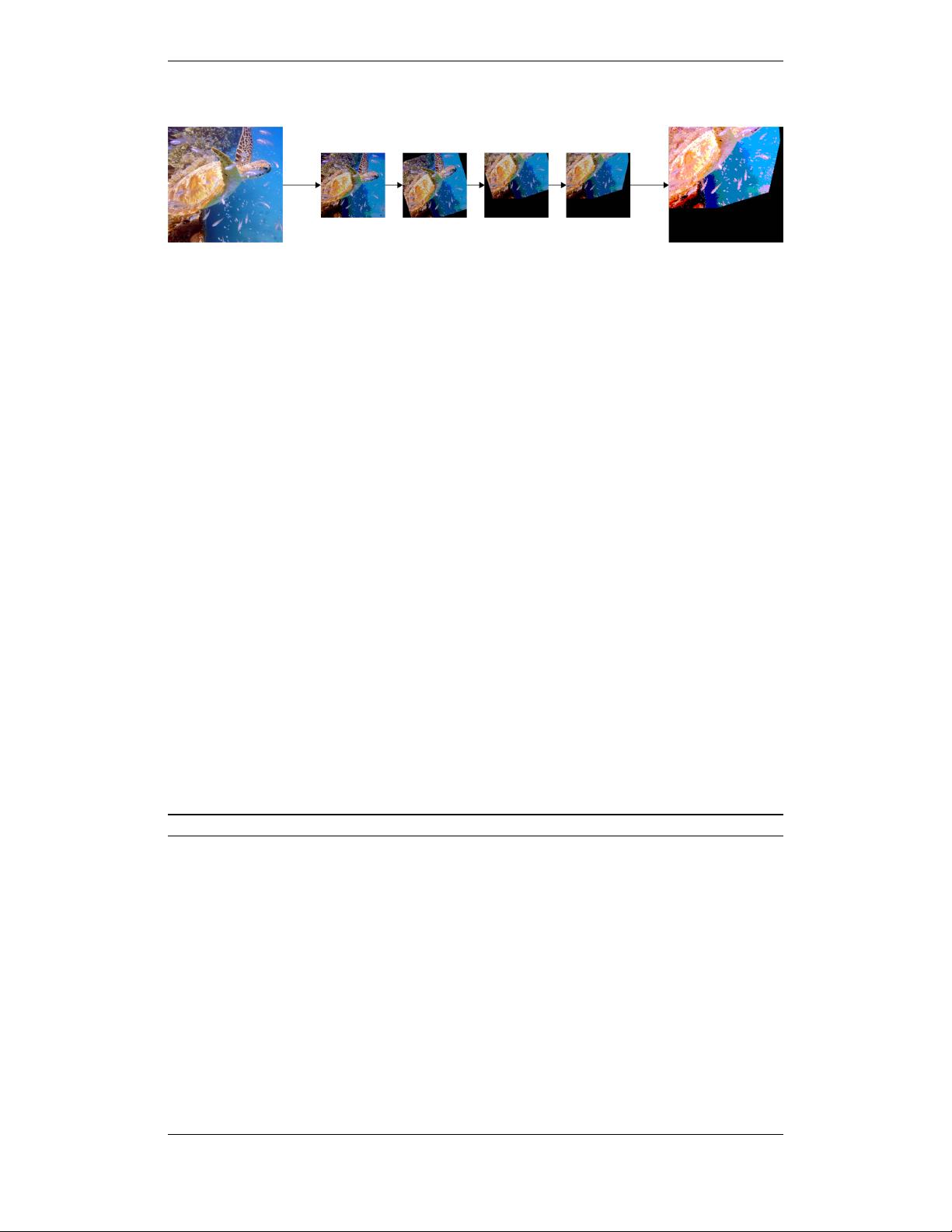

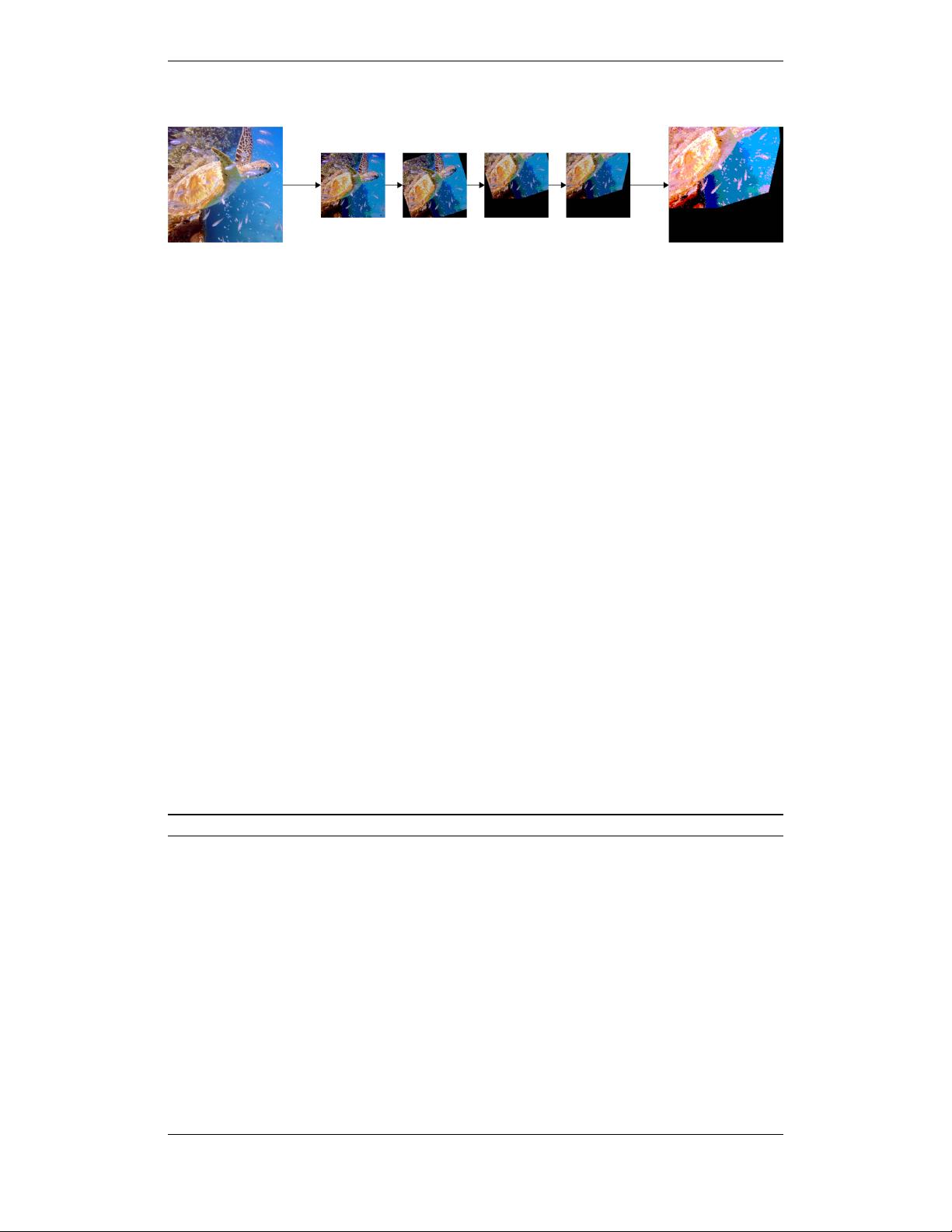

Figure 3: A cascade of successive compositions can produce images which drift far from the original image, and lead to unrealistic images. However, this divergence can be balanced by controlling the number of steps. To increase variety, we generate multiple augmented images and mix them.

et al., 2017; Tokozume et al., 2018). Guo et al. (2019) show that Mixup can be improved with an adaptive mixing policy, so as to prevent manifold intrusion. Separate from these approaches are learned augmentation methods such as AutoAugment (Cubuk et al., 2018), where a group of augmentations is tuned to optimize performance on a downstream task. Patch Gaussian augments data with Gaussian noise applied to a randomly chosen portion of an image (Lopes et al., 2019). A popular way to make networks robust to $\ell_p$ adversarial examples is with adversarial training (Madry et al., 2018), which we use in this paper. However, this tends to increase training time by an order of magnitude and substantially degrades accuracy on non-adversarial images (Raghunathan et al., 2019).

3 AUGMIX

AUGMIX is a data augmentation technique which improves model robustness and uncertainty estimates, and slots easily into existing training pipelines. At a high level, AUGMIX combines simple augmentation operations with a consistency loss. These augmentation operations are sampled stochastically and layered to produce a high diversity of augmented images. We then enforce a consistent embedding by the classifier across diverse augmentations of the same input image, using the Jensen-Shannon divergence as a consistency loss.
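As an illustration of the consistency term, the following is a minimal NumPy sketch. It assumes the standard three-way generalization of the Jensen-Shannon divergence (the mean KL divergence of each distribution to their average); the function names `softmax`, `kl`, and `js_consistency` and the `eps` smoothing constant are illustrative choices, not taken from the text.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over the last axis."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def kl(p, q, eps=1e-12):
    """KL(p || q) per example; eps (an assumption) guards against log(0)."""
    return np.sum(p * (np.log(p + eps) - np.log(q + eps)), axis=-1)

def js_consistency(p_orig, p_aug1, p_aug2):
    """Three-way Jensen-Shannon divergence between predicted distributions.

    Each argument has shape (batch, num_classes) and rows summing to 1.
    Returns one non-negative value per example.
    """
    mixture = (p_orig + p_aug1 + p_aug2) / 3.0
    return (kl(p_orig, mixture) + kl(p_aug1, mixture) + kl(p_aug2, mixture)) / 3.0
```

The value is zero when the classifier makes identical predictions on all three views and grows as they disagree, which is the behaviour a consistency loss needs.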

Mixing augmentations allows us to generate diverse transformations, which are important for inducing robustness, as a common failure mode of deep models in corruption robustness is the memorization of fixed augmentations (Vasiljevic et al., 2016; Geirhos et al., 2018). Previous methods have attempted to increase diversity by directly composing augmentation primitives in a chain, but this can cause the image to quickly degrade and drift off the data manifold, as depicted in Figure 3. Such image degradation can be mitigated, and the augmentation diversity maintained, by mixing together the results of several augmentation chains in convex combinations. A concrete account of the algorithm is given in the pseudocode below.
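To spell out the convex-combination claim, the mixing can be written as follows, using the pseudocode's notation ($k$ chains, Dirichlet weights $w_i$, Beta weight $m$). The subscripted $\text{chain}_i$ is shorthand introduced here for the chain sampled at iteration $i$.

```latex
\begin{aligned}
x_{\text{aug}} &= \sum_{i=1}^{k} w_i \, \text{chain}_i(x_{\text{orig}}),
  \qquad w_i \ge 0, \quad \sum_{i=1}^{k} w_i = 1, \\
x_{\text{augmix}} &= m \, x_{\text{orig}} + (1 - m) \, x_{\text{aug}},
  \qquad m \in [0, 1], \\
m + \sum_{i=1}^{k} (1 - m) \, w_i &= m + (1 - m) = 1 .
\end{aligned}
```

The final image is therefore itself a convex combination of the original and the $k$ chain outputs. Provided each operation keeps pixel values in the valid range, every pixel of $x_{\text{augmix}}$ lies between the smallest and largest value that pixel takes across those images.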

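Algorithm: AUGMIX Pseudocode
1: Input: Model $\hat{p}$, Classification Loss $\mathcal{L}$, Image $x_{\text{orig}}$, Operations $\mathcal{O} = \{\text{rotate}, \ldots, \text{posterize}\}$
2: function AugmentAndMix($x_{\text{orig}}$, $k = 3$, $\alpha = 1$)
3:   Fill $x_{\text{aug}}$ with zeros
4:   Sample mixing weights $(w_1, w_2, \ldots, w_k) \sim \text{Dirichlet}(\alpha, \alpha, \ldots, \alpha)$
5:   for $i = 1, \ldots, k$ do
6:     Sample operations $\text{op}_1, \text{op}_2, \text{op}_3 \sim \mathcal{O}$
7:     Compose operations with varying depth $\text{op}_{12} = \text{op}_2 \circ \text{op}_1$ and $\text{op}_{123} = \text{op}_3 \circ \text{op}_2 \circ \text{op}_1$
8:     Sample uniformly from one of these operations $\text{chain} \sim \{\text{op}_1, \text{op}_{12}, \text{op}_{123}\}$
9:     $x_{\text{aug}}$ += $w_i \cdot \text{chain}(x_{\text{orig}})$   ▷ Addition is elementwise
10:   end for
11:   Sample weight $m \sim \text{Beta}(\alpha, \alpha)$
12:   Interpolate with rule $x_{\text{augmix}} = m\,x_{\text{orig}} + (1 - m)\,x_{\text{aug}}$
13:   return $x_{\text{augmix}}$
14: end function
15: $x_{\text{augmix1}}$ = AugmentAndMix($x_{\text{orig}}$)   ▷ $x_{\text{augmix1}}$ is stochastically generated
16: $x_{\text{augmix2}}$ = AugmentAndMix($x_{\text{orig}}$)   ▷ $x_{\text{augmix1}} \neq x_{\text{augmix2}}$
17: Loss Output: $\mathcal{L}(\hat{p}(y \mid x_{\text{orig}}), y) + \lambda\,\text{Jensen-Shannon}(\hat{p}(y \mid x_{\text{orig}}); \hat{p}(y \mid x_{\text{augmix1}}); \hat{p}(y \mid x_{\text{augmix2}}))$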
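The AugmentAndMix function can be sketched in NumPy as shown here. This is an illustration under stated assumptions rather than a reference implementation: the four operations are simplified stand-ins for the operation set $\mathcal{O}$ (which the pseudocode only partially lists), images are assumed to be float arrays in $[0, 1]$, and the names `augment_and_mix`, `OPERATIONS`, and the `rng` parameter are hypothetical.

```python
import numpy as np

# Simplified stand-in operations (assumptions, not the paper's exact set).
# Each maps a float image in [0, 1] of shape (H, W, C) to the same shape and range.
def rotate_180(img):
    return np.rot90(img, k=2, axes=(0, 1))

def translate_x(img, pixels=2):
    return np.roll(img, shift=pixels, axis=1)  # wrap-around shift

def posterize(img, bits=4):
    levels = 2 ** bits - 1
    return np.round(img * levels) / levels

def solarize(img, threshold=0.5):
    return np.where(img >= threshold, 1.0 - img, img)

OPERATIONS = [rotate_180, translate_x, posterize, solarize]

def augment_and_mix(x_orig, k=3, alpha=1.0, rng=None):
    """Mix k randomly composed augmentation chains with the original image."""
    rng = np.random.default_rng() if rng is None else rng
    x_aug = np.zeros_like(x_orig, dtype=np.float64)
    weights = rng.dirichlet([alpha] * k)        # convex mixing weights
    for w in weights:
        ops = rng.choice(len(OPERATIONS), size=3)  # op_1, op_2, op_3
        # Picking a depth in {1, 2, 3} uniformly is equivalent to sampling
        # uniformly from {op_1, op_12, op_123}.
        depth = rng.integers(1, 4)
        x_chain = x_orig
        for idx in ops[:depth]:
            x_chain = OPERATIONS[idx](x_chain)
        x_aug += w * x_chain                    # elementwise addition
    m = rng.beta(alpha, alpha)                  # interpolation weight
    return m * x_orig + (1.0 - m) * x_aug
```

Calling `augment_and_mix` twice on the same image yields the two stochastic views $x_{\text{augmix1}}$ and $x_{\text{augmix2}}$ used in the loss. Because every stand-in operation preserves the $[0, 1]$ range, the mixed output stays in that range as well.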
